Viewing and comparing, side by side, the annotations that different annotators have made on the same text documents presents several challenges.

Firstly, aligning the annotations for accurate comparison can be difficult, especially if the annotators have interpreted the text differently. This variability leads to inconsistencies that complicate the review process.

Secondly, managing and displaying multiple annotations simultaneously requires robust interface design to prevent visual clutter and ensure that differences are clearly discernible.

Lastly, synthesizing the feedback from these comparisons into actionable insights demands careful analysis. Even minor discrepancies might reveal underlying ambiguities in the text or in the annotation guidelines, which in turn call for adjustments to annotator training or instructions to keep future annotations consistent and accurate.

Generative AI Lab has enhanced its capabilities by improving the feature that allows users to view two or more completions side by side. Users can now select any two completions for a more detailed comparison, facilitating a deeper understanding of the differences and discrepancies between them. This upgraded functionality aids in pinpointing inconsistencies more effectively, ensuring a thorough review process and contributing to higher accuracy and quality in the annotations.

Using this feature is straightforward:

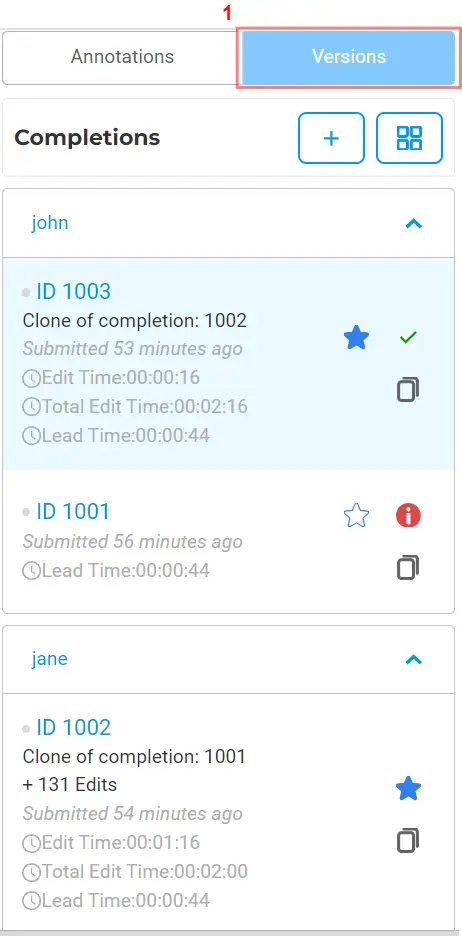

- In Task View, open the desired task and click the Versions tab in the right-side panel. This lists all completions for the selected task.

- In the Completion Section, click the “View All Completions” button.

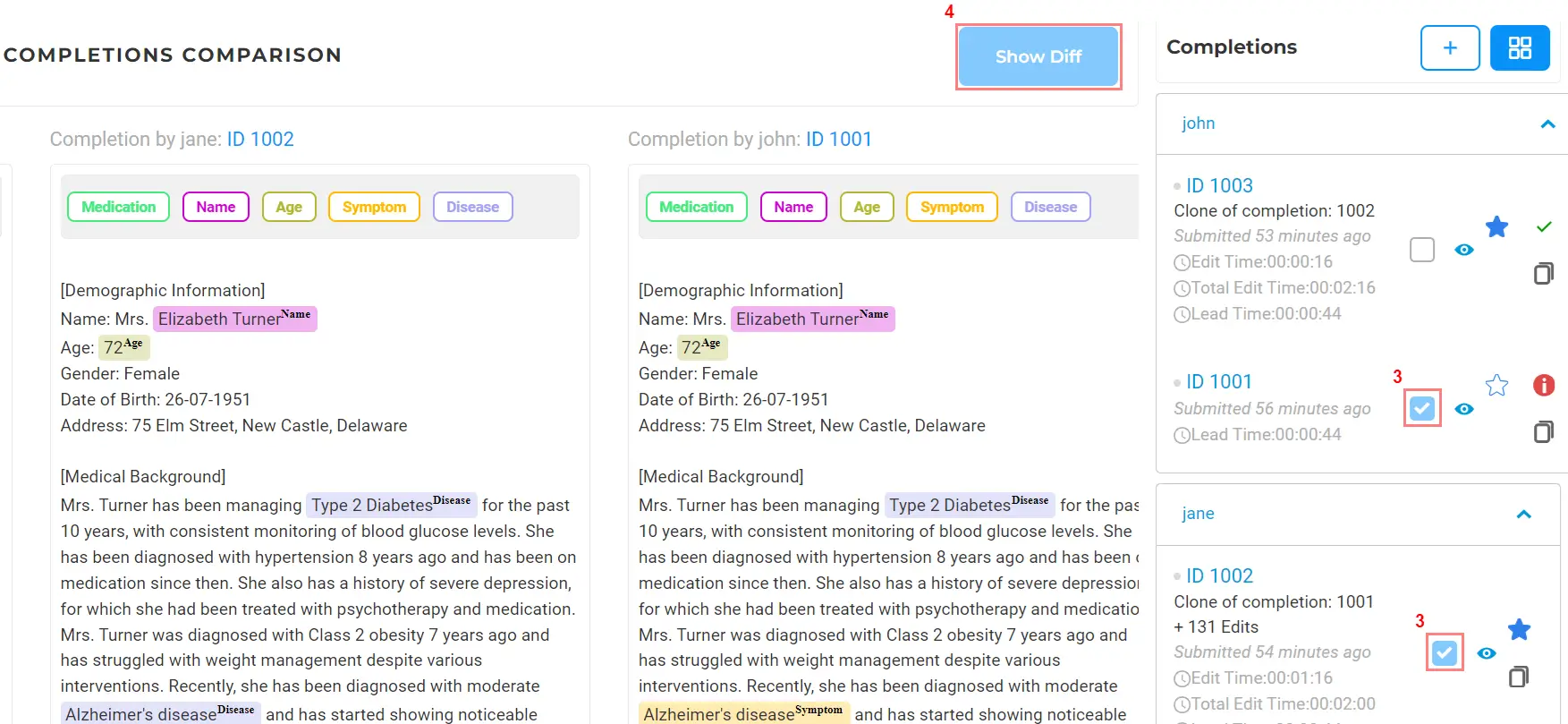

- Select any two completions in the right-side completion list.

- Click the “Show Diff” button to display the detailed diff view.

Such a comparison tool helps in identifying inconsistencies and understanding variations in annotation approaches, enabling more effective reviews and refinements of the annotated data. This feature enhances the accuracy of the training data and improves the overall quality of the annotations by streamlining the review process.
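To make the idea of a completion diff concrete, here is a minimal Python sketch of how two annotators' completions could be compared outside the UI. It is an illustration only, not Generative AI Lab's implementation or export format: the `Span` structure, the `diff_completions` function, the sample text, and the labels are all hypothetical, and the sketch assumes each completion is a list of character-offset spans with one label per span.

```python
from typing import Dict, List, NamedTuple


class Span(NamedTuple):
    """Hypothetical representation of one annotation in a completion."""
    start: int   # character offset where the annotation begins
    end: int     # character offset where it ends (exclusive)
    label: str   # label assigned by the annotator


def diff_completions(a: List[Span], b: List[Span]) -> Dict[str, list]:
    """Split two completions into agreements, relabeled spans, and
    spans that appear in only one of the two completions."""
    # Index labels by position; assumes at most one label per (start, end).
    a_by_pos = {(s.start, s.end): s.label for s in a}
    b_by_pos = {(s.start, s.end): s.label for s in b}

    agree, relabeled = [], []
    for pos in sorted(a_by_pos.keys() & b_by_pos.keys()):
        if a_by_pos[pos] == b_by_pos[pos]:
            agree.append(Span(pos[0], pos[1], a_by_pos[pos]))
        else:
            # Same text region, different label: a likely guideline ambiguity.
            relabeled.append((pos, a_by_pos[pos], b_by_pos[pos]))

    only_a = [Span(p[0], p[1], a_by_pos[p])
              for p in sorted(a_by_pos.keys() - b_by_pos.keys())]
    only_b = [Span(p[0], p[1], b_by_pos[p])
              for p in sorted(b_by_pos.keys() - a_by_pos.keys())]

    return {"agree": agree, "relabeled": relabeled,
            "only_a": only_a, "only_b": only_b}


# Two made-up completions for the text "Aspirin 100 mg daily".
annotator_1 = [Span(0, 7, "DRUG"), Span(8, 14, "DOSAGE")]
annotator_2 = [Span(0, 7, "DRUG"), Span(8, 14, "STRENGTH"),
               Span(15, 20, "FREQUENCY")]

result = diff_completions(annotator_1, annotator_2)
for category, items in result.items():
    print(category, items)
```

In this example the relabeled span ("100 mg" tagged as DOSAGE by one annotator and STRENGTH by the other) is the kind of discrepancy that usually points to an ambiguity in the annotation guidelines rather than a simple oversight. The sketch only matches spans with identical boundaries; partially overlapping spans would show up as "only in one completion" and would need extra handling.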

Getting Started is Easy

Generative AI Lab is a text annotation tool that can be deployed in a couple of clicks on either the Amazon or Azure cloud, or installed on-premises with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install