John Snow Labs’ Medical Language Models library is an excellent choice for leveraging the power of large language models (LLMs) and natural language processing (NLP) in Azure Fabric due to its seamless integration, scalability, and state-of-the-art accuracy on medical tasks.

As Azure Fabric is designed to support large-scale data processing and analytics, John Snow Labs enhances it by providing a robust, high-performance LLM & NLP toolkit built on Apache Spark. This combination allows businesses to process vast amounts of text data quickly and efficiently, unlocking advanced insights through tasks like named entity recognition, text summarization, question answering, and document classification.

John Snow Labs’ rich feature set, which includes state-of-the-art pre-trained models for multiple languages and domains, empowers organizations to implement complex text understanding workflows with minimal effort. When combined with the scalable and flexible infrastructure of Azure Fabric, users can seamlessly deploy LLM & NLP models across distributed environments, ensuring high availability and performance. Whether for real-time text analysis or batch processing, this integration provides a powerful solution for businesses aiming to drive data-driven decision-making using cutting-edge language understanding technologies.

John Snow Labs’ Medical LLM is optimized for the following medical language understanding tasks:

- Summarizing clinical encounters – E.g. Summarizing discharge notes, progress notes, radiology reports, pathology reports, and various other medical reports

Example: Summarization Model

- Question answering on clinical notes or biomedical research – E.g. Answering questions about a clinical encounter’s principal diagnosis, tests ordered, or a research abstract’s study design or main outcomes

Example: Question answering Model

- De-identification – E.g. Automatically de-identify structured data, unstructured data, documents, PDF files, and images in compliance with HIPAA, GDPR, or custom needs

Example: Data De-identification Model

- Information Extraction – Detect 400+ types of entities and relationships from free-text clinical notes, including specialty-specific entities like tumor characteristics and radiology findings. These are used in a variety of use cases, including matching patients to clinical guidelines, personalized cancer diagnosis, clinical trial matching, treatment planning, and drug repurposing.

Example: Oncology Model

In a blind evaluation by medical doctors, John Snow Labs’ “Medical LLM – Small” outperformed GPT-4o in medical text summarization, being preferred by doctors 88% more often for factuality, 92% more often for clinical relevance, and 68% more often for conciseness. The model also excelled in question answering on clinical notes, where it was preferred 46% more often for factuality, 50% more often for relevance, and 44% more often for conciseness. In biomedical research question answering, the margin was even larger: 175% more often for factuality, 300% more often for relevance, and 356% more often for conciseness. Notably, despite being smaller than competing models by more than an order of magnitude, the small model performed comparably in open-ended medical question answering tasks.

See here for benchmarks and responsibly developed AI practices.

About John Snow Labs

John Snow Labs, the AI for healthcare company, provides state-of-the-art software, models, and data to help healthcare and life science organizations put AI to good use. John Snow Labs is the developer behind Spark NLP, Healthcare NLP, and Medical LLMs. Its award-winning medical AI software powers the world’s leading pharmaceuticals, academic medical centers, and health technology companies. John Snow Labs’ Medical Language Models library is the most widely used language processing library by practitioners in the healthcare space (Gradient Flow, The NLP Industry Survey 2022 and the Generative AI in Healthcare Survey 2024).

John Snow Labs’ state-of-the-art AI models for clinical and biomedical language understanding include:

- Medical language models, consisting of over 2,400 pre-trained models for analyzing clinical and biomedical text

- Visual language models, focused on understanding visual documents and forms

- Peer-reviewed, state-of-the-art accuracy on a variety of common medical language understanding tasks

- Tested for robustness, fairness, and bias

What is Azure Fabric

Azure Fabric, officially named Microsoft Fabric, is a unified data platform designed to help organizations manage, analyze, and integrate data across various sources and environments. It provides a suite of tools for data engineering, data science, business intelligence, and analytics. Built on top of Azure, it combines elements like data lakes, data warehouses, and real-time analytics, offering a seamless experience for managing data pipelines, performing advanced analytics, and delivering actionable insights. It enables businesses to collaborate more effectively by unifying their data management and analytics processes within a single platform.

Use the Medical LLM – Small model in Azure Fabric

Because John Snow Labs’ Medical Language Models library is a platform-agnostic solution, you can access its LLMs and NLP models in Azure Fabric through the Data Science Studio UI and the notebook Python SDK. In this section, we cover how to run John Snow Labs LLMs successfully on Azure Fabric. Please see here for all models, including LLMs and SLMs, offered by John Snow Labs.

Azure Fabric offers an integrated development environment (IDE) via notebooks, which provides a unified visual interface to all of its purpose-built tools for the end-to-end development lifecycle.

Step-by-Step instructions

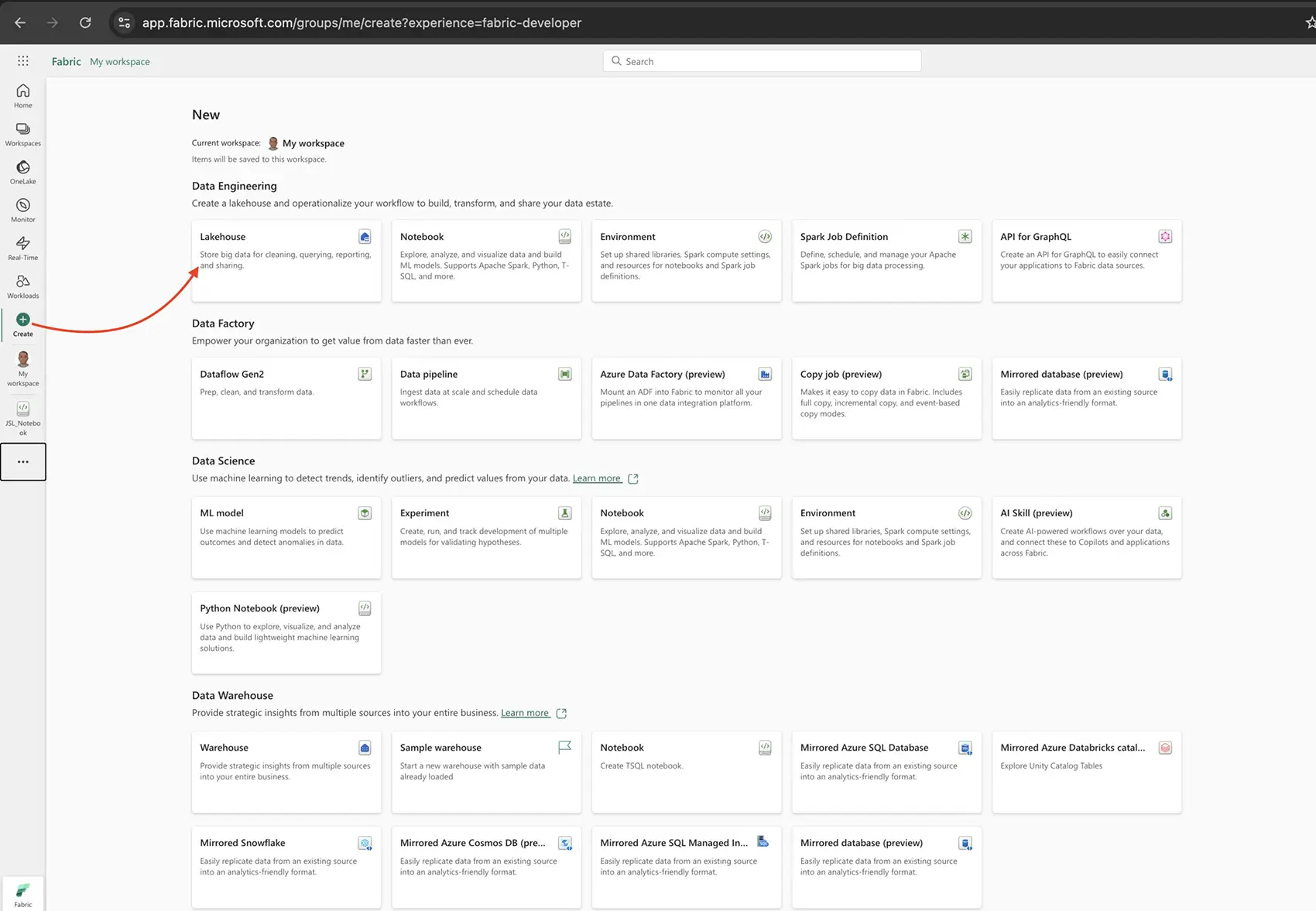

Log into Azure Fabric and Create an Azure Fabric Lakehouse

First, navigate to MS Fabric and sign in using your MS Fabric account credentials. Once you’re logged in, head over to the Microsoft Fabric Data Science section. From there, proceed to the Create section. Finally, create a new lakehouse, and for the sake of this example, let’s name it jsl_workspace.

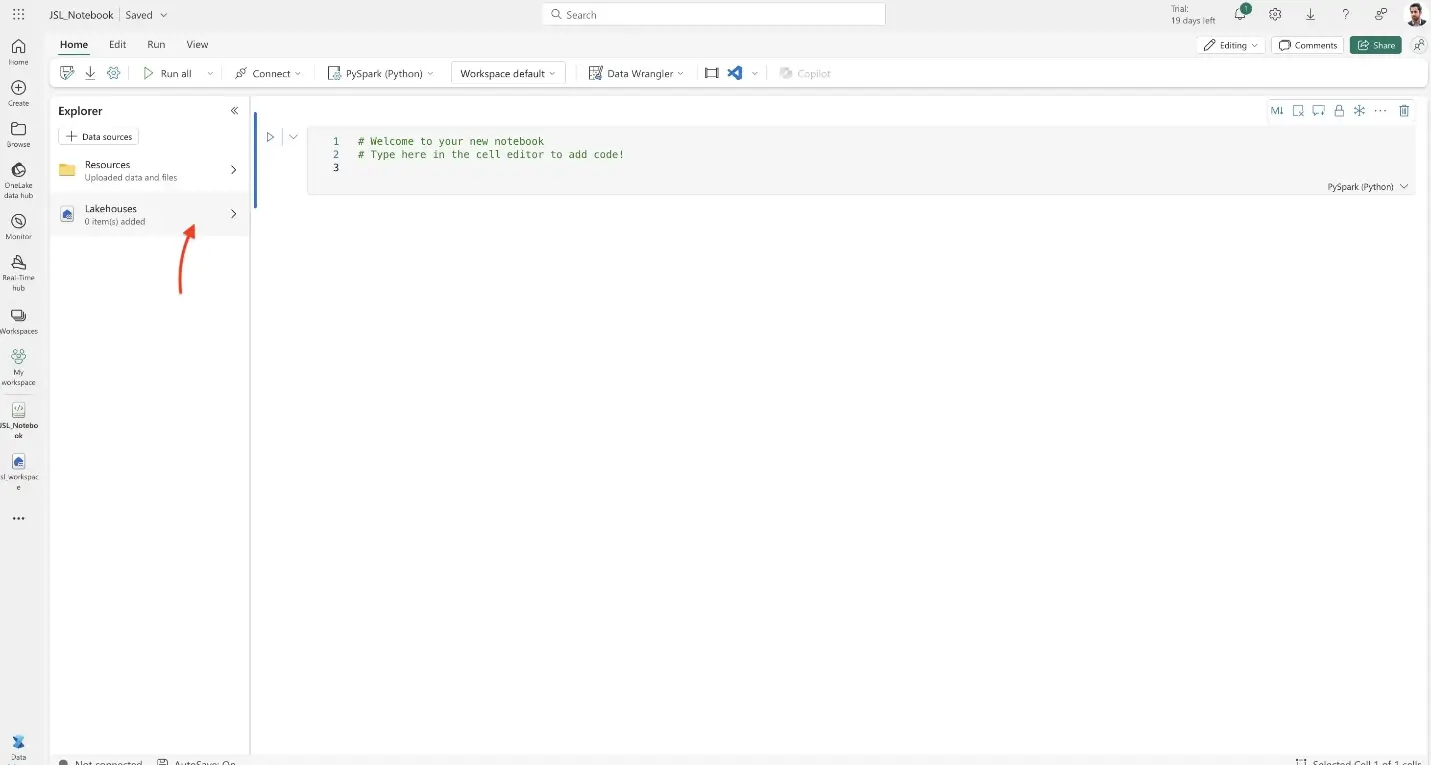

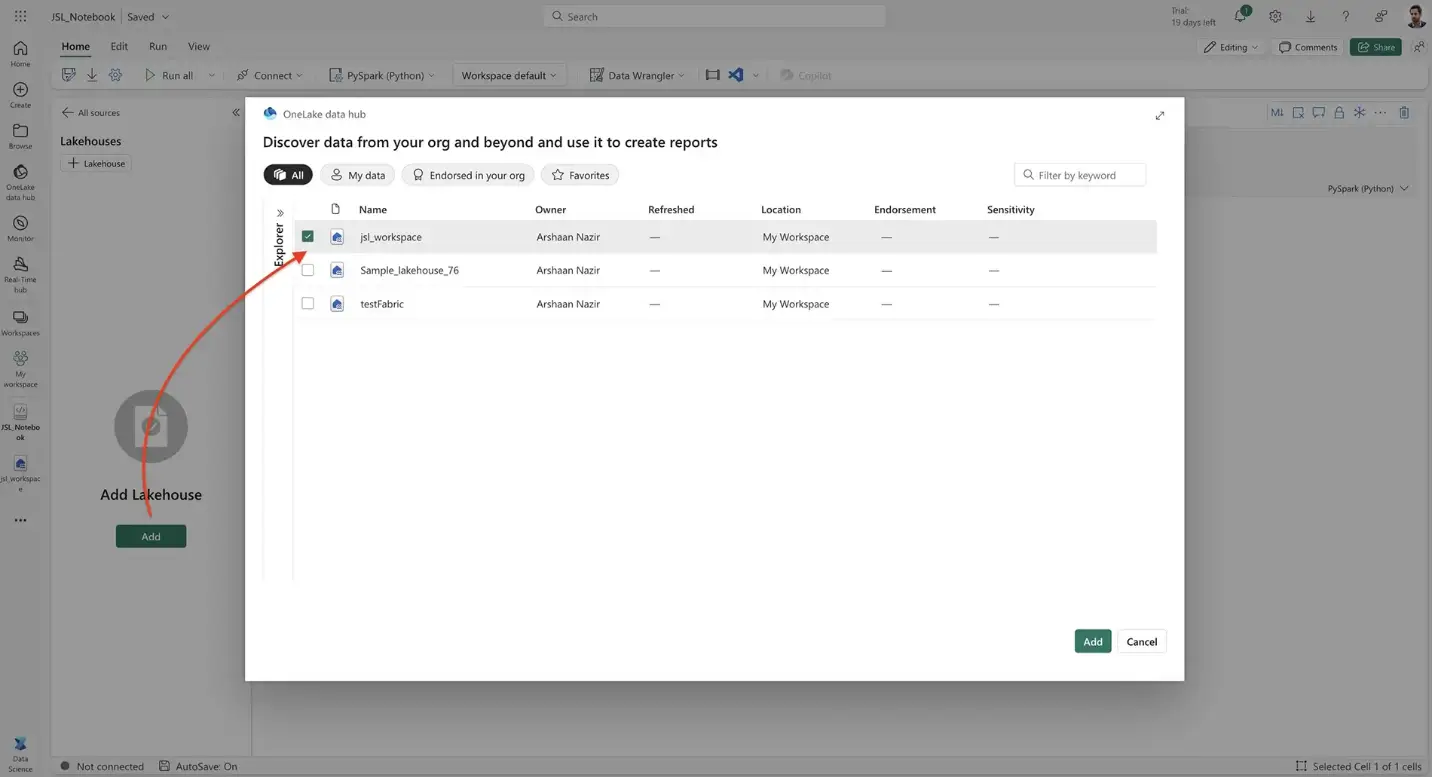

Create a Notebook and Attach the Lakehouse

To start, create a new notebook—let’s call it JSL_Notebook for this example. Once the notebook is created, the next step is to attach the newly created lakehouse, which we’ll refer to as jsl_workspace, to your notebook.

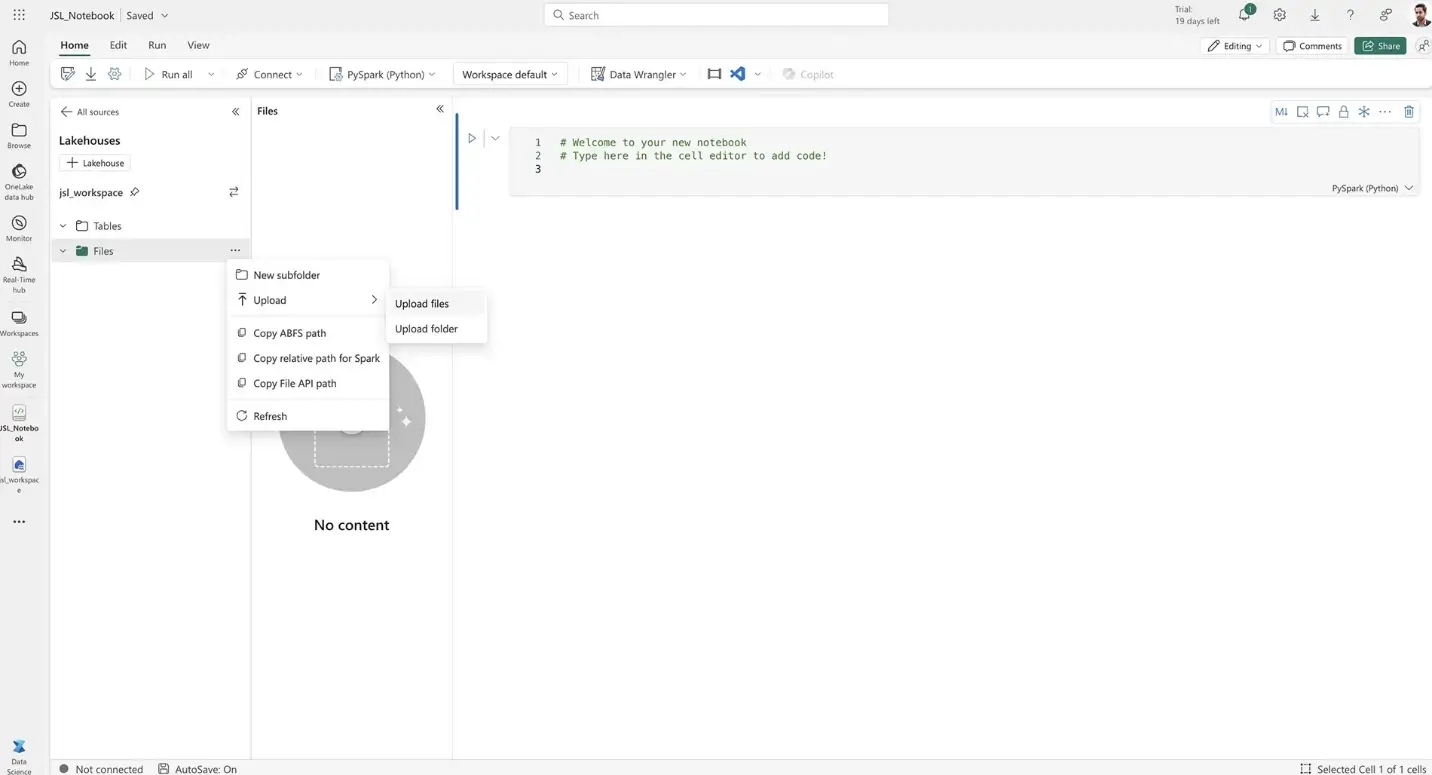

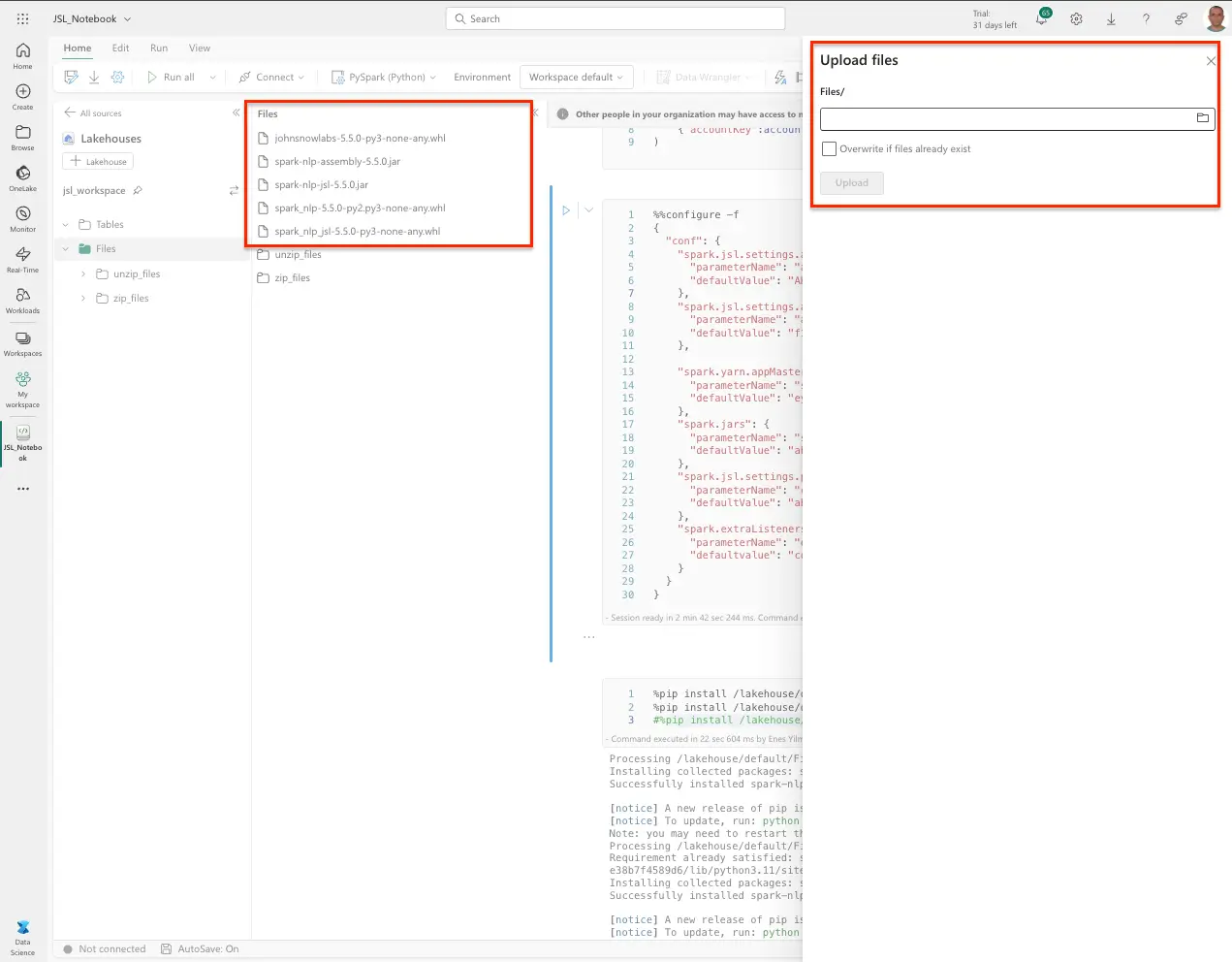

After attaching the lakehouse, upload the necessary .jar and .whl files to the attached lakehouse, ensuring that all required dependencies are available for use within the notebook.

After uploading is complete, you can configure and run the notebook.
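Before configuring the session, it can help to confirm that the uploads actually landed in the lakehouse. The following is a minimal sketch, not part of the John Snow Labs tooling: the helper names `find_artifacts` and `missing_kinds` are hypothetical, and the mount path `/lakehouse/default/Files` is an assumption about where the attached default lakehouse appears inside the notebook.

```python
from pathlib import Path


def find_artifacts(files_dir):
    """Return the .jar and .whl file names found directly under files_dir."""
    root = Path(files_dir)
    return {
        "jars": sorted(p.name for p in root.glob("*.jar")),
        "wheels": sorted(p.name for p in root.glob("*.whl")),
    }


def missing_kinds(artifacts):
    """List the artifact kinds for which no file has been uploaded yet."""
    return [kind for kind, names in artifacts.items() if not names]


# Assumed mount point of the attached lakehouse; adjust if yours differs.
found = find_artifacts("/lakehouse/default/Files")
print("Uploaded artifacts:", found)
print("Still missing:", missing_kinds(found))
```

If `missing_kinds` returns a non-empty list, the corresponding upload has not completed or went to a different folder.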

Configure the Language Model Notebook

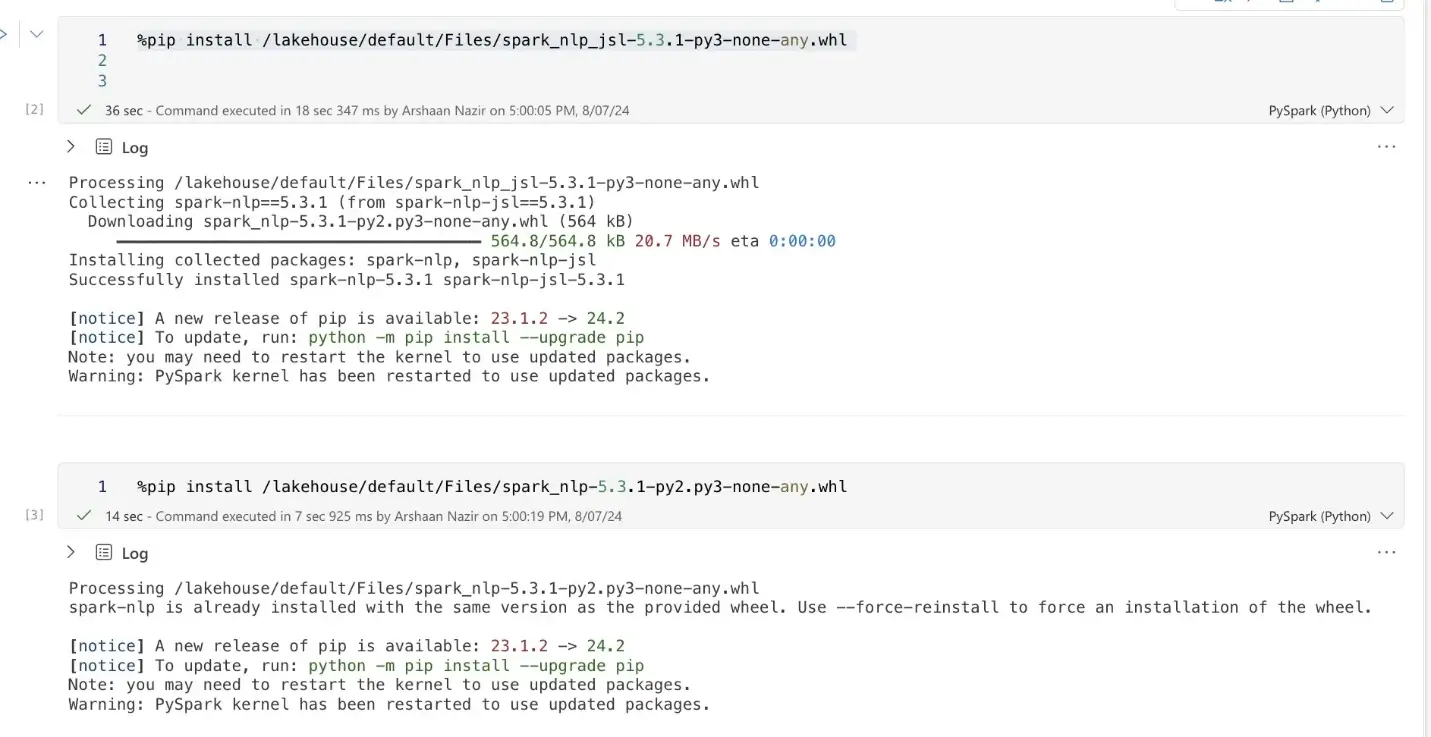

Begin by configuring the session within your notebook. For detailed guidance on this process, refer to the relevant section in our documentation. Next, install the required John Snow Labs libraries using the appropriate pip commands to ensure all necessary packages are available for your project.

Once the libraries are installed, proceed by importing the essential Python and Spark libraries into your notebook. Additional information on these steps can also be found in our documentation.
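As a rough illustration of the session configuration step, the sketch below builds a JSON body that points the Spark session at the uploaded .jar files. It is an assumption-laden example rather than the documented procedure: `build_session_config` is a hypothetical helper, the `abfss://` path uses placeholder workspace and file names, and license or credential settings are deliberately left out because they depend on your John Snow Labs account.

```python
import json


def build_session_config(jar_paths):
    """Return a JSON body for a session-configuration cell.

    jar_paths: lakehouse paths of the uploaded .jar files.
    """
    if not jar_paths:
        raise ValueError("at least one .jar path is required")
    conf = {
        # Standard Spark setting: comma-separated jars added to the session.
        "spark.jars": ",".join(jar_paths),
        # Kryo serialization settings commonly used with Spark NLP.
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.kryoserializer.buffer.max": "2000M",
    }
    return json.dumps({"conf": conf}, indent=2)


# Hypothetical path: the workspace and file names are placeholders.
example_jars = [
    "abfss://<workspace>@onelake.dfs.fabric.microsoft.com/"
    "jsl_workspace.Lakehouse/Files/<library>.jar",
]
print(build_session_config(example_jars))
```

Assuming the notebook supports the `%%configure -f` cell magic, the printed JSON could be pasted under it in the first cell of the notebook, before any Spark code runs.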


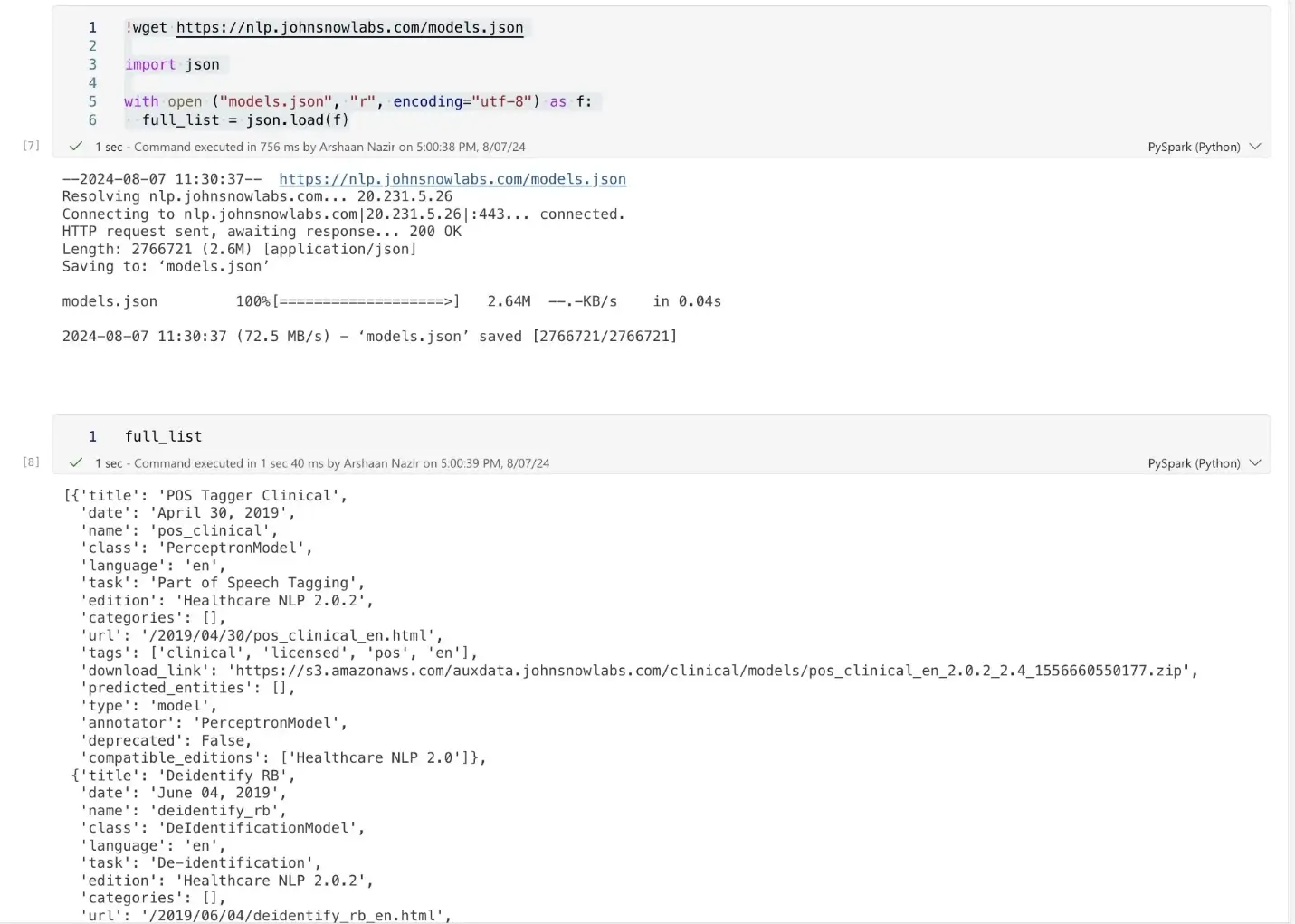

Exploring the available licensed models will help you pair the right model with the specific language task you wish to execute. Start by downloading and reviewing the list of available licensed models to find the best fit for your needs.

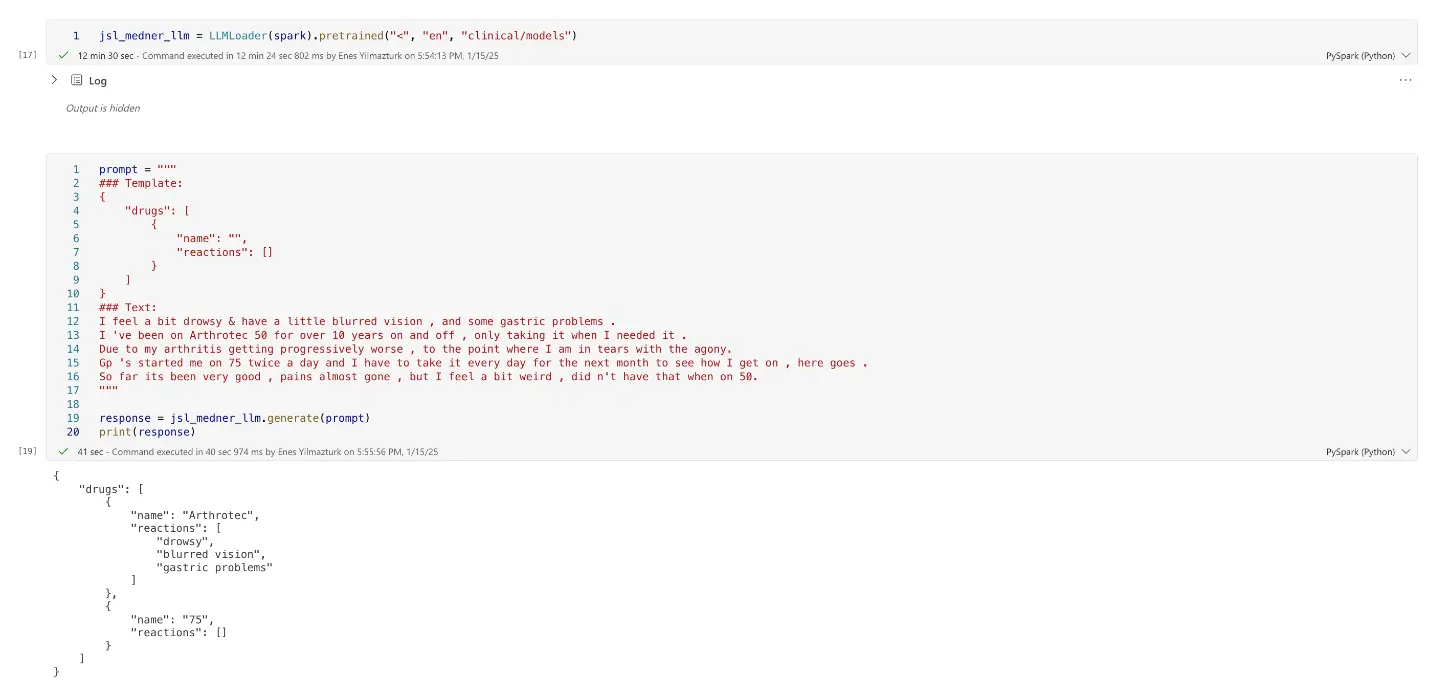

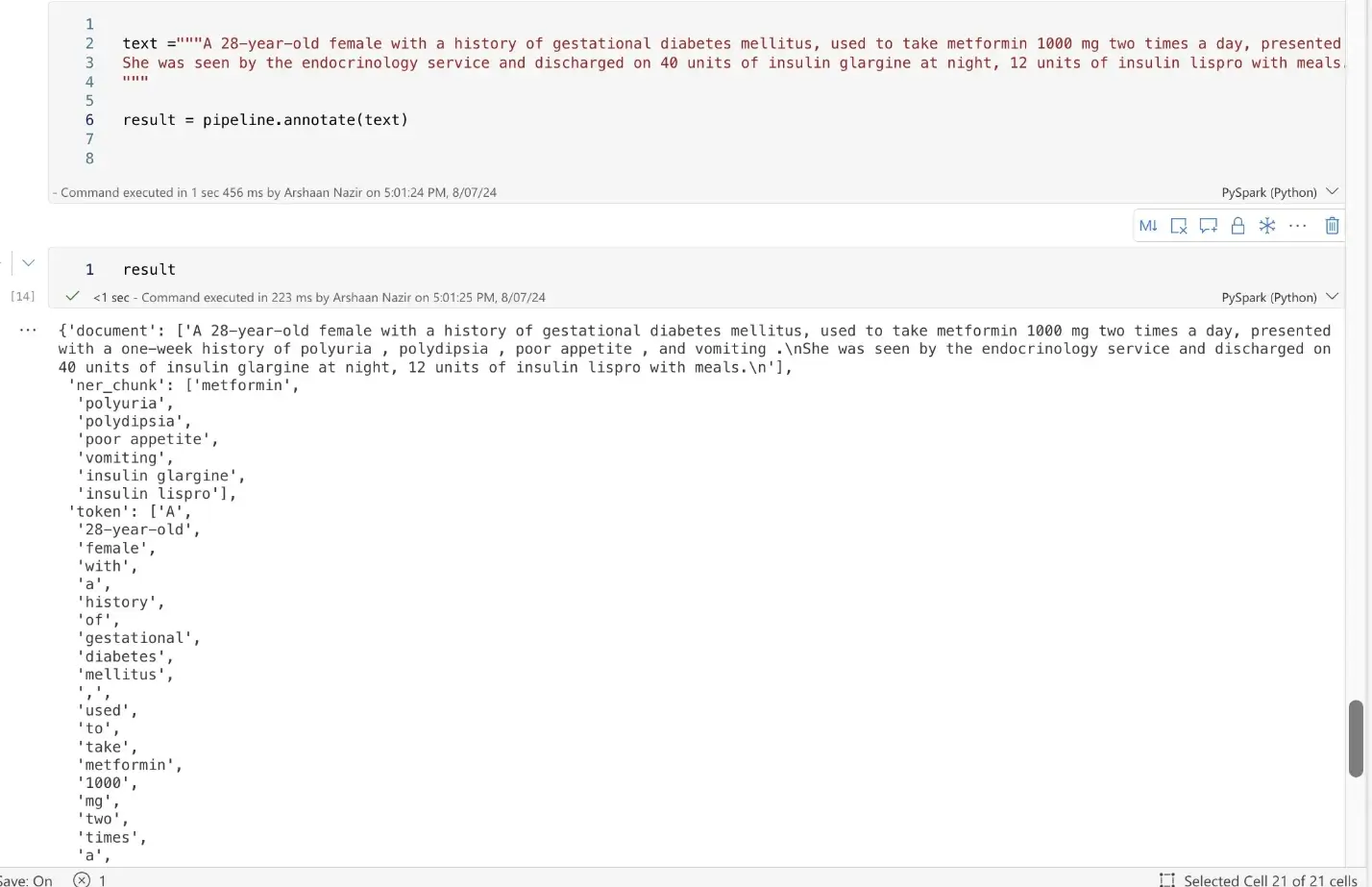

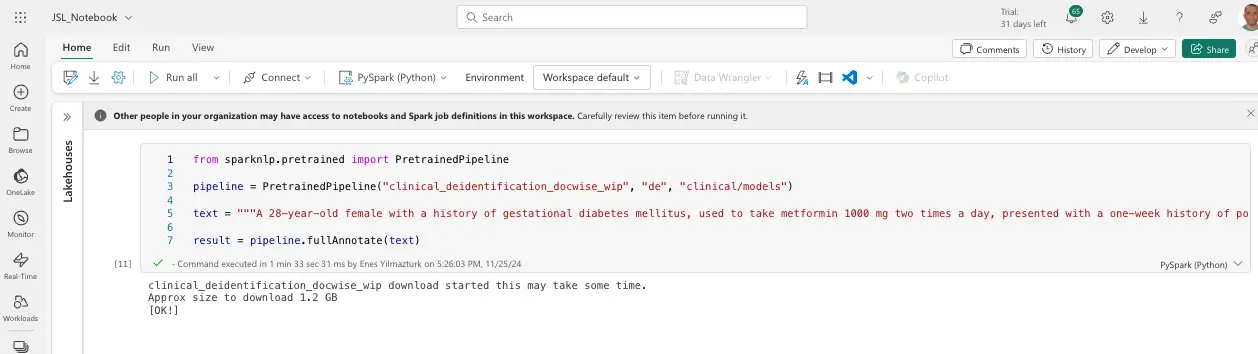

The LLM loader loads and runs LLMs in GGUF format so that they can run within an NLP pipeline and scale horizontally on a Spark cluster without code changes:

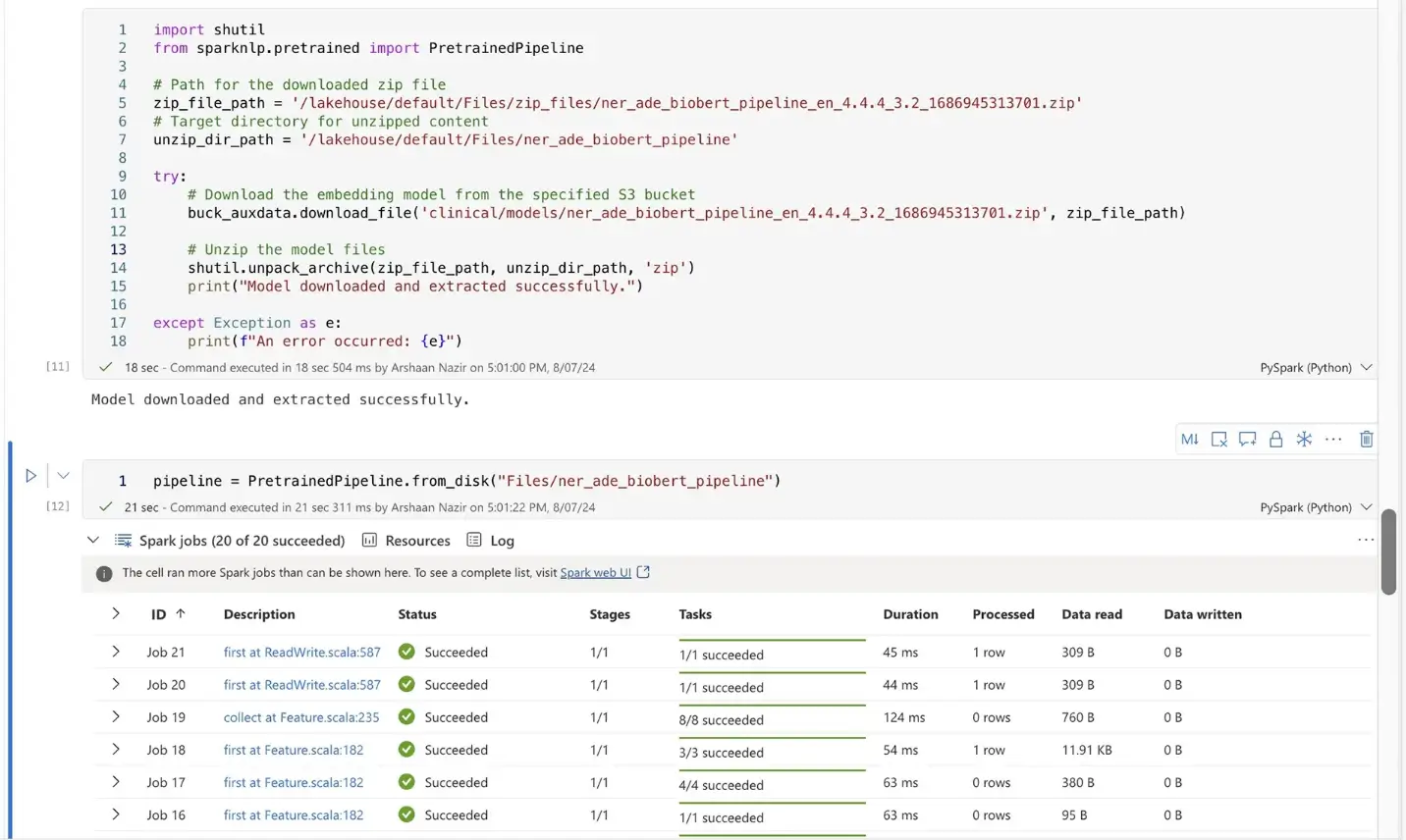

Once you’ve identified the required models, download and extract them, then load the model to perform inference. To handle file operations, set up your credentials and use Boto3 for managing the files. For detailed instructions, refer to our documentation. After setting everything up, load the model and proceed to perform predictions on the desired text.
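The download-and-extract step described above could look roughly like the following sketch. The function names, bucket, key, and credential parameters are all hypothetical placeholders; the actual bucket layout and credentials come from your John Snow Labs license, so treat this only as an outline of the Boto3 and `zipfile` calls involved.

```python
import zipfile
from pathlib import Path


def download_model_archive(bucket, key, local_zip, access_key, secret_key):
    """Download one model archive from S3 to local_zip and return its path."""
    # Imported lazily so extract_model can be used without boto3 installed.
    import boto3

    client = boto3.client(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
    client.download_file(bucket, key, str(local_zip))
    return Path(local_zip)


def extract_model(local_zip, target_dir):
    """Unpack the archive into target_dir/<archive name> and return that folder."""
    local_zip = Path(local_zip)
    model_dir = Path(target_dir) / local_zip.stem
    model_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(local_zip) as archive:
        archive.extractall(model_dir)
    return model_dir
```

Extracting each archive into a folder named after it keeps several models side by side under one directory, and the returned folder is the path you would then hand to the model-loading call.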


See below the proper job execution of the JSL pipeline in Azure Fabric. The NER_chunks and token outputs can be displayed directly and then used for further analysis.
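As one example of such further analysis, the sketch below tallies the extracted chunks. It assumes the pipeline output has been collected into a plain dictionary that maps output column names to lists of strings (the shape that Spark NLP's `LightPipeline.annotate` returns); the column names `ner_chunk` and `token`, the helper names, and the sample values are illustrative assumptions, not output from a real run.

```python
from collections import Counter


def chunk_frequencies(annotated, column="ner_chunk"):
    """Count how often each extracted chunk occurs, ignoring case."""
    chunks = annotated.get(column, [])
    return Counter(chunk.lower() for chunk in chunks)


def token_count(annotated, column="token"):
    """Return the number of tokens produced for the document."""
    return len(annotated.get(column, []))


# Illustrative input only; a real run would supply the pipeline's own output.
sample = {
    "token": ["Patient", "reports", "chest", "pain", "and", "fever", "."],
    "ner_chunk": ["chest pain", "fever"],
}
print(chunk_frequencies(sample).most_common())
print("tokens:", token_count(sample))
```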

Run the pipeline Inference in Azure Fabric

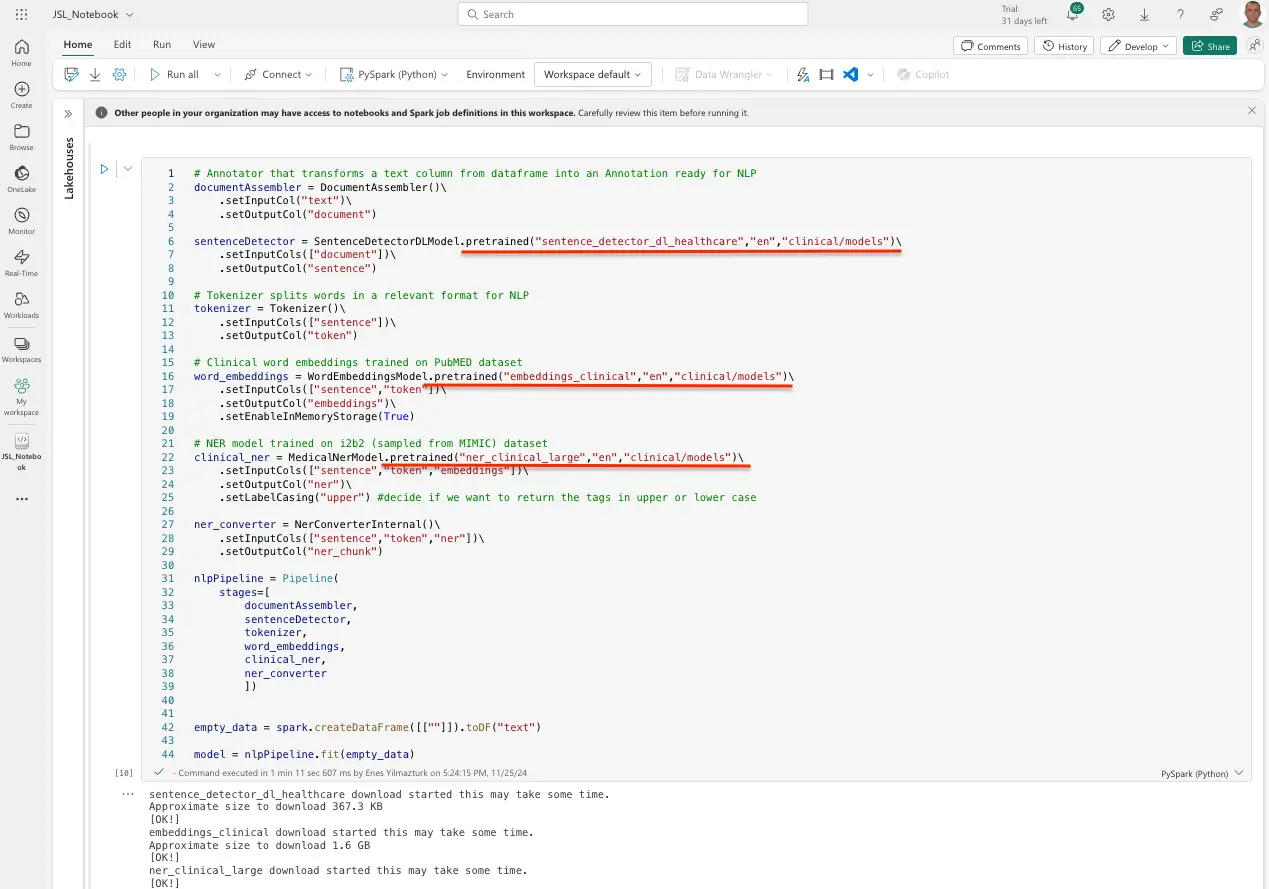

In this case, we run inference using the .pretrained() method, so the pipeline runs without needing the .load() or .from_disk() methods. This simplifies the process while still achieving the desired results.

Integrating John Snow Labs’ Medical Large Language Models (LLMs) with Azure Fabric offers a powerful solution for healthcare organizations looking to harness advanced AI to enhance clinical decision-making, automate workflows, and improve patient outcomes. By combining John Snow Labs’ cutting-edge natural language processing capabilities with Azure’s scalable infrastructure, medical professionals can gain deeper insights from unstructured data, enabling more efficient and accurate care delivery. Whether it’s extracting actionable insights from medical records, assisting in research, or supporting complex diagnostic processes, this partnership provides a robust framework for advancing healthcare with AI. As the healthcare landscape continues to evolve, leveraging such innovative technologies will be essential for staying ahead of the curve and delivering the best possible care to patients.

Conclusion

In this blog post, we showcased how to get started with the first healthcare-specific models using Azure Fabric. Please see here for our documentation and detailed how-to. To learn more, refer to the following resources:

- All Azure documentation

- Installing John Snow Labs NLP Libraries via Azure Marketplace: A Step-by-Step Tutorial