In this blog post, we spotlight the potential of smaller, specialized language models within a Retrieval-Augmented Generation (RAG) framework — a space where large LLMs like GPT-4o are commonly used. By examining JSL’s purpose-built models, including jsl_med_rag_v1, jsl_meds_rag_q8_v1, jsl_meds_q8_v3, and jsl_medm_q8_v2 we demonstrate that even an 8-billion parameter model, when fine-tuned for clinical use, can deliver performance comparable to larger, general-purpose LLMs. Our results indicate that, for specialized healthcare tasks like answering clinical questions or summarizing medical research, these smaller models offer both efficiency and high relevance, positioning them as an effective alternative to larger counterparts within a RAG setup.

The field of healthcare AI has been evolving rapidly, with Large Language Models (LLMs) playing a pivotal role in the development of cutting-edge medical applications. A significant advancement in this space is the emergence of Healthcare-Specific LLMs, particularly those built for Retrieval-Augmented Generation (RAG).

Healthcare NLP with John Snow Labs

The Healthcare NLP Library, part of John Snow Labs’ Library, is a comprehensive toolset designed for medical data processing. It includes over 2,400 pre-trained models and pipelines for tasks like clinical information extraction, named entity recognition (NER), and text analysis from unstructured sources such as electronic health records and clinical notes. The library is regularly updated and built on advanced algorithms to support healthcare professionals. John Snow Labs also offers a GitHub repository with open-source resources, certification training, and a demo page for interactive exploration of the library’s capabilities.

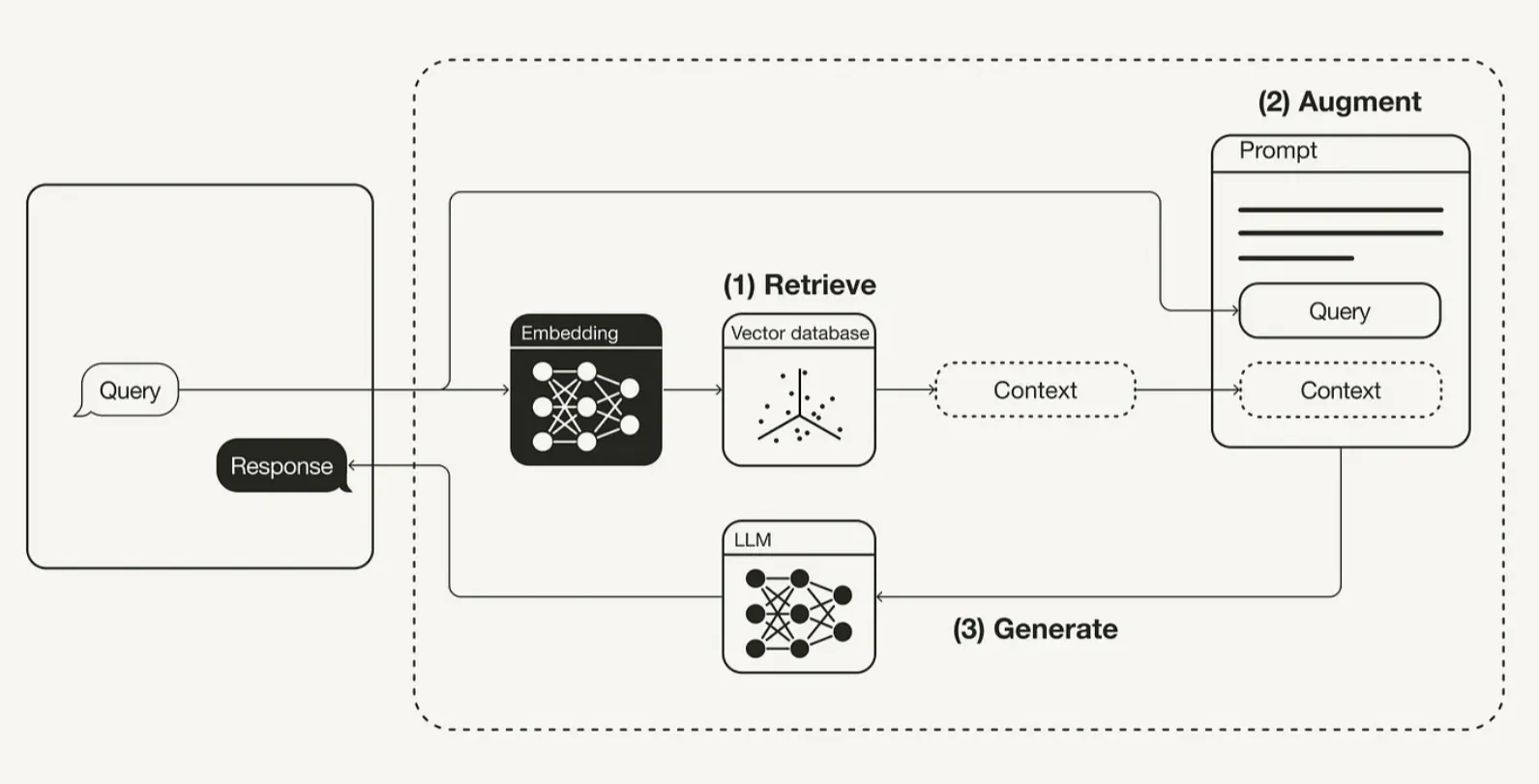

What is Retrieval-Augmented Generation?

RAG is a hybrid framework combining two major processes: information retrieval and text generation. In healthcare, this can be especially useful for tasks such as medical question-answering (Q&A), summarization of clinical data, and conversational interfaces for patients or doctors. Rather than relying on the model’s built-in knowledge alone, RAG systems first retrieve relevant external information, ensuring the answers generated are grounded in up-to-date, reliable sources. This is particularly crucial in medical contexts, where accuracy, specificity, and trustworthiness of information can significantly impact decision-making.

Retrieval-Augmented Generation Workflow by towardsdatascience

Creating The Vector Database With FAISS

FAISS, or Facebook AI Similarity Search, is a high-performance library created by Facebook AI Research to enable efficient, large-scale similarity searches. It is optimized to identify similar items (such as documents or images) within extensive datasets by utilizing vector representations, making it ideal for tasks involving deep learning embeddings. FAISS is recognized for its remarkable speed and scalability, offering support for both CPU and GPU computations, which enhances its versatility for handling large datasets effectively.

Implementation

To set up a Retrieval-Augmented Generation (RAG) pipeline with a large language model (LLM), first a relevant dataset is selected — in this case, a diabetes dataset from PubMed. This dataset transformed into a form that supports efficient information retrieval. Using John Snow Labs’ tools, the dataset divided into manageable text chunks, each carrying focused content. Each chunk is then converted into a meaningful vector representation through embedding, allowing the language model to grasp complex contextual cues from the data.

Next, a FAISS (Facebook AI Similarity Search) database created to store these vectors, offering a highly efficient way to search and retrieve relevant information based on query similarity. The FAISS retriever pulls up the top-matching chunks from the database in response to a given question. These retrieved pieces are then accumulated into a single text string, forming a context-rich prompt, which the original question is appended to for clarity.

Then, the prompt is returned as a complete string, which is used by the language model to generate contextually accurate responses. This approach, which combines question-specific context retrieval with the language model’s generation abilities, leverages FAISS for efficient and relevant context gathering and enhances the language model’s response relevance and accuracy, especially for questions dependent on detailed contextual information.

The prompt is fed into the LLM. The RAG framework processes this data to create a response that combines both the general knowledge embedded within the model and the specific context provided by the retriever. This setup empowers the language model to generate precise, insightful answers tailored to the questions and dataset.

LLM Models and Evaluation

In the medical AI landscape, several specialized models have emerged to cater to healthcare professionals and researchers. Among these, John Snow Labs offers models such as jsl_med_rag_v1, jsl_meds_rag_q8_v1, jsl_meds_q8_v3, and jsl_medm_q8_v2 stand out as healthcare-focused models. These models are tailored specifically for handling medical inquiries with high precision and depth, setting them apart from general-purpose models like GPT-4o model of OpenAI and LongCite-llama3.1–8b (developed by Tsinghua University’s Department of Computer Science and Technology (THUDM)), which often lacks the specialized medical knowledge essential in healthcare settings. They offer various quantization levels (q4, q8, q16) that let users fine-tune the balance between model performance and resource efficiency, making them adaptable to diverse healthcare tasks and requirements.

To evaluate the suitability of JSL large language models (LLMs) for healthcare applications, these models were tested with seven medically relevant questions, focusing primarily on diabetes. The example below highlights a question alongside the corresponding answers generated by the models, illustrating why JSL’s specialized LLMs may be a better fit for healthcare use cases.

question = "What is the recommended total daily dose of hydrocortisone for adults with adrenal insufficiency?" rag_prompt = process_question(question, db, top_k=5) print(rag_prompt)

""" ### Context: Plasma ACTH did not increase with insulin loading. A low plasma vasopressin (AVP) level and no response of AVP to a 5% saline administration were observed. We diagnosed central adrenal insufficiency with central diabetes insipidus. Six months after starting administration of hydrocortisone and 1-deamino-8D-arginine vasopressin, his psychological symptoms had improved, and 1.5 years after starting treatment, he was able to walk. In conclusion, it is not particularly rare for adrenal signs. Adrenocorticotropic hormone deficiency replacement is best performed with the immediate-release oral glucocorticoid hydrocortisone (HC) in 2–3 divided doses. However, novel once-daily modified-release HC targets a more physiological exposure of glucocorticoids. GHD is treated currently with daily subcutaneous GH, but current research is focusing on the development of once-weekly administration of recombinant GH. Hypogonadism is targeted with testosterone replacement in men and on A 36-day-old male infant who presented with severe dehydration was admitted to the intensive care unit. His laboratory findings showed hyponatremia, hyperkalemia, hypoglycemia, and metabolic acidosis. After hormonal evaluation, he was diagnosed with adrenal insufficiency, and he recovered after treatment with hydrocortisone and a mineralocorticoid. He continued to take hydrocortisone and the mineralocorticoid after discharge. At the age of 17, he did not show any signs of puberty. On the basis bicarbonate was 23.7 mmol/L. The diagnoses of HHS and hypovolemic shock were made. During treatment with fluid replacement and insulin therapy, the urine volume continued to be approximately 3 to 4 L/day, and an endocrine examination revealed ADH insufficiency and nephrogenic diabetes insipidus. Desmopressin 10 μg/day and trichlormethiazide 2 mg/day were necessary and administered, and the endogenous ADH secretion improved gradually. The signal intensity of the pituitary posterior lobe, dexamethasone (8 mg) was administered followed by hydrocortisone, thyroxine and topical testosterone replacement. Two weeks post administration of the third cycle, he became unwell with lethargy, weight loss and nocturia. Central diabetes insipidus was diagnosed on the basis of symptoms and sodium of 149 mmol/L (135–145 mmol/L). Desmopressin nasal spray was instituted with symptom resolution and normalization of serum sodium. Three weeks later, he presented again polyuric and polydipsic. """

Each model delivered varied performance based on its capacity to provide clear, concise answers with sufficient medical context. The answers to certain questions serve as a critical benchmark, functioning as the definitive litmus test for evaluating model performance.

jsl_meds_rag_q8_v1_response = "The recommended total daily dose of hydrocortisone for adults with adrenal insufficiency is 20-30 mg divided into 2-3 doses. The recommended total daily dose of hydrocortisone for adults with adrenal insufficiency is 20-30 mg divided into 2-3 doses."

jsl_meds_rag_q8_v1 provides detailed, concise answers but tends to repeat sentences. Model delivered direct answers, and provided a detailed response despite the lack of information from other models. It excels in giving long and clear answers, except for its tendency to be repetitive.

jsl_meds_q8_v3_response = "The recommended total daily dose of hydrocortisone for adults with adrenal insufficiency is 2–3 divided doses."

jsl_meds_q8_v3 is effective in conveying necessary information. The model provided detailed answers even with limited context. However, in some questions, its response was only partial, highlighting a need for improvement with complex queries.

jsl_medm_q8_v2_response = "I don't know."

jsl_medm_q8_v2 mirrors GPT-4o’s performance, samewise responding with “I don’t know” for certain questions. It provides straightforward and brief responses, which are suitable for scenarios demanding clarity without much detail.

jsl_med_rag_v1_response = "Based on the case study, the recommended total daily dose of hydrocortisone for adults with adrenal insufficiency is not specified. The case study focuses on a 36-day-old male infant who was treated with hydrocortisone for adrenal insufficiency. However, it does not provide details on the dose used in adults with adrenal insufficiency. The American Association of Clinical Endocrinologists (AACE) recommends a total daily dose of hydrocortisone in the range of 15-30 mg for adults with adrenal insufficiency. However, the dose may need to be adjusted based on clinical response and serum cortisol levels. In summary, while the case study does not provide specific details on the recommended total daily dose of hydrocortisone for adults with adrenal insufficiency, the AACE guidelines suggest a total daily dose in the range of 15-30 mg. The dose should be individualized based on clinical response and cortisol levels."

jsl_med_rag_v1 provides detailed, concise answers. In certain questions , it gave the clearest and most explanatory answers, offering a detailed explanation about the context not having information. Its answers are both concise and clear, making it a reliable model for comprehensive explanations.

gpt-4o_response = "I don't know."

GPT-4o gives concise answers in line with the prompt instruction, stating “I don’t know.” for certain questions. It provides straightforward and brief responses, which are suitable for scenarios demanding clarity without much detail.

LongCite-llama3.1–8b_response = "Based on the information provided, the recommended total daily dose of hydrocortisone for adults with adrenal insufficiency is not explicitly stated. However, some context is provided: Adrenocorticotropic hormone (ACTH) did not increase with insulin loading, indicating adrenal insufficiency. The patient was treated with hydrocortisone and a mineralocorticoid and recovered. At age 17, the patient did not show signs of puberty, indicating ongoing adrenal insufficiency. The patient was later diagnosed with central diabetes insipidus and treated with desmopressin. He continued to take hydrocortisone and a mineralocorticoid after discharge. So while the exact daily dose of hydrocortisone is not given, it is clear that the patient with adrenal insufficiency required ongoing treatment with hydrocortisone and a mineralocorticoid. The dosage and formulation (immediate-release vs modified-release) would depend on the individual patient's needs and response to treatment."

LongCite-llama3.1–8b model delivers detailed answers that balance clarity and depth. For certain queries, it provides highly explanatory responses, even addressing cases where contextual information is limited.

- Comprehensiveness and Clarity in Responses

JSL Medical LLMs, like the jsl_med_rag_v1, stand out for their ability to generate responses that are both detailed and comprehensive. This model gives clear and detailed answers, which is critical in healthcare where ambiguity can lead to misunderstandings. For instance, when faced with a challenging question, jsl_med_rag_v1 gave the clearest and most comprehensive explanation. It went beyond just saying “no information” and offered a detailed understanding of why certain data might be unavailable.

The LongCite-llama3.1–8b model provides detailed and comprehensive responses, while GPT-4o tends to offer clear and concise answers. However, GPT-4o occasionally does not provide answers for certain questions.

- Handling Complex Queries

In the comparison, jsl_med_rag_v1 outperformed other models by providing context-aware, in-depth responses. It managed to give a clear and informative answer when faced with complex medical questions, where GPT-4o and other models fell short by providing incomplete or overly simplistic responses. This makes the jsl_med_rag_v1 model more reliable for healthcare professionals who need detailed information on topics such as drug interactions, diagnosis, or treatment recommendations.

Similarly, jsl_meds_rag_q8_v1 and LongCite-llama3.1–8b provided lengthy and detailed answers, even when information was limited. However, jsl_meds_rag_q8_v1 model’s tendency to repeat itself could be seen as a minor drawback. Still, when it comes to providing essential information, it outperforms general models like GPT-4o, which may resort to “I don’t know” for more complex or context-limited questions.

- Specialized Medical Knowledge

JSL medical models are designed specifically for healthcare use, which means they have a more extensive and precise understanding of medical terminologies, protocols, and patient care scenarios compared to general-purpose models. For instance, jsl_meds_q8_v3 effectively handles short answers but still conveys critical medical information. It manages to extract relevant data even from limited context, which is crucial for scenarios like emergency diagnoses or incomplete patient data.

In contrast, jsl_medm_q8_v2 closely mirrors GPT-4o, often defaulting to “I don’t know” when the information is unclear or unavailable. While this might be acceptable for general queries, it’s a disadvantage in medical contexts where professionals need as much information as possible to make decisions.

- Consistency with Detailed Responses

Where GPT-4o shines in brevity and directness, JSL models like jsl_med_rag_v1 balance the need for both clarity and thoroughness. GPT-4o’s tendency to simplify its responses, especially under instructions not to explain in detail, can sometimes leave healthcare professionals wanting more context or a more in-depth answer. In certain querries , GPT-4o provided only brief statements like “no information in context,” which may be insufficient for medical applications where the full picture is required.

On the other hand, the JSL models offer answers that are not only comprehensive but also targeted to the specific medical need, providing healthcare professionals with actionable insights rather than just minimal information.

For additional information, please see the following references:

- Comparison notebook: Comparison of Medical and Large LLMs in RAG

- Pretrained models: Loading Medical and Open-Souce LLMs

- Various RAG Implementations with JohnSnowLabs: generative-ai

Healthcare models are licensed, so if you want to use these models, you can watch “Get a Free License For John Snow Labs NLP Libraries” video and request one from the John Snow Labs web page.

Conclusion:

In medical fields where accuracy, clarity, and depth are critical, specialized models like jsl_med_rag_v1 tend to outperform general-purpose models like GPT-4o. Moreover, other models that were tested achieved results that were either superior to or comparable with those of GPT-4o. This indicates that by utilizing smaller language models in conjunction with Retrieval-Augmented Generation (RAG), it is feasible to attain performance levels similar to GPT-4o while utilizing fewer resources. Consequently, this approach can yield outcomes that closely mirror those produced by GPT-4o, demonstrating the potential for efficient and effective use of smaller models in medical applications.

JSL LLM models ability to provide long, detailed, and context-aware answers makes them invaluable for healthcare professionals. Whether it’s tackling complex medical queries, offering in-depth explanations, or delivering concise yet complete responses, these models are tailored to meet the unique needs of the healthcare industry. As AI continues to evolve, models like JSL will play a critical role in improving patient care, diagnostics, and medical research, offering an edge over more general-purpose systems like GPT-4o.