Natural Language Processing (NLP) is a driving force behind the growth of emerging technologies such as text classification, chatbots, sentiment analysis, and speech recognition. Owing to growing interest in human-to-machine communication, the technology around NLP is advancing rapidly. The availability of big data complements powerful NLP models. In addition, supervised and unsupervised NLP research brings artificial intelligence (AI) and deep learning into analytics-ready data pipelines for greater gains.

The constant improvements in NLP research have brought a paradigm shift in how AI algorithms learn. In fintech, NLP improves analytics across the financial field. Significant breakthroughs in healthcare AI, for instance, will center on image classification and filter into computer vision systems for medical image analysis. However, to realize the full potential that AI, NLP, and machine learning (ML) offer, businesses need to be able to trust NLP applications that go beyond research and become reliable real-world systems.

Putting Business Before Technology: Data First Products

To catapult NLP from the research stage to the production stage, enterprises need to shift their focus to data-first products. They need to formulate a business problem, starting with an understanding of their data at a fundamental level so they can articulate its context. The next step is to bring together domain experts and people with diverse experience to collaborate on data exploration, model creation, model deployment, and evaluation.

Building an NLP model starts with a well-defined business goal tied to the model’s output and follows through to model deployment. Thereafter, model performance is monitored using live data pipelines. Overcoming biases that endanger the model’s outcomes is another challenge that needs an answer. For instance, AI systems may develop discriminatory biases in their output when trained on patient datasets that misrepresent or under-represent features of an entire population. The ultimate success of an NLP model in production thus depends significantly on the data used to build it. In addition, these models are often adapted as pre-trained models for other NLP tasks. How well they perform on these downstream tasks forms a supplementary benchmark for the successful transition of an NLP model from research to application.
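As a rough illustration of the under-representation problem described above, the sketch below compares each group's share of a training set with its share of a reference population. The function name `representation_gaps`, the `age_band` attribute, the sample counts, and the 5-point tolerance are all assumptions made for this example, not part of any particular library or dataset.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose share of `records` falls short of the
    reference share by more than `tolerance` (all names hypothetical)."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = {"expected": expected, "observed": round(observed, 3)}
    return gaps


# Made-up training records and population shares, for illustration only.
training_records = (
    [{"age_band": "18-39"}] * 55
    + [{"age_band": "40-64"}] * 38
    + [{"age_band": "65+"}] * 7
)
population_shares = {"18-39": 0.35, "40-64": 0.40, "65+": 0.25}

under_represented = representation_gaps(
    training_records, "age_band", population_shares
)
print(under_represented)
```

With these made-up numbers, only the "65+" group is flagged, because it makes up 7% of the training data against 25% of the reference population. A real audit would look at several attributes and their combinations, not a single field.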

Understanding Jargon & Context in Healthcare

In recent years, NLP has been adopted in a wide range of applications to improve outcomes, optimize costs, and deliver a better quality of care across business functions. The application of NLP in the healthcare domain has grown to offer more customized and patient-centric solutions. Text analytics and NLP systems add value by automating administrative workflows, streamlining information governance, improving data management, and managing regulatory compliance using real-time data.

Healthcare organizations such as healthcare providers, pharmaceutical companies, and biotechnology firms leverage NLP to improve patient health literacy, guide patients through medical jargon so they can make informed medical decisions, and help them keep their health on track. NLP is applied in clinical trials, drug discovery, and patient admission and discharge to enhance human-to-machine interaction for search-oriented conversations and to map clinical concepts and diagnoses to clinical guidelines. NLP models improve the readability of electronic health record (EHR) data with tools like voice recognition, which offer a viable solution to EHR distress. Many clinicians already use NLP-enabled voice recognition as an alternative to tediously typing or handwriting clinical notes.

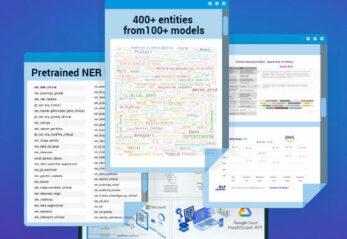

Over the years, enterprises have shifted their focus to text processing libraries that implement the most recent advances in deep learning & transfer learning. These libraries offer production-grade, scalable, and trainable versions of the latest academic research findings in NLP. John Snow Labs’ Spark NLP for Healthcare – the most widely used NLP library in the enterprise, and by a larger margin in healthcare – is one such library that holds the top spot in 8 of 11 accuracy leaderboards for medical named entity recognition on the Papers With Code website, which tracks peer-reviewed papers relating to each benchmark.

Spark NLP for Healthcare, which builds on the free & open-source Spark NLP library, provides:

- Clinical Named Entity Recognition (NER) to extract named entities – like symptoms, drugs, vital signs, and procedures – from clinical narratives.

- Assertion status detection that assigns a specific assertion category (e.g., present, absent, and possible) to each medical concept mentioned in a clinical report.

- Entity resolution to map a term to standard medical terminologies. This helps clean synonyms and jargon to standard terms – for example, knowing that “shortness of breath”, “SOB”, “short of breath”, and “dyspnea” are the same thing.

- NLP de-identification to separate Personally Identifiable Information (PII) from Protected Health Information (PHI), so that healthcare research analyses can be conducted on statistically de-identified health information without privacy & compliance violations.

- Optical Character Recognition (OCR) to digitize scanned healthcare documents and medical images so that all the above capabilities can be used on them to extract facts.
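To make the entity resolution idea from the list above more concrete, here is a deliberately simple sketch that maps surface forms to one standard term with a lookup table. It is not the Spark NLP for Healthcare API: the `SYNONYMS` table, the `resolve_entities` function, and the sample note are hypothetical. Production systems use trained models and full medical terminologies, not a hand-written dictionary.

```python
import re

# Hypothetical synonym table: surface form (lowercase) -> standard term.
SYNONYMS = {
    "shortness of breath": "dyspnea",
    "short of breath": "dyspnea",
    "sob": "dyspnea",
    "dyspnea": "dyspnea",
}


def resolve_entities(text, synonyms=SYNONYMS):
    """Return (matched_text, standard_term) pairs in order of appearance."""
    # Try longer phrases first so a long phrase wins over a shorter overlap.
    phrases = sorted(synonyms, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(p) for p in phrases) + r")\b",
        re.IGNORECASE,
    )
    return [(m.group(0), synonyms[m.group(0).lower()]) for m in pattern.finditer(text)]


note = "Patient reports SOB on exertion; was short of breath last week. No dyspnea at rest."
resolved = resolve_entities(note)
for surface, standard in resolved:
    print(f"{surface!r} -> {standard}")
```

All three mentions resolve to "dyspnea". Note that the last mention is negated ("No dyspnea at rest"). This lookup cannot tell the difference, which is the gap that assertion status detection is meant to fill.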

Ensuring Data & Model Integrity Over Time

NLP holds immense potential for meeting real-life application requirements that range from speech recognition and speech translation to writing complete, grammatically correct sentences. It is tough to develop a brand identity if businesses don’t know how their customers feel about the brand. NLP-based sentiment analysis extracts customer views and reviews and converts them into insightful data that brands can use to develop executable strategies. Chatbots are increasingly used in sales to target prospects, strike up a conversation, schedule appointments, and close a sale. NLP helps financial institutions evaluate the creditworthiness of customers who have practically no financial records. NLP applies to financial trading as well. Intent recognition and spoken language understanding identify user intent (for example “purchase”, “sell”, and so forth), which helps brokers and dealers choose what to trade and in what quantity.

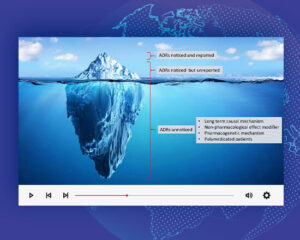

Seamless adoption of NLP across enterprises comes with its own challenges. Data quality (DQ) and data integrity (DI) are considered major bottlenecks to the successful implementation of NLP models. The massive explosion of available healthcare data challenges the very fundamentals of data integrity and accuracy. NLP technology plays a critical role in closing care gaps and improving data quality. NLP systems can automatically detect and identify errors in electronic patient notes compiled by speech recognition systems, enhancing patient safety and healthcare delivery.

Overcoming Adoption Challenges

Going forward, for the pragmatic use of NLP, enterprises need to harness their large swathes of unstructured data and mine them effectively for insights. However, a lot remains to be done. According to Gartner’s predictions, 80% of AI projects would fail to reach the production stage in 2020 alone. Which brings us to the question: why do NLP projects fail? The reasons are plenty: siloed data formats, lackadaisical data strategies, not having enough of the right data, choosing the wrong models, a lack of data synergies, and a lack of leadership support.

Overcoming these challenges calls for addressing the engineering, science, operations, and safety gaps between research prototypes and production-grade systems. Extracting reliable information from machine learning models requires a multidisciplinary team of data scientists, data engineers, and domain experts (such as doctors, financial analysts, or lawyers) who deeply understand the business and the customer for which a product is being built.

Fine-tuning pre-trained language models has become imperative in NLP projects because it can save a lot of time and improve model accuracy. There are different approaches to the safety, reliability, and monitoring of NLP models, and a team that claims to deliver production-grade software must have hands-on expertise with them.
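One of the simplest monitoring approaches is to watch whether the labels a deployed model predicts on live data drift away from what it produced on a reference set. The sketch below is a minimal example under stated assumptions: it uses total variation distance between two label distributions, and the function names, the sentiment labels, and the 0.2 alert threshold are all choices made for illustration. It is one option among many, not a prescribed method.

```python
from collections import Counter


def label_distribution(labels):
    """Convert a list of predicted labels into relative frequencies."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


def total_variation_distance(p, q):
    """Half the sum of absolute differences between two distributions."""
    labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(label, 0.0) - q.get(label, 0.0)) for label in labels)


def drift_alert(reference_labels, live_labels, threshold=0.2):
    """Return (distance, alert); the threshold is an arbitrary example value."""
    distance = total_variation_distance(
        label_distribution(reference_labels), label_distribution(live_labels)
    )
    return distance, distance > threshold


# Made-up predictions from a hypothetical sentiment model.
reference = ["positive"] * 50 + ["neutral"] * 30 + ["negative"] * 20
live = ["positive"] * 20 + ["neutral"] * 30 + ["negative"] * 50

distance, alert = drift_alert(reference, live)
print(f"distance={distance:.2f}, alert={alert}")
```

A shift in predicted labels does not prove the model is wrong, since the real-world mix of inputs may have changed. It is a cheap signal that the team should look at a sample of live data with domain experts.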

Conclusion

2020 was a significant year for the growth of NLP, with most enterprises increasing their budgets and investment in this area (according to Gradient Flow), against the grain of most technology investments during COVID-19. The future looks even brighter: the NLP market was valued at USD 13.16 billion in 2020 and is expected to be worth USD 42.04 billion by 2026, registering a CAGR of 21.5% during 2021-2026. It is also expected that 85% of business engagement will take place without human interaction in the coming years. Adopting an NLP strategy and technology across the whole company is therefore paramount for those who want not just to survive but to stay competitive and thrive. To learn more, you can register for our new workshop, Natural Language Processing for Business Leaders.