Healthcare NLP employs advanced filtering techniques to refine entity recognition by excluding irrelevant entities based on specific criteria like whitelists or regular expressions. This approach is essential for ensuring precision in healthcare applications, allowing only the most relevant entities to be processed in your NLP pipelines.

Introduction to ChunkFilterer for Healthcare NLP

In the continuously advancing field of Natural Language Processing (NLP), the accurate identification and filtering of entities are essential for deriving significant insights from unstructured text. Healthcare NLP offers a variety of Named Entity Recognition (NER) models available in the Models Hub, including the Healthcare NLP MedicalNerModel, which utilizes Bidirectional LSTM-CNN architecture, BertForTokenClassification, as well as powerful rule-based annotators. Among these tools, the ChunkFilterer annotator stands out as a powerful annotator designed to refine entity recognition results based on predefined criteria.

Let us start with a brief introduction to Healthcare NLP, followed by an in-depth exploration of the ChunkFilterer annotator, including detailed examples to demonstrate its functionality.

Healthcare NLP &LLM

Healthcare Library is a considerable part of the Spark NLP platform developed by John Snow Labs for improving natural language processing tasks within the healthcare sector. It offers more than 2,200 pre-trained models and pipelines carefully crafted for medical data, which makes possible accurate information extraction, named entity recognition for clinical and medical concepts, and text analysis capabilities. Healthcare, developed with advanced algorithms and updated periodically, helps in information processing to provide deep insights to healthcare professionals emanating from unstructured medical data sources such as electronic health records, clinical notes, and biomedical literature.

John Snow Labs has created custom large language models (LLMs) tailored for diverse healthcare use cases. These models come in different sizes and quantization levels, designed to handle tasks such as summarizing medical notes, answering questions, performing retrieval-augmented generation (RAG), named entity recognition and facilitating healthcare-related chats.

John Snow Labs’ GitHub repository serves as a collaborative platform where users can access open-source resources, including code samples, tutorials, and projects, to further enhance their understanding and utilization of Spark NLP and related tools.

John Snow Labs also offers periodic certification training to help users gain expertise in utilizing the Healthcare Library and other components of their NLP platform.

John Snow Labs’ demo page provides a user-friendly interface for exploring the library’s capabilities, allowing users to interactively test and visualize various functionalities and models, facilitating a deeper understanding of how these tools can be applied to real-world scenarios in healthcare and other domains.

What is ChunkFilterer?

The ChunkFilterer is a versatile annotator in the Healthcare NLP suite that allows users to filter entities extracted during Named Entity Recognition (NER) processes. This filtering capability is especially useful in clinical and healthcare applications, where there is often a need to focus on specific types of information or data points while ignoring irrelevant or extraneous entities. The primary function of ChunkFilterer is to process NER results and either include or exclude chunks based on a whitelist of terms or a regular expression pattern.

Imagine working with large amounts of clinical text, such as medical reports or patient histories. These texts often contain a wide array of entities, including medications, procedures, diagnoses, and dates. However, not all of these entities may be relevant to your specific analysis. For instance, if you’re only interested in filtering out certain medications or dates, the ChunkFilterer helps you achieve this without having to manually comb through the data.

Key Capabilities of ChunkFilterer

ChunkFilterer gives users control over what entities to retain for further analysis. Here’s how it achieves that:

- Flexible Filtering Criteria: You can define how to filter entities using two different methods:

- isIn: This mode allows you to define a whitelist of entities that should be retained. For example, if you’re only interested in specific medications like “Advil” or “Metformin,” you can use this mode to retain only those entities.

- regex: When more advanced pattern matching is needed, you can use regular expressions to define which chunks to keep or discard. This is especially useful for filtering out entities like dates, addresses, or any text pattern that can be described using a regex.

- Whitelist and Blacklist Options: The annotator allows users to define a whitelist of terms that should be processed while ignoring everything else. Alternatively, a blacklist can be set, enabling the model to process everything except the entities listed in the blacklist.

- Entity Confidence Filtering: One unique feature of the

ChunkFiltereris its ability to filter entities based on confidence scores. For certain use cases, it might be necessary to only retain entities that were identified with a high degree of confidence. TheChunkFilterercan use a CSV file containing entity-confidence pairs and apply a filtering mechanism based on this threshold. - Filter by Entity Label or Result: The

ChunkFiltereralso provides flexibility in how it filters data. You can choose to filter by the actual label (entity) assigned to a chunk, such as “MEDICATION” or “DIAGNOSIS”, or you can filter based on the result, which refers to the text extracted by the NER model. This distinction is valuable when you want to refine results in different ways.

Key Parameters and Usage

The ChunkFilterer annotator comes with a variety of configurable parameters:

- inputCols: Specifies the input columns containing the annotations to be filtered.

- outputCol: Defines the name of the output column that will hold the filtered annotations.

- criteria: Determines the filtering method (

isInorregex). - whiteList: Lists the entities to keep. Only entities in this list will be processed.

- blackList: Opposite of the whitelist, it lists entities to ignore.

- regex: Contains regular expressions to process chunks, allowing for flexible pattern matching.

- filterEntity: Chooses whether to filter by the entity label (

entity) or by the annotation result (result). - entitiesConfidence: Specifies a CSV file path containing entity-confidence pairs, allowing filtering based on confidence thresholds.

Setting Up Spark NLP Healthcare Library

First, you need to set up the Spark NLP Healthcare library. Follow the detailed instructions provided in the official documentation.

Additionally, refer to the Healthcare NLP GitHub repository for sample notebooks demonstrating setup on Google Colab under the “Colab Setup” section.

# Install the johnsnowlabs library to access Spark-OCR and Spark-NLP for Healthcare, Finance, and Legal. ! pip install -q johnsnowlabs

from google.colab import files

print('Please Upload your John Snow Labs License using the button below')

license_keys = files.upload()

from johnsnowlabs import nlp, medical

# After uploading your license run this to install all licensed Python Wheels and pre-download Jars the Spark Session JVM nlp.settings.enforce_versions=True nlp.install(refresh_install=True)

from johnsnowlabs import nlp, medical import pandas as pd

# Automatically load license data and start a session with all jars user has access to spark = nlp.start()

Practical Example with Code

To demonstrate the power of the ChunkFilterer annotator, let’s walk through a simple example. Suppose we want to filter medication names from a clinical note, keeping only specific drugs of interest.

# Annotator that transforms a text column from a dataframe into an Annotation ready for NLP

documentAssembler = nlp.DocumentAssembler()\

.setInputCol("text")\

.setOutputCol("document")

sentenceDetector = nlp.SentenceDetectorDLModel.pretrained("sentence_detector_dl_healthcare", "en", "clinical/models")\

.setInputCols(["document"])\

.setOutputCol("sentence")

# Tokenizer splits words in a relevant format for NLP

tokenizer = nlp.Tokenizer()\

.setInputCols(["sentence"])\

.setOutputCol("token")

# Clinical word embeddings trained on PubMED dataset

word_embeddings = nlp.WordEmbeddingsModel.pretrained("embeddings_clinical", "en", "clinical/models")\

.setInputCols(["sentence", "token"])\

.setOutputCol("embeddings")

posology_ner = medical.NerModel.pretrained("ner_posology", "en", "clinical/models") \

.setInputCols(["sentence", "token", "embeddings"]) \

.setOutputCol("ner")

ner_converter = medical.NerConverterInternal()\

.setInputCols(["sentence", "token", "ner"])\

.setOutputCol("ner_chunk")

chunk_filterer = medical.ChunkFilterer()\

.setInputCols("sentence", "ner_chunk")\

.setOutputCol("chunk_filtered")\

.setCriteria("isin")\

.setWhiteList(['Advil', 'metformin', 'insulin lispro'])

nlpPipeline = nlp.Pipeline(stages=[

documentAssembler,

sentenceDetector,

tokenizer,

word_embeddings,

posology_ner,

ner_converter,

chunk_filterer

])

empty_data = spark.createDataFrame([[""]]).toDF("text")

chunk_filter_model = nlpPipeline.fit(empty_data)

In this pipeline, we’re first transforming the text into a document format, detecting sentences, tokenizing the words, and applying clinical word embeddings. The NerModel identifies medications in the text, which are then converted into chunks by the NerConverterInternal. Finally, the ChunkFilterer refines these chunks, keeping only the medications specified in the whiteList parameter.

Here’s how we can use this pipeline to process a clinical note:

light_model = nlp.LightPipeline(chunk_filter_model) text = 'The patient was prescribed 1 capsule of Advil for 5 days. He was seen by the endocrinology service and was discharged on 40 units of insulin glargine at night, 12 units of insulin lispro with meals, metformin 1000 mg two times a day.' light_result = light_model.annotate(text)

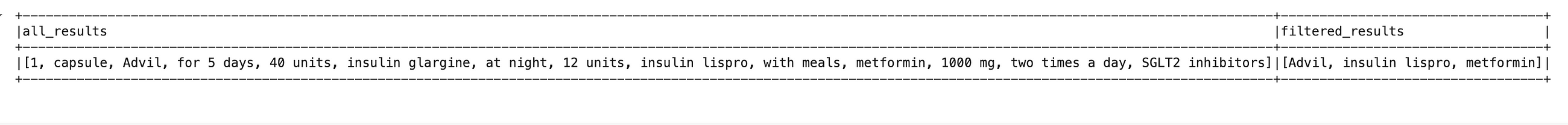

After running this code, light_result['ner_chunk'] would give us the original list of detected entities, while light_result['chunk_filtered'] would show only the entities that passed through the ChunkFilterer.

Displaying all_resuts and filtered_results

Additionally, the ChunkFilterer can be used with a regular expression to filter chunks based on patterns such as dates. Here’s how you can set up the filter to capture date formats like MM-DD-YYYY or MM/DD/YYYY:

chunk_filterer = medical.ChunkFilterer()\

.setInputCols("sentence", "ner_chunk")\

.setOutputCol("chunk_filtered")\

.setCriteria("regex")\

.setRegex(['(\d{1}|\d{2}).?(\d{1}|\d{2}).?(\d{4}|\d{2})'])

Let’s see this in action with some example data:

text = [

['Chris Brown was born on Jan 15, 1980, he was admitted on 9/21/22 and he was discharged on 10-02-2022'],

['Mark White was born 06-20-1990 and he was discharged on 02/28/2020. Mark White is 45 years old'],

['John was born on 07-25-2000 and he was discharged on 03/15/2022'],

['John Moore was born 08/30/2010 and he is 15 years old. John Moore was discharged on 12/31/2022']

]

data = spark.createDataFrame(text).toDF("text")

result = chunk_filter_model.transform(data)

result.select('ner_chunk.result', 'chunk_filtered.result').show(truncate=False)

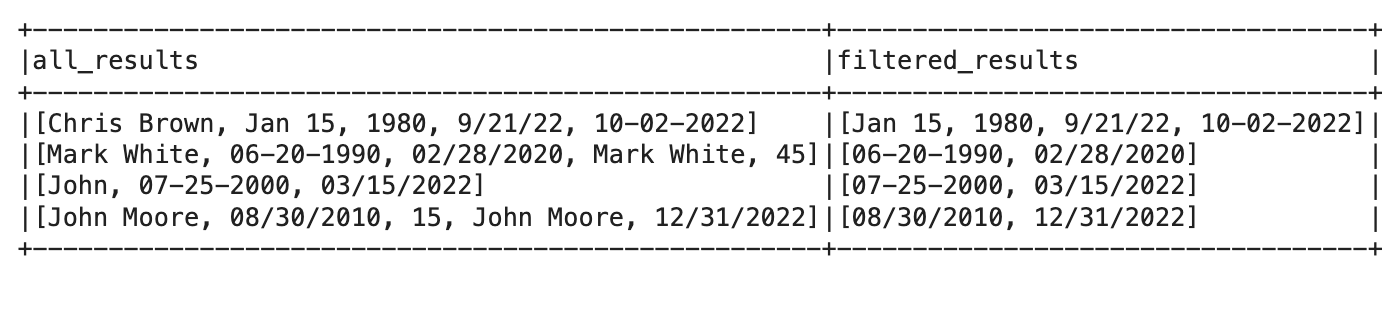

In this code, we define a ChunkFilterer with a regular expression that filters out date formats. The input data consists of sentences containing various date formats. After running the pipeline, the results will show all the detected entities in the ner_chunk column and only the filtered dates in the chunk_filtered column.

Displaying all_resuts and filtered_results

Conclusion

The ChunkFilterer annotator in Healthcare NLP is an important tool to refine and filter the extracted entities by the NER system. In clinical and healthcare applications where precision is paramount, the ability to get rid of irrelevant entities and focus on the main information can greatly enhance the effectiveness of downstream tasks like entity resolution or assertion status detection.

ChunkFilterer offers flexible methods of filtering, such as whitelisting, blacklisting, regular expression matching, and confidence-based filtering that enables different users to customize their NLP pipelines.

In practical applications, this will translate, to more precise diagnosis of patients, better recommendations for treatments, and much more efficient retrieval of information from large medical databases. By integrating ChunkFilterer into an NLP pipeline, one can maximize the accuracy and efficiency of healthcare data processing toward more meaningful insights or better decision-making.

ChunkFilterer is an important component in any healthcare NLP pipeline that requires careful filtering of entities. This tool is especially able to handle complex filtering scenarios easily and seamlessly integrates into the greater Healthcare NLP ecosystem. It is, therefore, a must-have tool in the quest to maximize value extracted from medical text data in unstructured form. You will provide the processes involved in analyzing clinical documents with much ease when you have this annotator in your tool kit for higher accuracy in extracting that critical information out of healthcare datasets.