In this article we are going to show two examples of how to import Hugging Face embeddings models into Spark NLP, and another example showcasing a bulk importing of 7 BertForSequenceClassification models.

In addition to that, a link to the several different notebooks for importing different transformers architectures and task types is also included.

1. Importing a RobertaEmbeddings model

- Importing Hugging Face and Spark NLP libraries and starting a session;

- Using a AutoTokenizer and AutoModelForMaskedLM to download the tokenizer and the model from Hugging Face hub;

- Saving the model in TensorFlow format;

- Load the model into Spark NLP using the proper architecture.

Let’s see step by step the process.

1.1. Importing the libraries and starting a session

First, we are going to need the transformers library (from Hugging Face), more specifically we are going to use AutoTokenizer and AutoModelForMaskedLM for downloading the model, and then TFRobertaModel from loading it from disk one downloaded. We are going to need tensorflow as well.

In case you don’t have them installed in your environment, run first:

!pip install -q transformers==4.6.1 tensorflow==2.4.1

Afterwards, import the libraries:

import transformers

from transformers import TFRobertaModel

from transformers import AutoTokenizer, AutoModelForMaskedLMimport tensorflow

Let’s add also some generic libraries for file management:

import json

import os

import shutil

And last, but not lest, let’s add sparknlp and pyspark libraries. In case you don’t have Spark NLP installed, please refer to https://nlp.johnsnowlabs.com/docs/en/install to see installation instructions. For Google Colab, it’s as easy as running the following line:

! wget http://setup.johnsnowlabs.com/colab.sh -O - | bash

Then, both PySpark and Spark NLP will be installed, so you can import the libraries…

import sparknlp

from sparknlp.base import *

from sparknlp.annotator import *

from sparknlp.annotator import *from pyspark.ml import Pipeline

from pyspark.sql import SparkSession

… and start a session…

import sparknlp

spark = sparknlp.start()

1.2. Using a AutoTokenizer and AutoModelForMaskedLM

HuggingFace API serves two generic classes to load models without needing to set which transformer architecture or tokenizer they are: AutoTokenizer and, for the case of embeddings, AutoModelForMaskedLM.

Let’s suppose we want to import roberta-base-biomedical-es, a Clinical Spanish Roberta Embeddings model. I’ve found it in Hugging Face hub, available at https://huggingface.co/models.

Let’s import both tokenizer and model using the classes described above:

tokenizer = AutoTokenizer.from_pretrained("PlanTL-GOB-ES/roberta-base-biomedical-es")model = AutoModelForMaskedLM.from_pretrained("PlanTL-GOB-ES/roberta-base-biomedical-es")

1.3. Saving the model in TensorFlow format

Models in HuggingFace can be, among others, in PyTorch or TensorFlow format.

MODEL_NAME_TF='my_model_tf' # Change metokenizer.save_pretrained(f'./MODEL_NAME_TF}_tokenizer/')

model.save_pretrained(f'./{MODEL_NAME_TF}', saved_model=True, save_format='tf')

In this case, save_format=’tf’ was set because the model was originally in PyTorch format. That would not be necessary if the model is originally in TensorFlow/Keras.

At this point, you can check the model can be loaded by HuggingFace TensorFlow specific classes by doing this:

loaded_model = TFRobertaModel.from_pretrained(f'./{MODEL_NAME_TF}')

After saving the model, you also need to add the vocab.txt file to the assets directory of the saved model. You can achieve that by doing:

vocab_pth = f"./{MODEL_NAME_TF}_tokenizer/vocab.txt"

saved_model_pth = f'./{MODEL_NAME_TF}/saved_model/1/assets/'!cp $vocab_pth $saved_model_pth

Sometimes, instead of a vocab.txt file, a vocab.json may be found. If that is the case, execute the following code before you copy the file with the cp command above:

with open(f'./{MODEL_NAME_TF}_tokenizer/vocab.json', 'r') as f:

data = json.load(f)with open(f'./{MODEL_NAME_TF}_tokenizer/vocab.txt', 'w') as f:

for d in data.keys():

f.write(d)

f.write('\n')

Last, but not least, copy also the merges.txt file the same way:

merges_pth = f"./{MODEL_NAME_TF}_tokenizer/merges.txt"

saved_model_pth = f'./{MODEL_NAME_TF}/saved_model/1/assets/'!cp $merges_pth $saved_model_pth

1.4. Load the model into Spark NLP using the proper architecture.

With the model ready, let’s just import it into Spark NLP using the RoBertaEmbeddings class.

embeddings = RoBertaEmbeddings.loadSavedModel(f'./{MODEL_NAME_TF}/saved_model/1',spark).setInputCols(["document",'token']).setOutputCol("embeddings")

Now, let’s save to disk the Spark NLP version of the model:

embeddings.write().overwrite().save(f"./{MODEL_NAME_TF}_spark_nlp")

And we are ready to zip it…

shutil.make_archive(f"roberta-base-biomedical-es", 'zip', f"./{MODEL_NAME_TF}_spark_nlp")

…and to upload it to the Spark NLP Models Hub. To do this, just visit https://nlp.johnsnowlabs.com/models and click on “Upload Your Model” button.

2. Bulk import of different BertForSequenceClassification models into Spark NLP

Let’s suppose I have taken a look at Hugging Face Models Hub (https://huggingface.co/models), and I have detected 7 models I want to import into Spark NLP, for BertForSequenceClassification:

models = ['philschmid/BERT-Banking77']

models.append('Hate-speech-CNERG/bert-base-uncased-hatexplain')

models.append('mrm8488/bert-mini-finetuned-age_news-classification')

models.append('juliensimon/autonlp-song-lyrics-18753417')

models.append('astarostap/autonlp-antisemitism-2-21194454')

models.append('aychang/bert-base-cased-trec-coarse')

models.append('mrm8488/bert-tiny-finetuned-sms-spam-detection')

Since the steps are more or less the same as described in first example, I’m going to automatize in a loop all the steps from downloading, to importing into SparkNLP and inferring, to illustrate an end-to-end import.

There is one extra step we need to carry out when importing Classifiers from Hugging Face: we need a labels.txt, a file that has 1 line per label included in the classifier.

That file can be created using the config.json file, with a field called id2label, provided in most of Hugging Face models. However, we may find models without that field, what leads to using just numeric values for the labels, what is not very user friendly.

To support both importing from config.json and creating our own labels, let’s declare an array:

predefined_labels = [None, None, None, None, ('False','True'), None, ('False','True')]

Each item in the array corresponds to the model in models array. If the value is None, then we will import the tags from the model. If there is a tuple of string labels, then the labels will be taken from the tuple. For example, for first model (philschmid/BERT-Banking77) will get the labels from the model, but last model (mrm8488/bert-tiny-finetuned-sms-spam-detection) don’t have them, so we are setting them to “False” and “True”.

Now, let’s predefine a pipeline that will be used to check every iteration the results of the model. All of these models will manage Sentences, not documents, so we will have a DocumentAssembler(), a SentenceDetectorDL() and a Tokenizer().

documentAssembler = DocumentAssembler()\

.setInputCol("text")\

.setOutputCol("document")sentenceDetector = SentenceDetectorDLModel.pretrained() \

.setInputCols(["document"]) \

.setOutputCol("sentence")spark_tokenizer = Tokenizer()\

.setInputCols("sentence")\

.setOutputCol("token")

And now, let’s start the bulk import!

from shutil import copyfile

import collections

import shutil

for i, model in enumerate(models):

print(f"- Downloading {model}")

tokenizer = AutoTokenizer.from_pretrained(model)

modelpt = AutoModelForSequenceClassification.from_pretrained(model)

tokenizer_path = f'./models/{model}_tokenizer'

model_tf_path = f'./models/{model}_tf'

model_sparknlp_path = f'./models/{model}_sparknlp'

model_zip_path = f'./models/{model}'

print(f"- Saving {model} in local")

tokenizer.save_pretrained(tokenizer_path)

modelpt.save_pretrained(model_tf_path, saved_model=True, save_format='tf')

print(f"- Adding vocab.txt")

vocab_pth = f"{tokenizer_path}/vocab.txt"

saved_model_pth = f'{model_tf_path}/saved_model/1/assets/vocab.txt'

copyfile(vocab_pth, saved_model_pth)

print(f"- Creating and adding labels.txt")

labels = None

labels_path = f'{model_pt_path}/labels.txt'

with open(f'{model_pt_path}/config.json', 'r') as f:

config = json.load(f)

if predefined_labels[i] is not None:

labels = list(predefined_labels[i])

else:

labels = config['id2label']

od = collections.OrderedDict(sorted(labels.items()))

labels = list(od.values())

if labels is None:

print("-- ERROR: predefined_labels are None and no 'id2label' found in config.json. Skipping")

continue

with open(labels_path, 'w') as fw:

for i, l in enumerate(labels):

if i != 0:

fw.write('\n')

fw.write(l)

print(f"- Labels: {labels}")labels_model_path = f'{model_tf_path}/saved_model/1/assets/labels.txt'

copyfile(labels_path, labels_model_path)

print("- Importing into SparkNLP")

seq = BertForSequenceClassification.loadSavedModel(

f'{model_tf_path}/saved_model/1',

spark)\

.setInputCols(["token", "sentence"])\

.setOutputCol("label")\

.setCaseSensitive(True)

print("- Saving the sparknlp model in disk")

seq.write().overwrite().save(model_sparknlp_path)

print("- Zipping it to prepare it for Modelshub upload")

shutil.make_archive(model_zip_path, 'zip', model_sparknlp_path)print("- Testing model in SparkNLP")

pipeline = Pipeline(stages = [

documentAssembler,

sentenceDetector,

spark_tokenizer,

seq])

empty = spark.createDataFrame([['']]).toDF("text")

p_model = pipeline.fit(empty)

test_sentences = ["""What feature in your car did you not realize you had until someone else told you about it?

Years ago, my Dad bought me a cute little VW Beetle. The first day I had it, me and my BFF were sitting in my car looking at everything.

When we opened the center console, we had quite the scare. Inside was a hollowed out, plastic phallic looking object with tiny spikes on it.

My friend and I literally screamed in horror. It was clear to us that somehow someone left their “toy” in my new car! We were shook, as they say.

This was my car, I had to do something. So, I used a pen to pick up the nasty looking thing and threw it out.

We freaked out about how gross it was and then we forgot about it… until my Dad called me.

My Dad said: How’s the new car? Have you seen the flower holder in the center console?

To summarize, we thought a flower vase was an XXX item…

In our defense, this is a picture of a VW Beetle flower holder. Camera - You are awarded a SiPix Digital Camera! call 09061221066 fromm landline."""]

res = p_model.transform(spark.createDataFrame(pd.DataFrame({'text': test_sentences})))result_df = res.select(F.explode(F.arrays_zip("sentence.result","label.result")).alias("cols"))\

.select(F.expr("cols['0']").alias("sentence"),

F.expr("cols['1']").alias("label"))result_df.show(50, truncate=100)

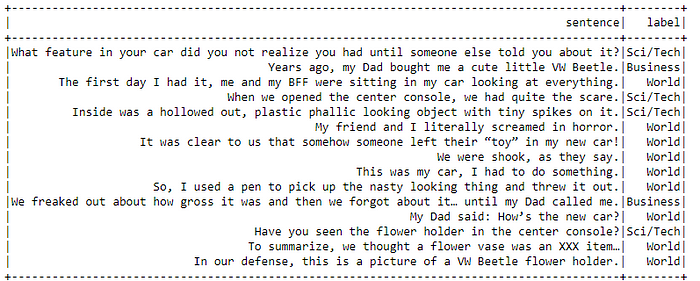

Let’s see how our mrm8488/bert-mini-finetuned-age_news-classification model classifies the topics of our sentences. using the predefined labels set by the author in Hugging Face:

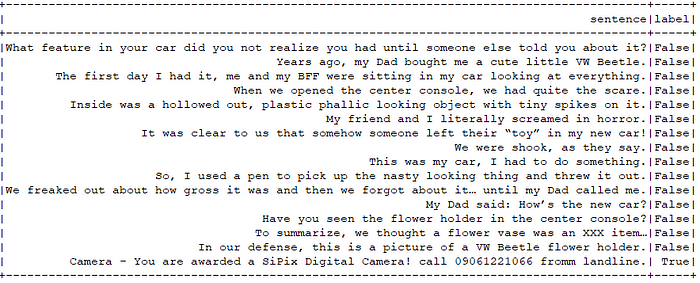

Now let’s take a look at the spam vs not spam model. The test was taken from a blog post, so it’s not spam. But we added a last spam sentence (Camera — You are awardeda SiPix…), to check if our spam model is able to detect it. These are the results of the iteration for the import of that model:

As we can see, the was correctly detected by our model, setting it to label True

Links to reference notebooks

The Spark NLP team has prepared a github documentation page with information about the supported transformers in Spark NLP and one reference notebook for each of them. You can find it here: https://github.com/JohnSnowLabs/spark-nlp/discussions/5669

Empower the Spark NLP community

Feel free to upload your imported models into the Spark NLP Models Hub as showed above, so that everyone can leverage your work. Make an impact in the Open Source community!