In industries such as healthcare, where regulatory-grade accuracy is mandatory, human validation of model results is often essential.

While models handle the legwork, Generative AI Lab not only augments the decision-making of domain experts but also empowers them to train better models as they go.

Incorporating a human-in-the-loop (HITL) approach when training new NLP models offers significant benefits, chiefly improved model accuracy and reliability.

Humans (in this case, domain experts) review and correct the outputs of models or of other humans, providing ground truth data for model training.

Such reviews produce high-quality, verified data that helps refine the model.

Generative AI Lab supports this iterative process, allowing the model to be calibrated on real-world inputs and on nuanced human judgments that automated systems might overlook, particularly in complex or ambiguous cases.

Furthermore, HITL can help address biases in machine learning models by ensuring diverse interpretation and adjustment of the data. This human oversight can accelerate training, lead to more robust models, and keep the final applications better aligned with human contexts and values, making them more effective and trustworthy.

Generative AI Lab offers a wide range of features that support HITL workflows, including task management, full audit trails, custom review and approval workflows, versioning, and analytics, covering the human-in-the-loop needs of high-compliance industries.

This article walks through some of the features and capabilities Generative AI Lab provides to support team collaboration and manual review and approval workflows.

For simplicity, imagine a project created in Generative AI Lab with a team made up of the project manager, one annotator (John), and one reviewer (Jane). The goal of the project is to create and train a model that identifies clinical entities.

The annotation team is tasked with identifying, manually annotating, and submitting the relevant entities, as described in the annotation guidelines provided by the project owner.

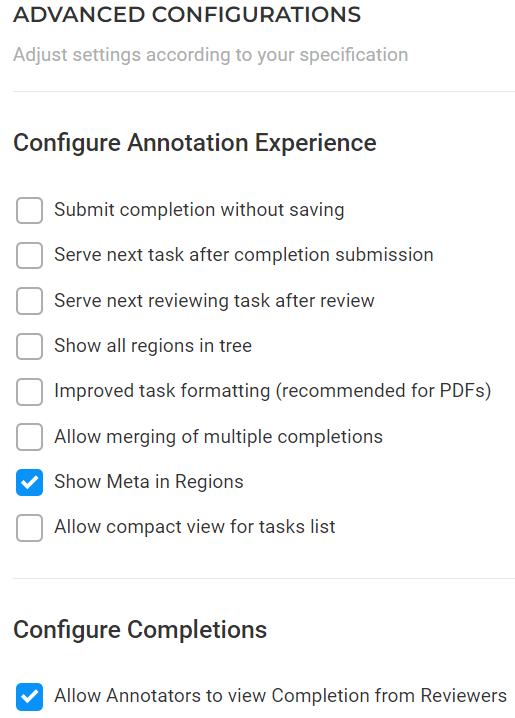

To ensure the accuracy and reliability of the ground truth data, the project manager configured the project as follows:

- Each task assigned to the annotator also has a reviewer assigned to it. This ensures that all annotated data goes through review, improving the quality and dependability of the results.

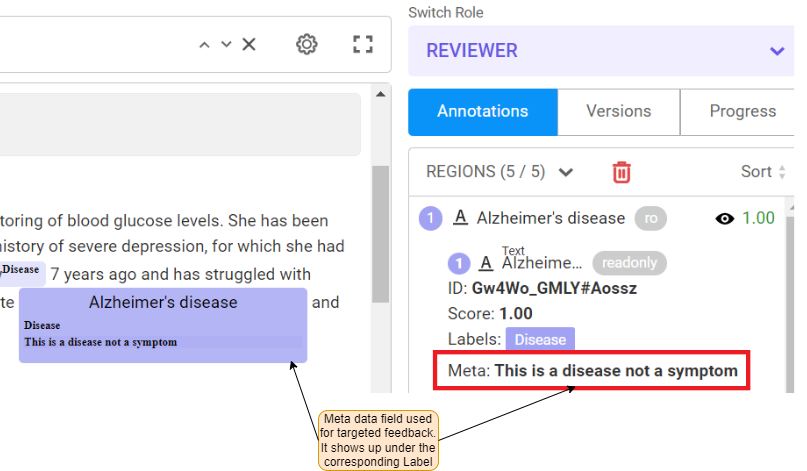

- “Show Meta in Regions”: enabled. This displays the metadata associated with a label on screen.

- “Allow Annotators to view Completion from Reviewers”: enabled. This lets annotators see the completions submitted by reviewers.

For the more complex workflows that Generative AI Lab supports, see the “Workflows” topic in the Help documentation that ships with the application.

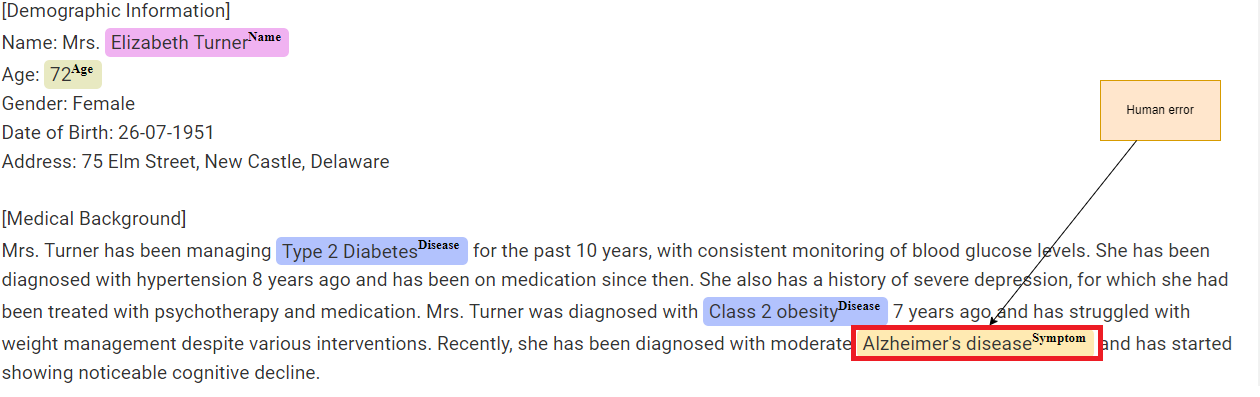

Suppose John annotates and submits his assigned task, but makes a mistake:

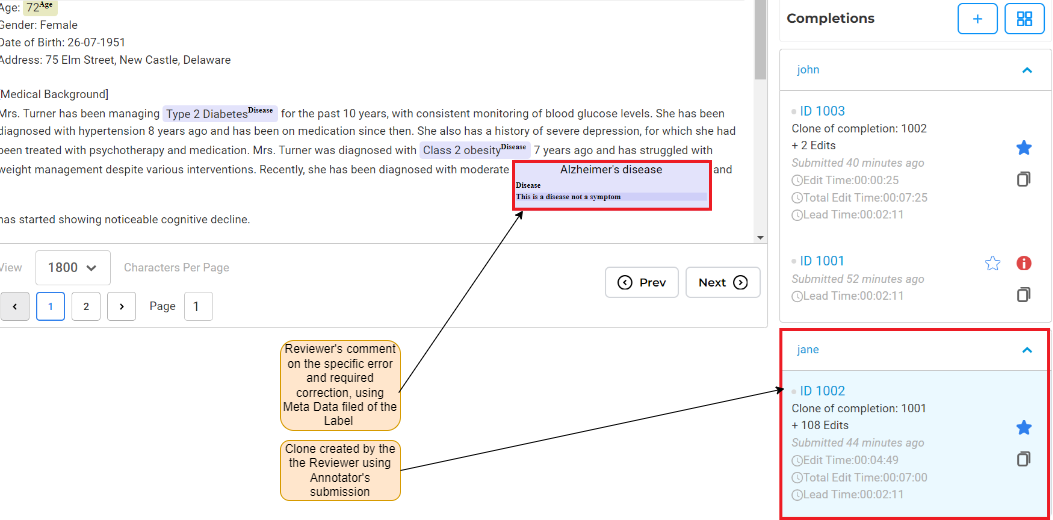

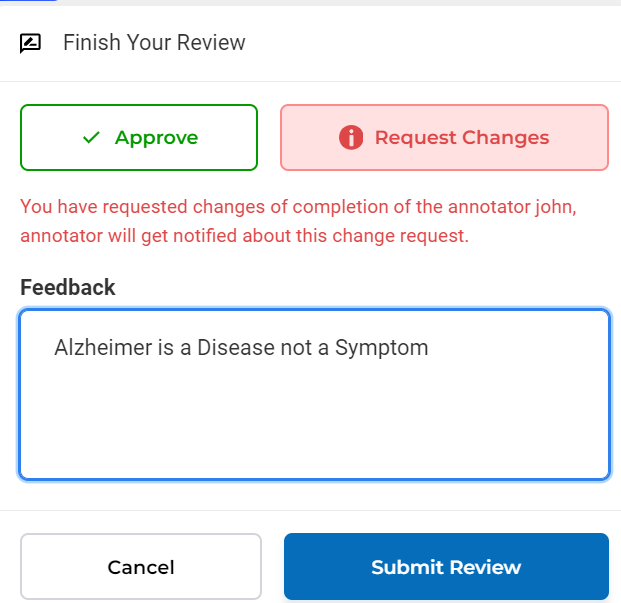

When Jane reviews the task and notices the error, she uses Generative AI Lab's cloning feature: she clones John's annotated task, corrects it, adds targeted feedback in the metadata field of the incorrectly used label, requests changes for the task, and finally submits. Here are the steps in detail:

- Review and request changes:

- Clone the annotator’s submission, apply the correction in place, and add metadata that explains the change request.

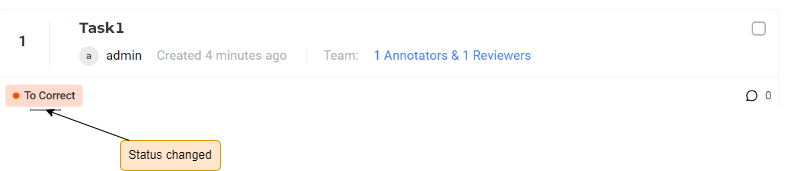

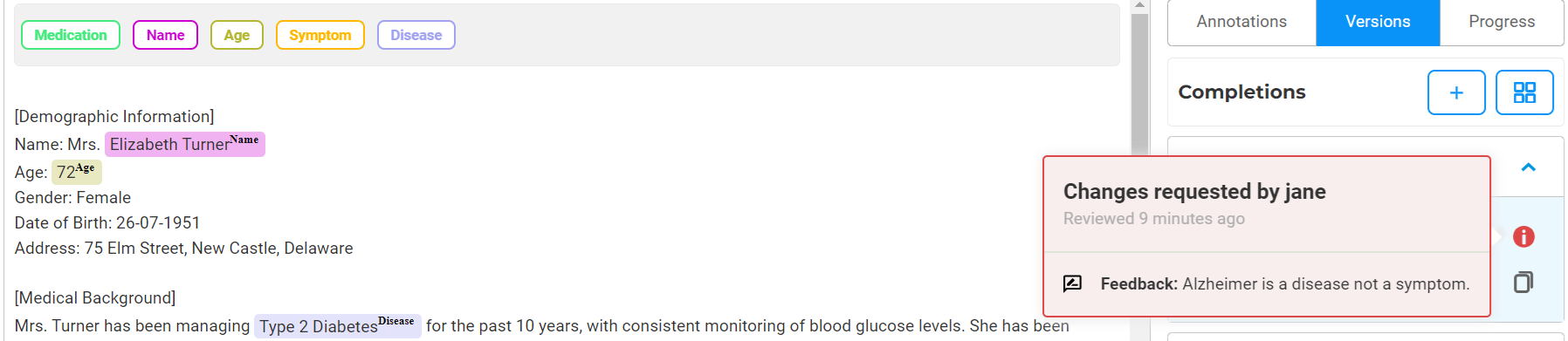

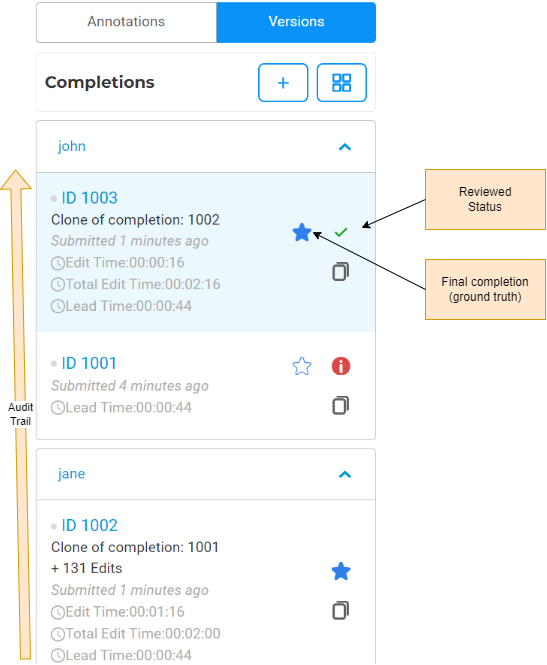

- The annotator opens his task list, notices the task’s status change, opens the task, and uses the Versions tab to view the feedback and change request provided by the reviewer.

Because the application keeps a detailed trail of every change to a task, an already submitted task cannot be modified. To apply the changes the reviewer requested, the annotator uses Generative AI Lab's clone feature: with a single click, the reviewer's corrected submission is cloned, the annotator applies the requested change to the clone, and the task is then resubmitted.
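The review cycle described above can be modeled as a small data structure. The sketch below is purely illustrative and assumes nothing about Generative AI Lab's internals: `Completion`, `Task`, `clone`, the status strings, and the "aspirin" labels are all hypothetical. It captures the key property the workflow relies on: a submitted completion is never edited in place; corrections are made on clones that record which completion they came from.

```python
from dataclasses import dataclass, field, replace
from itertools import count
from typing import Optional

# Hypothetical id generator for completions (an assumption for this sketch).
_ids = count(1)


@dataclass(frozen=True)
class Completion:
    """One immutable annotation pass over a task (hypothetical model)."""
    id: int
    author: str
    labels: dict                              # annotated text span -> label
    meta: dict = field(default_factory=dict)  # label -> reviewer comment
    parent_id: Optional[int] = None           # completion this one was cloned from

    def clone(self, author: str, **changes) -> "Completion":
        # Copy the dicts so the clone never shares mutable state with its source.
        changes.setdefault("labels", dict(self.labels))
        changes.setdefault("meta", dict(self.meta))
        # replace() builds a new frozen instance; the source stays untouched.
        return replace(self, id=next(_ids), author=author,
                       parent_id=self.id, **changes)


@dataclass
class Task:
    completions: list = field(default_factory=list)  # append-only history
    status: str = "assigned"

    def submit(self, completion: Completion) -> None:
        self.completions.append(completion)
        self.status = "submitted"

    def request_changes(self, completion: Completion) -> None:
        self.completions.append(completion)
        self.status = "changes requested"


# John submits a completion containing a (made-up) labeling mistake.
task = Task()
john_v1 = Completion(next(_ids), "john", {"aspirin": "Symptom"})
task.submit(john_v1)

# Jane clones John's submission, fixes the label, explains the fix in the
# label's metadata, and requests changes.
jane_fix = john_v1.clone(
    "jane",
    labels={"aspirin": "Drug"},
    meta={"Drug": "Aspirin is a medication, not a symptom."},
)
task.request_changes(jane_fix)

# John clones Jane's corrected completion and resubmits it.
john_v2 = jane_fix.clone("john")
task.submit(john_v2)

print(task.status, [c.author for c in task.completions])
```

Because every completion is frozen and clones carry a `parent_id`, the full chain John → Jane → John can be reconstructed from the task's history, which is the kind of versioned trail the Versions tab exposes.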

Generative AI Lab keeps a full audit trail of all created completions, storing each entry with the authenticated user and a timestamp. Annotators and Reviewers cannot delete completions, and only Managers and Project Owners can remove tasks.
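The audit-trail rules in the paragraph above can be sketched as a small Python class. This is a hypothetical model, not the product's implementation: `Project`, `AuditEntry`, the role names `manager` and `owner`, and the action strings are assumptions. Entries are only ever appended with the user and a UTC timestamp, there is deliberately no method for deleting a completion, and only the assumed manager and owner roles may remove a task.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Assumed role names; only these roles may remove tasks in this sketch.
TASK_REMOVERS = {"manager", "owner"}


@dataclass(frozen=True)
class AuditEntry:
    user: str
    action: str
    task_id: int
    timestamp: datetime


class Project:
    """Hypothetical project with an append-only audit trail."""

    def __init__(self, roles: dict):
        self.roles = roles          # authenticated user -> role
        self.tasks: set = set()
        self._audit: list = []      # only ever appended to

    def _record(self, user: str, action: str, task_id: int) -> None:
        if user not in self.roles:
            raise PermissionError(f"unknown user {user!r}")
        self._audit.append(
            AuditEntry(user, action, task_id, datetime.now(timezone.utc)))

    @property
    def audit_trail(self) -> tuple:
        # Expose a read-only copy so callers cannot edit or delete entries.
        return tuple(self._audit)

    def add_completion(self, user: str, task_id: int) -> None:
        self._record(user, "create_completion", task_id)
        self.tasks.add(task_id)

    # Note: no delete_completion method exists; completions are permanent.

    def remove_task(self, user: str, task_id: int) -> None:
        if self.roles.get(user) not in TASK_REMOVERS:
            raise PermissionError(f"{user!r} may not remove tasks")
        self.tasks.discard(task_id)
        # The removal itself is logged; earlier entries are kept.
        self._record(user, "remove_task", task_id)


project = Project({"john": "annotator", "jane": "reviewer", "pm": "manager"})
project.add_completion("john", 1)
project.add_completion("jane", 1)

try:
    project.remove_task("jane", 1)   # reviewers cannot remove tasks
except PermissionError as err:
    print(err)

project.remove_task("pm", 1)
print([(e.user, e.action) for e in project.audit_trail])
```

Logging the removal as a new entry, rather than erasing history, keeps the trail complete even when a task disappears from the project.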

The next figure shows the full audit trail as viewed by the Project Owner:

This feature of Generative AI Lab offers significant benefits, including:

- Enhanced Feedback: Reviewers can make precise corrections and offer detailed comments on individual labels. This clarity helps annotators understand the specific improvements needed, enhancing their learning and performance.

- Improved Collaboration: Annotators gain access to the reviewer’s cloned submissions, allowing them to better align their work with the reviewer’s expectations. This access fosters improved collaboration and understanding between team members.

- Quality Control: The feature ensures a higher standard of annotations by delivering clear and actionable feedback. This helps maintain the integrity and quality of the data, which is crucial for reliable outputs.

Getting Started is Easy

Generative AI Lab is a text annotation tool that can be deployed in a couple of clicks on Amazon or Azure cloud infrastructure, or installed on-premises with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install