State-of-the-Art Medical Language Models

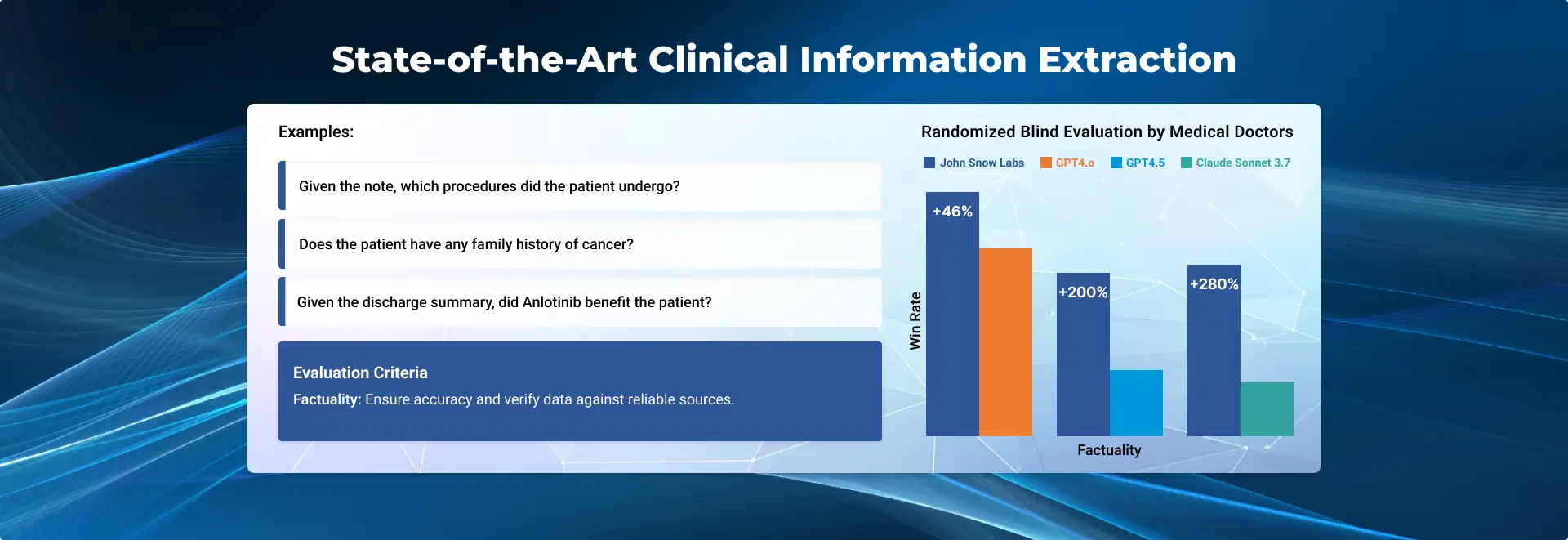

Clinical Information Extraction

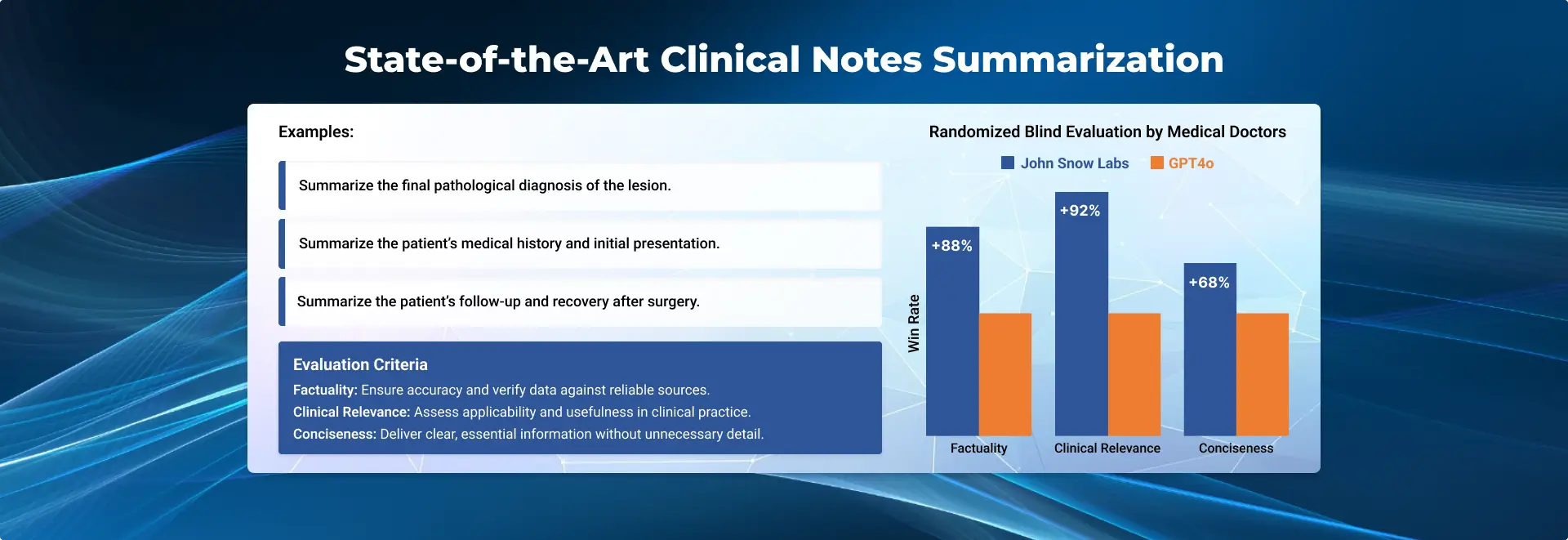

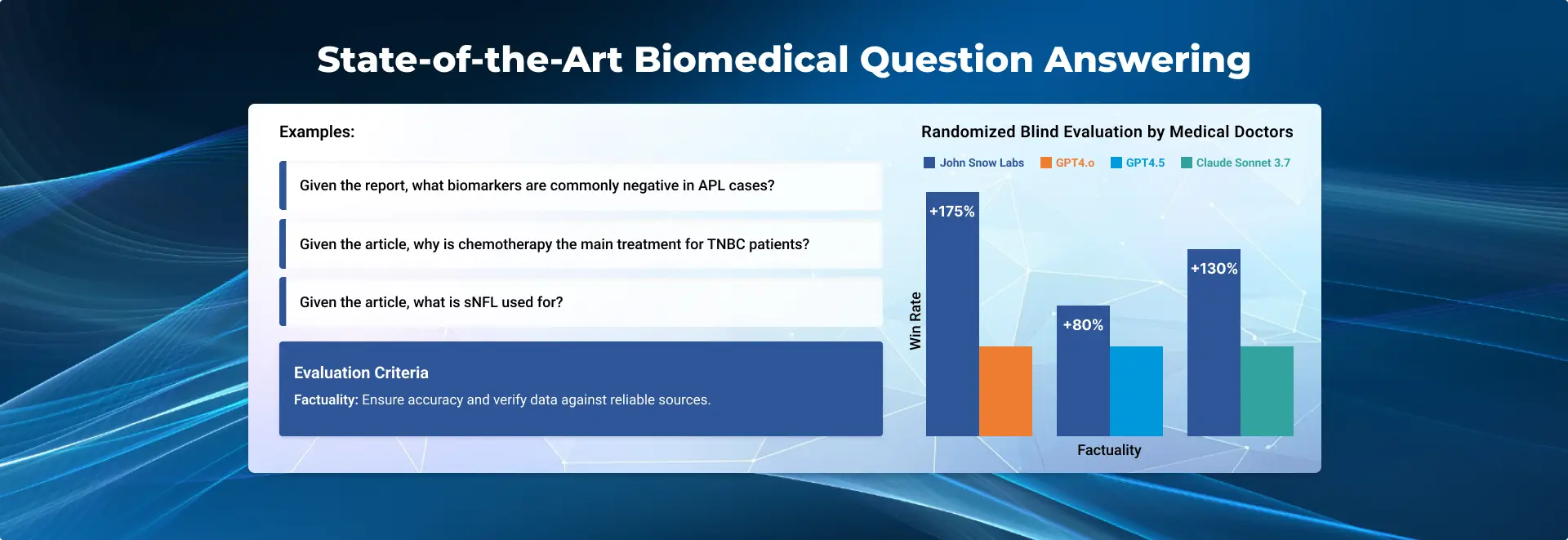

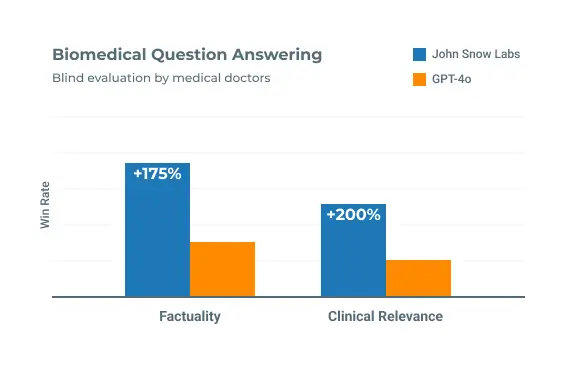

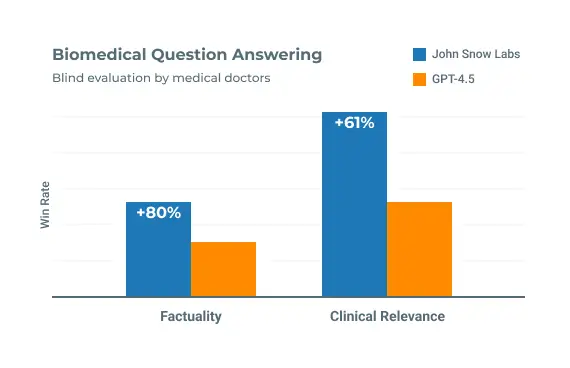

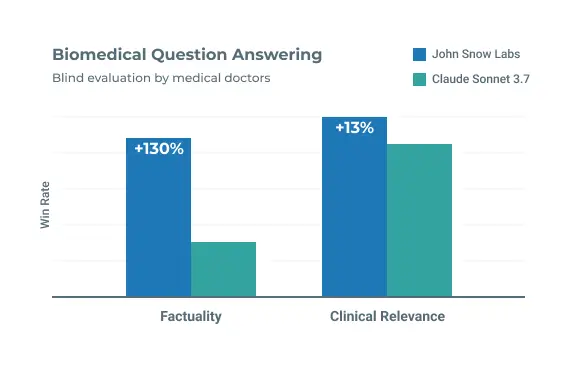

BioMedical Question Answering

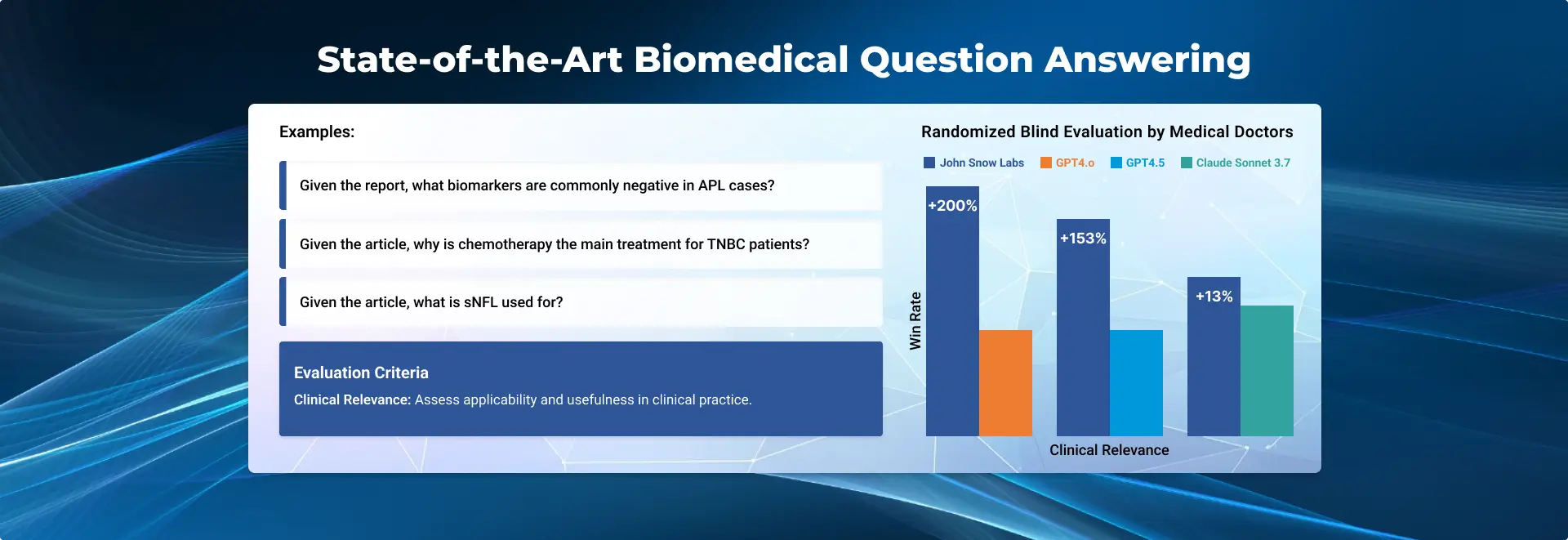

Train and fine-tune dozens of models per week

Build a growing set of healthcare-specific LLM benchmarks

Develop and run automated tests for compliance and Responsible AI

Benchmark against every new LLM that anyone publishes

Fine-tune with proprietary data, annotated by medical doctors

Work with hardware and cloud providers to optimize LLM speed, size, and cost

| Benchmark | John Snow Labs | GPT-4o | Med-PaLM-2 |
|---|---|---|---|
| Clinical Knowledge | 89.4 | 86.0 | 88.3 |
| Clinical Assessment | 75.5 | 69.5 | 71.3 |
| Medical Research Q&A | 79.4 | 75.2 | 79.2 |
| Medical Genetics | 95.0 | 91.0 | 90.0 |
| Anatomy | 85.2 | 80.0 | 77.8 |
| Professional Medicine | 94.9 | 93.0 | 95.2 |
| Life Science | 93.8 | 95.1 | 94.4 |
| Core Concepts | 83.2 | 76.9 | 80.9 |
| Clinical Case Analysis | 79.8 | 78.9 | 79.7 |
| Average Score | 86.2 | 82.9 | 84.1 |

Accuracy on OpenMed Benchmarks
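As a quick sanity check on the "Average Score" row, the following minimal sketch recomputes the unweighted mean of the nine benchmark scores for each model. It assumes the average is a simple mean over the rows shown; the source does not say how it was calculated. Recomputed from the rounded values in the table, the means come out to about 86.24, 82.84, and 84.09. The GPT-4o figure differs from the reported 82.9 by less than 0.1, which may simply reflect rounding of the per-benchmark scores.

```python
# Scores copied from the table above (already rounded to one decimal there).
scores = {
    "John Snow Labs": [89.4, 75.5, 79.4, 95.0, 85.2, 94.9, 93.8, 83.2, 79.8],
    "GPT-4o": [86.0, 69.5, 75.2, 91.0, 80.0, 93.0, 95.1, 76.9, 78.9],
    "Med-PaLM-2": [88.3, 71.3, 79.2, 90.0, 77.8, 95.2, 94.4, 80.9, 79.7],
}


def mean_score(values):
    """Unweighted mean; an assumption about how the average row was derived."""
    return sum(values) / len(values)


averages = {model: mean_score(values) for model, values in scores.items()}

for model, avg in averages.items():
    print(f"{model}: {avg:.2f}")
```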

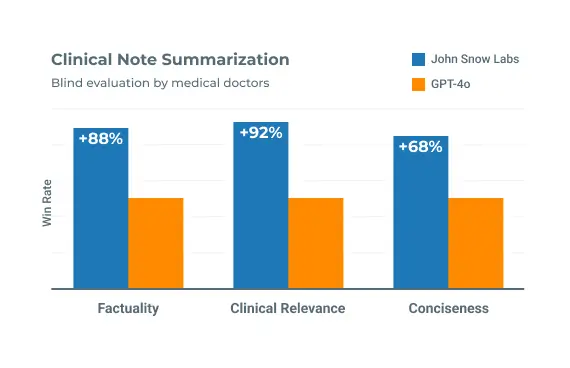

Preferred 88% more often on factuality, 92% more often on relevance, and 68% more often on conciseness compared to GPT-4o.

Sample Questions:

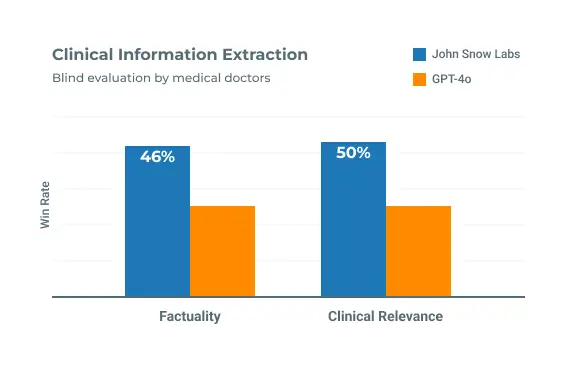

Preferred 46% more often on factuality, 50% more often on relevance, and 45% more often on conciseness compared to GPT-4o.

Sample Questions:

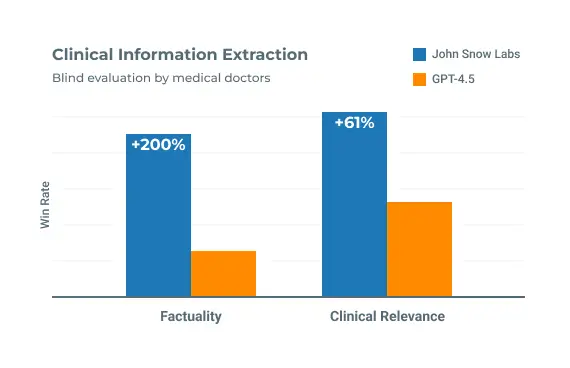

Preferred 175% more often on factuality, 200% more often on relevance, and 256% more often on conciseness compared to GPT-4o.

Sample Questions:

The US Department of Veterans Affairs (VA) is a health system that serves over 9 million veterans and their families. This collaboration with the VA National Artificial Intelligence Institute (NAII), the VA Innovations Unit (VAIU), and the Office of Information Technology (OI&T) shows that while the out-of-the-box accuracy of current LLMs on clinical notes is unacceptable, it can be significantly improved with pre-processing, for example by running John Snow Labs’ clinical text summarization models on the notes first and feeding the resulting summaries to the generative LLM as input.
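The summarize-then-generate idea described above can be sketched as a two-stage pipeline. This is only an illustration: `answer_from_note`, `stub_summarize`, and `stub_generate` are hypothetical names, the stubs stand in for a real summarization model and a real generative LLM, and none of this reflects the actual John Snow Labs or VA implementation.

```python
from typing import Callable


def answer_from_note(
    note: str,
    question: str,
    summarize: Callable[[str], str],
    generate: Callable[[str], str],
) -> str:
    """Summarize a clinical note first, then prompt the LLM with the summary."""
    summary = summarize(note)
    prompt = (
        f"Clinical summary:\n{summary}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return generate(prompt)


def stub_summarize(note: str) -> str:
    """Placeholder summarizer (assumption): keeps the first two sentences."""
    sentences = [s.strip() for s in note.split(".") if s.strip()]
    return ". ".join(sentences[:2]) + "."


def stub_generate(prompt: str) -> str:
    """Placeholder LLM (assumption): reports the prompt size instead of answering."""
    return f"[model output for a {len(prompt)}-character prompt]"


note = (
    "Patient reports chest pain. ECG shows no acute changes. "
    "Family history is noncontributory. Follow-up scheduled."
)
answer = answer_from_note(
    note, "What did the ECG show?", stub_summarize, stub_generate
)
print(answer)
```

Passing the two stages in as callables keeps the pipeline independent of any particular model, so a real summarizer and a real LLM client could be swapped in without changing `answer_from_note`.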

Using John Snow Labs’ healthcare LLMs, the ClosedLoop platform enables users to retrieve patient cohorts using free-text prompts. Examples include: “Which patients are in the top 5% of risk for an unplanned admission and have chronic kidney disease of stage 3 or higher?” or “Which patients are in the top 5% of risk for an admission, are older than 72, and have not undergone an annual wellness checkup?”
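To make the first example prompt concrete, here is a minimal sketch of the structured filter such a prompt might resolve to. The `Patient` and `CohortFilter` types, their field names, and the reading of "top 5% of risk" as a risk percentile of 95 or higher are all assumptions for illustration. The step that turns free text into a filter (the LLM's job) is not shown, and this is not the ClosedLoop API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Patient:
    patient_id: str
    admission_risk_percentile: float  # 0-100, higher means higher risk
    ckd_stage: Optional[int]          # None if no chronic kidney disease


@dataclass
class CohortFilter:
    min_risk_percentile: float
    min_ckd_stage: int


def select_cohort(patients: List[Patient], f: CohortFilter) -> List[Patient]:
    """Return the patients that satisfy every condition in the filter."""
    return [
        p
        for p in patients
        if p.admission_risk_percentile >= f.min_risk_percentile
        and p.ckd_stage is not None
        and p.ckd_stage >= f.min_ckd_stage
    ]


# Hypothetical structured form of: "top 5% of risk for an unplanned
# admission and chronic kidney disease of stage 3 or higher".
cohort_filter = CohortFilter(min_risk_percentile=95.0, min_ckd_stage=3)

# Made-up example records, not real data.
patients = [
    Patient("p1", 97.2, 4),
    Patient("p2", 98.5, None),
    Patient("p3", 60.0, 3),
    Patient("p4", 95.0, 2),
]

cohort = select_cohort(patients, cohort_filter)
print([p.patient_id for p in cohort])
```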

This talk covers how applying healthcare-specific large language models (LLMs) to electronic health records (EHRs) offers a promising approach to constructing detailed oncology patient timelines. It also explores how John Snow Labs’ healthcare-specific LLM can match patients with the National Comprehensive Cancer Network (NCCN) clinical guidelines. By analyzing comprehensive patient data, including genetic, epigenetic, and phenotypic information, the LLM accurately aligns individual patient profiles with the most relevant clinical guidelines. This enhances precision in oncology care by ensuring that each patient receives tailored treatment recommendations based on the latest NCCN guidelines.