NLP Lab 5.6 introduces a series of technical enhancements that refine the data annotation and export processes. This blog post gives a detailed overview of the new functionality, focusing on technical aspects and user interface improvements. Key updates include annotator-based filters for exports, a readiness check for OCR servers, and user interface optimizations in several modules. Together, these features are designed to make data management and user interaction in NLP Lab more efficient.

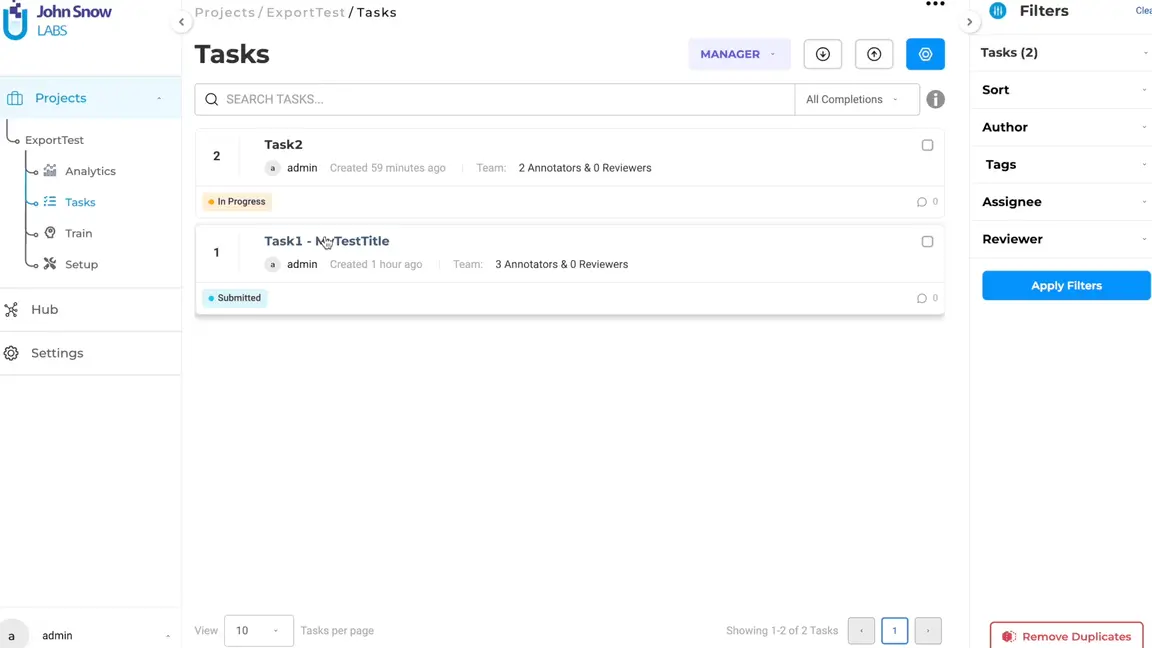

New: Filter Exported Completions by Annotator

Previously, isolating the annotations made by specific annotators was laborious: users had to examine and process the exported JSON files, either manually or with custom code, to identify the target completions. This was time-consuming and error-prone. To address this, NLP Lab 5.6 introduces an enhanced export feature that simplifies the process.

Users can now select the data they want to export by applying annotator-based filters, as illustrated above. New selectors on the Export page make the export more targeted and efficient. Once the desired annotators are selected, the completions they created can be further filtered by status for a refined JSON export: users can filter tasks based on whether they lack completions or contain a starred completion. These filters work alongside the existing task-based filters. Together they not only save time but also improve the accuracy and relevance of the exported data, which is valuable for users working on extensive annotation projects.
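For comparison, the sketch below shows the kind of custom post-processing that was previously needed on an exported JSON file: keep only completions created by selected annotators, optionally keep only starred completions, and drop tasks left without completions. It is an offline illustration, not NLP Lab's export code. The field names (`completions`, `created_username`, and `honeypot` as the starred flag) are assumptions about the export schema; check them against your own export before relying on this.

```python
from typing import Any, Iterable

# Assumed field names in the exported JSON; verify them against a real export.
COMPLETIONS_KEY = "completions"
ANNOTATOR_KEY = "created_username"
STARRED_KEY = "honeypot"  # assumed to mark a starred completion


def filter_export(
    tasks: list[dict[str, Any]],
    annotators: Iterable[str],
    starred_only: bool = False,
    exclude_empty: bool = True,
) -> list[dict[str, Any]]:
    """Return copies of tasks keeping only completions by the given annotators."""
    wanted = set(annotators)
    filtered = []
    for task in tasks:
        kept = [
            c
            for c in task.get(COMPLETIONS_KEY, [])
            if c.get(ANNOTATOR_KEY) in wanted
            and (not starred_only or c.get(STARRED_KEY, False))
        ]
        if exclude_empty and not kept:
            continue  # drop tasks left with no matching completions
        filtered.append({**task, COMPLETIONS_KEY: kept})
    return filtered


if __name__ == "__main__":
    sample = [
        {"id": 1, "completions": [
            {"created_username": "alice", "honeypot": True},
            {"created_username": "bob", "honeypot": False},
        ]},
        {"id": 2, "completions": [{"created_username": "bob", "honeypot": True}]},
        {"id": 3, "completions": []},
    ]
    result = filter_export(sample, ["alice"], starred_only=True)
    print([t["id"] for t in result])  # [1]
```

The function copies each task rather than mutating it, so the original export can be filtered several ways without reloading the file.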

New: Filter Export by Predictions

This feature lets users filter out tasks that lack pre-annotation results (predictions) when exporting tasks and completions. With this option, NLP Lab further improves the export process, allowing users to focus on tasks that have pre-annotation outcomes.
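The same idea can be sketched offline for prediction-based filtering: a task is kept only if it carries at least one prediction. As with the annotator sketch, `predictions` is an assumed field name in the exported JSON, and this is an illustration rather than NLP Lab's own export logic.

```python
from typing import Any

# Assumed key holding pre-annotation results in each exported task.
PREDICTIONS_KEY = "predictions"


def keep_pre_annotated(tasks: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Drop tasks whose prediction list is missing or empty."""
    return [task for task in tasks if task.get(PREDICTIONS_KEY)]


if __name__ == "__main__":
    sample = [
        {"id": 1, "predictions": [{"result": "..."}]},  # placeholder content
        {"id": 2, "predictions": []},
        {"id": 3},
    ]
    print([t["id"] for t in keep_pre_annotated(sample)])  # [1]
```

This checks only that predictions are present; a stricter variant could also inspect the contents of each prediction.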

Usability Improvements

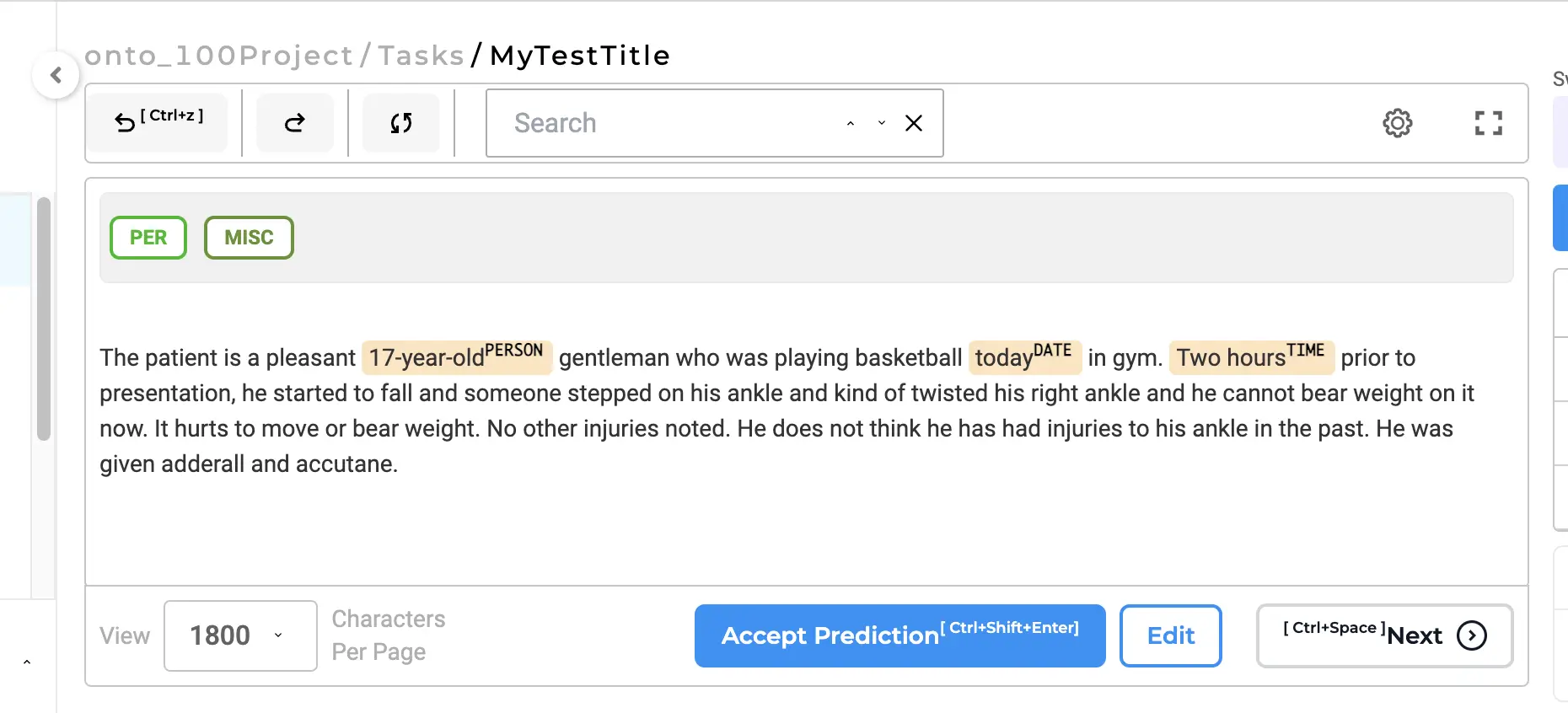

Keyboard Shortcut for “Accept Prediction”

A keyboard shortcut was added for the “Accept prediction” button. This button, which creates and submits a completion based on automatic pre-annotations, can now be triggered from the keyboard, so users can stay focused and work efficiently without breaking their workflow. In addition, the “Accept prediction” button, previously unavailable for pre-annotated tasks in Visual NER projects, is now available there as well, making it easier to handle pre-annotated tasks in those projects.

Readiness Check for OCR Server on Image and PDF Import

The selection of OCR servers for image and PDF projects has been refined. Previously, when visual documents were imported, the import page automatically selected any available OCR server. Although straightforward, this approach ignored the readiness of the project’s designated OCR server. NLP Lab 5.6 introduces a more intelligent selection mechanism: while the project’s dedicated OCR server is still being deployed, the user interface temporarily pauses. This keeps the OCR integration aligned with the project’s readiness and avoids mismatches or delays that could disrupt the project workflow.

Once deployment completes, the system automatically sets the newly deployed server as the project’s default OCR server. As a result, each project’s images are processed by its own OCR server as soon as that server is available, making document ingestion more efficient.
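The wait-then-select behavior can be illustrated with a generic polling loop. This is not NLP Lab's implementation: `get_status` is a hypothetical callable standing in for however the deployment state of the project's OCR server is queried, and the `"ready"` status string and the `ocr_server` / `default_ocr_server` keys are assumptions made for the sketch.

```python
import time
from typing import Any, Callable, Optional


def wait_for_ocr_server(
    get_status: Callable[[], str],
    max_polls: int = 60,
    poll_interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll a hypothetical status callable until it reports "ready".

    Returns True as soon as the server is ready, False after max_polls checks.
    """
    for attempt in range(max_polls):
        if get_status() == "ready":
            return True
        if attempt < max_polls - 1:
            sleep(poll_interval_s)  # pause before checking again
    return False


def select_default_ocr_server(
    project: dict[str, Any],
    get_status: Callable[[], str],
    **poll_kwargs: Any,
) -> Optional[str]:
    """Set the project's dedicated OCR server as default once it is deployed."""
    server = project["ocr_server"]  # hypothetical key
    if wait_for_ocr_server(get_status, **poll_kwargs):
        project["default_ocr_server"] = server  # hypothetical key
        return server
    return None  # not ready in time: leave the project unchanged


if __name__ == "__main__":
    statuses = iter(["deploying", "deploying", "ready"])
    project = {"ocr_server": "ocr-project-42"}
    # A no-op sleep keeps the demo instant.
    print(select_default_ocr_server(project, lambda: next(statuses), sleep=lambda s: None))
    # ocr-project-42
```

Injecting `sleep` keeps the loop testable without real delays; the bounded number of polls means a deployment that never finishes does not block forever.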

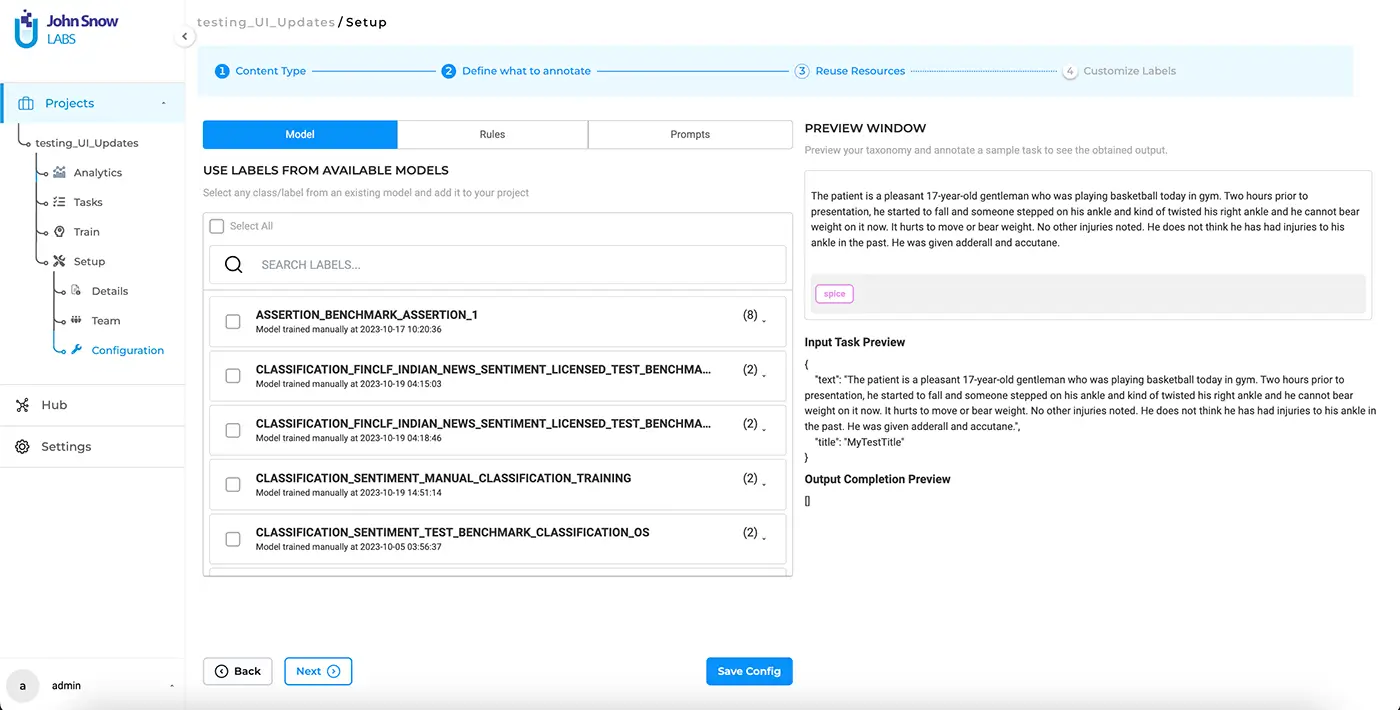

Enhanced Usability of the “Reuse Resources” Settings

The “Reuse Resources” section, step 3 of the Project Configuration, now offers an improved user experience and more efficient resource management. Previously, when visiting this section during project configuration, users found it hard to identify which resources (Models, Rules, or Prompts) were already included in the project.

To address this, the “Reuse Resources” tab now clearly identifies the models, prompts, and rules already added to the project. The feature was also expanded to show a detailed view of the specific labels, choices, or relations selected. This makes it clear which resources the project currently uses, helping users make well-informed decisions about adding or removing resources and streamlining work in the Project Configuration settings.

Enhanced UI for Reuse Resources

The Reuse Resources page has also been enhanced to improve navigation and the resource addition process. The updates refine the user interface while keeping the application’s core functionality, so users get a more intuitive experience without significant workflow changes. One improvement is tab-based navigation for selecting Models, Prompts, and Rules. Replacing the previous buttons with tabs improves both the visual organization of the page and the ease of navigation, so users can locate and manage the resources their projects need more efficiently.

The process of adding resources to a project has also been updated. Users no longer need to click the “Add to Project Configuration” button each time they select a new model, prompt, or rule; the system automatically adds the chosen resource to the project configuration. This removes repetitive steps and saves time during resource selection.

These UI updates let users manage resources more effectively while following the same workflow. They reflect NLP Lab’s focus on user convenience and efficiency, and its commitment to continuous improvement in response to user needs and feedback.

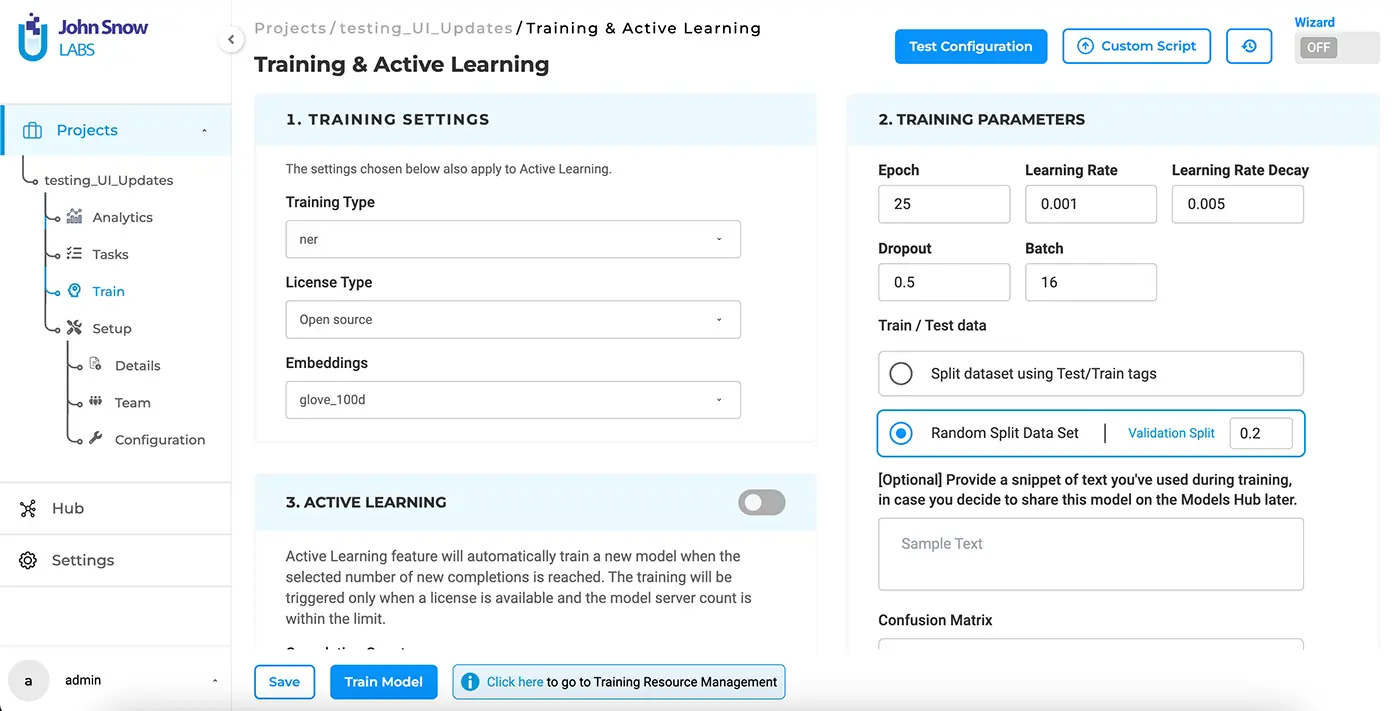

Enhanced UI for Model Training

NLP Lab 5.6 updates the user interface of the Training & Active Learning page for a better user experience and streamlined interactions. The buttons at the top of the page have been redesigned to be more compact, saving screen space so users can focus on the essential elements of the page without distraction. In addition, critical buttons such as “Train” and “Save” are now sticky at the bottom of the Train page, so they remain accessible regardless of the user’s scroll position. Removing the need to scroll makes the workflow more convenient and efficient. This change is part of the ongoing effort to adapt the NLP Lab interface to the evolving needs of its users.

Conclusions

NLP Lab 5.6 brings technical enhancements such as export filters by annotator and by predictions, an improved OCR server selection mechanism, and user interface refinements in the “Reuse Resources” and model training sections. These developments are designed to improve efficiency and accuracy in data handling while keeping the user experience streamlined. Continuously integrating user feedback into development reflects NLP Lab’s commitment to evolving with the needs of its users and reinforces its usefulness in complex NLP project workflows.

Getting Started is Easy

The NLP Lab is a free text annotation tool that can be deployed in a couple of clicks on the AWS, Azure, or OCI Marketplaces or installed on-premise with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install

Start your journey with NLP Lab and experience the future of data analysis and model training today!