Annotation Lab 1.6.0 includes several updates to task statuses.

For Project Owner, Manager, and Reviewer

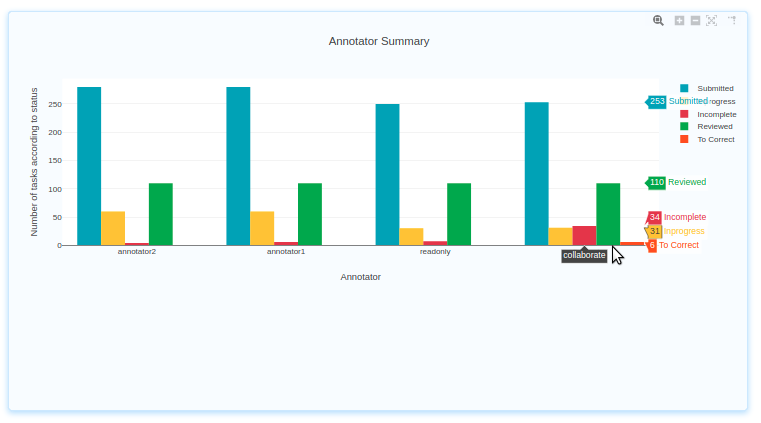

On the Analytics page and Tasks page, the Admin/Manager/Reviewer sees a general overview of the projects, which takes the following task-level statuses into account:

- Incomplete – Annotators have not started working on this task.

- In Progress – At least one annotator has not yet starred (marked as ground truth) a submitted completion.

- Submitted – All annotators assigned to the task have starred (marked as ground truth) one submitted completion.

- Reviewed – Reviewer has approved all starred submitted completions for the task.
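The rules above can be read as a small decision procedure. The following is a minimal sketch, not the product's implementation: the `Completion` record, its field names, and the `task_status` function are hypothetical and chosen only for illustration. It also applies the rule, stated in the notes of this section, that only completions from annotators assigned to the task are considered.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Completion:
    # Hypothetical record; field names are assumptions, not the real schema.
    annotator: str
    submitted: bool = False
    starred: bool = False    # marked as ground truth
    approved: bool = False   # approved by a Reviewer


def task_status(assigned: Iterable[str], completions: List[Completion]) -> str:
    """Task-level status as seen by the Admin/Manager/Reviewer."""
    assigned_set = set(assigned)
    # Ignore completions from users who are not assigned to the task.
    relevant = [c for c in completions if c.annotator in assigned_set]
    if not relevant:
        return "Incomplete"
    starred = [c for c in relevant if c.submitted and c.starred]
    annotators_done = {c.annotator for c in starred}
    if annotators_done != assigned_set:
        # At least one assigned annotator has no starred submitted completion.
        return "In Progress"
    if all(c.approved for c in starred):
        return "Reviewed"
    return "Submitted"
```

For example, with two assigned annotators where only one has starred a submitted completion, `task_status` returns `"In Progress"`; once both have done so, it returns `"Submitted"`.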

For Annotators

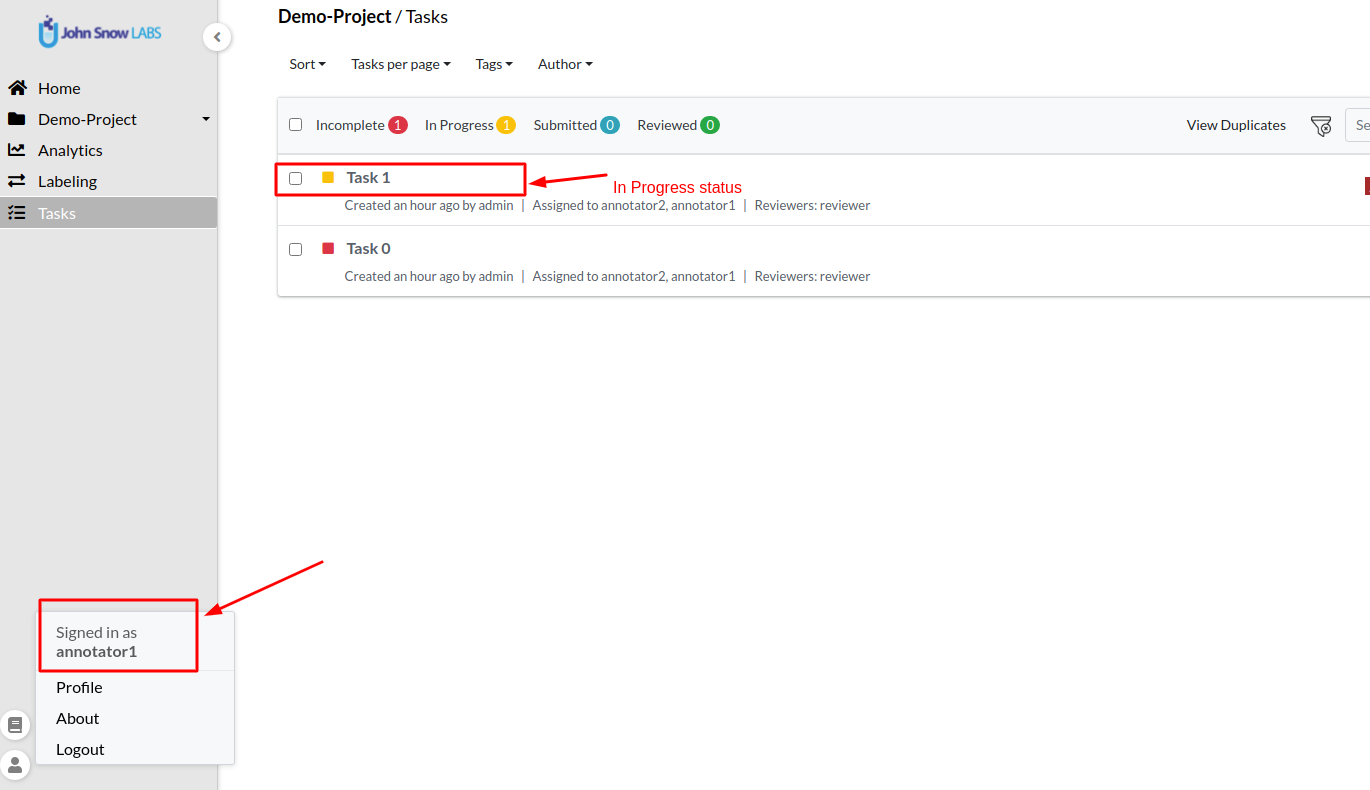

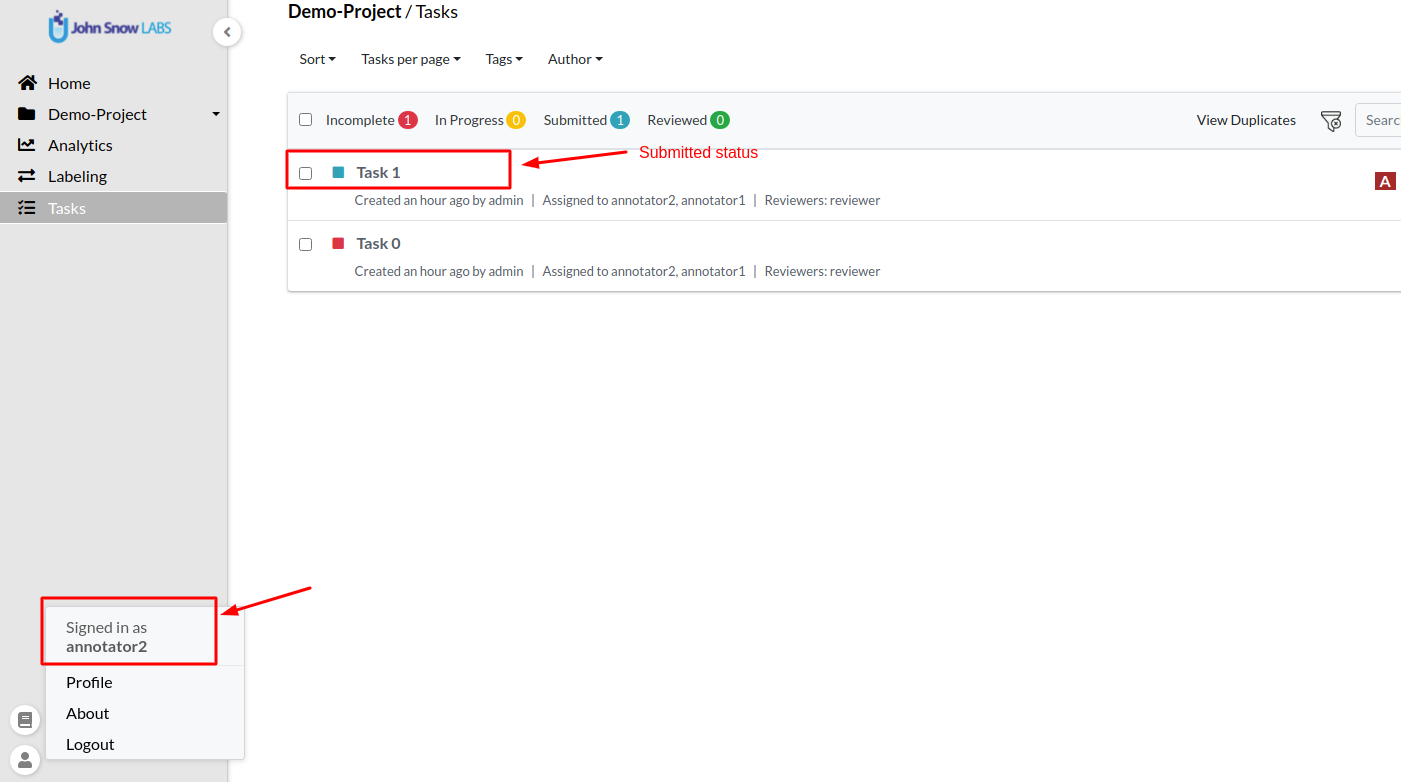

On the Annotator’s Task page, the task status is shown in the context of the logged-in Annotator’s work. For example, if the same task is assigned to two annotators, then:

- if annotator1 submits the task, he/she will see the task status as Submitted

- if annotator2 is still working and has not submitted the task, he/she will see the task status as In Progress

The following statuses are available on the Annotator’s view.

- Incomplete – Current logged-in annotator has not started working on this task.

- In Progress – At least one saved/submitted completion exists, but there is no starred submitted completion.

- Submitted – Annotator has at least one starred submitted completion.

- Reviewed – Reviewer has approved the starred submitted completion for the task.

- To Correct – Reviewer has rejected the submitted work. In this case, the star is removed from the reviewed completion. The annotator should start working on the task and resubmit.
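The annotator-side statuses can be sketched the same way. This is an illustrative sketch under stated assumptions: the `OwnCompletion` record and the `review` field are hypothetical, and the sketch assumes a task stays in To Correct until the annotator stars and submits a completion again, which the release notes do not spell out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class OwnCompletion:
    # Hypothetical record of one completion by the logged-in annotator.
    submitted: bool = False
    starred: bool = False          # marked as ground truth
    review: Optional[str] = None   # "approved", "rejected", or None


def annotator_status(own: List[OwnCompletion]) -> str:
    """Task status from the point of view of the logged-in annotator."""
    if not own:
        return "Incomplete"
    starred = [c for c in own if c.submitted and c.starred]
    if starred:
        if all(c.review == "approved" for c in starred):
            return "Reviewed"
        return "Submitted"
    # No starred submitted completion: either the work was rejected
    # (the star is removed on rejection) or it is still in progress.
    if any(c.review == "rejected" for c in own):
        return "To Correct"  # assumption: holds until a new starred submission
    return "In Progress"
```

Because the function only looks at the logged-in annotator's own completions, two annotators assigned to the same task can see different statuses for it.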

Note:

- The status of a task is maintained/available only for the annotators assigned to the task.

- The Project Owner completion state is not considered while deciding the status of a task.

When multiple Annotators are assigned to a task, the Reviewer will see the task as Submitted only when all of them have submitted and starred a completion. If any assigned Annotator has not submitted or has not starred a completion, the Reviewer will see the task as In Progress.

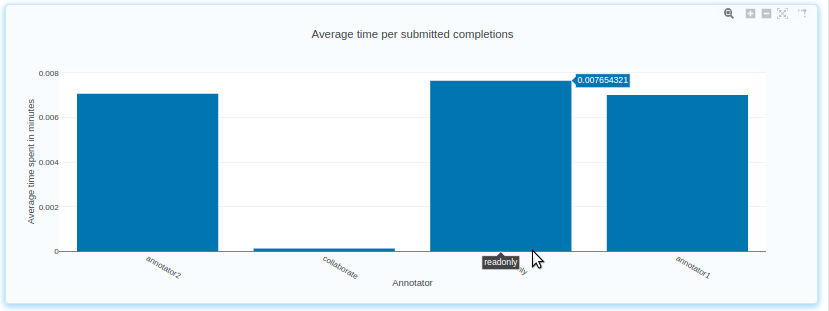

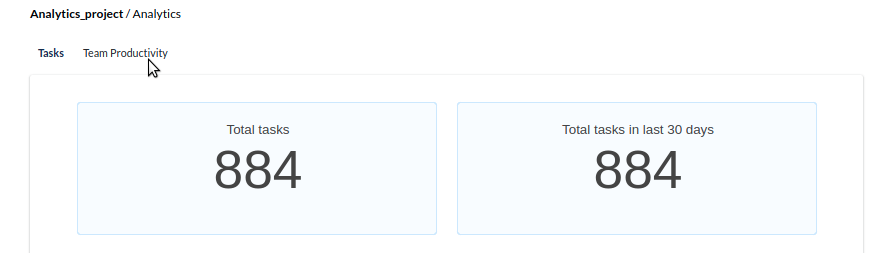

Productivity Stats improved

New charts that give information on the Annotators’ productivity have been added to the Analytics Dashboard.

The Weekly Completion Chart has been removed and the name of the Completions tab has been changed to Team Productivity.

Filter and Labeling Page

On the Tasks page, the Reviewer can now filter the Submitted tasks (tasks that have completions marked as ground truth) and start reviewing them. When using the Labeling screen, the next task served to the Reviewer is one that has at least one completion marked as ground truth and has not yet been reviewed. This is triggered when the Reviewer clicks the skip button on the Labeling screen for the current task.

When using the Labeling screen, the next task served to the annotator is one that is in the Incomplete or In Progress state for that annotator.

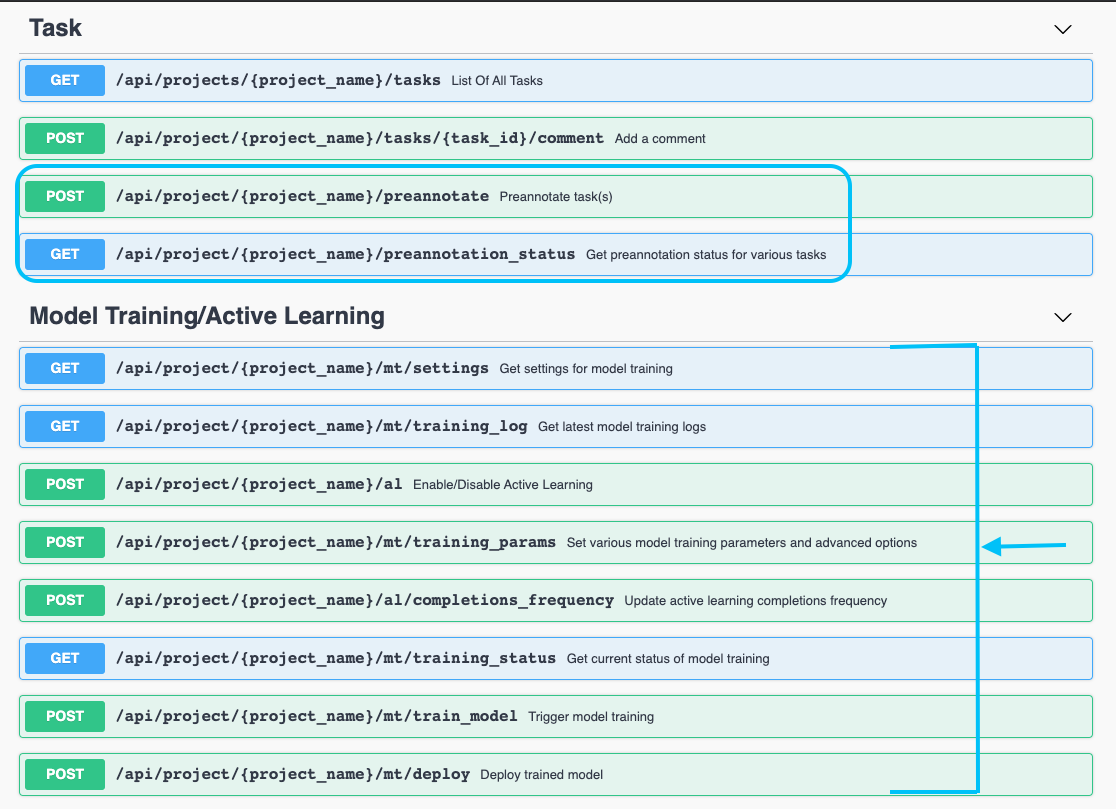
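The task-serving rules described above can be sketched as two simple selectors. This is only an illustration: the `Task` record, its fields, and the choice to serve tasks in list order are assumptions, since the release notes do not describe the ordering.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Task:
    # Hypothetical task record; field names are assumptions.
    id: int
    status: str                     # status as seen by the logged-in annotator
    has_ground_truth: bool = False  # at least one completion is starred
    reviewed: bool = False


def next_for_reviewer(tasks: Iterable[Task],
                      current_id: Optional[int] = None) -> Optional[Task]:
    """First task, other than the current one, with ground truth and no review yet."""
    for task in tasks:
        if task.id != current_id and task.has_ground_truth and not task.reviewed:
            return task
    return None


def next_for_annotator(tasks: Iterable[Task],
                       current_id: Optional[int] = None) -> Optional[Task]:
    """First task, other than the current one, still Incomplete or In Progress."""
    for task in tasks:
        if task.id != current_id and task.status in ("Incomplete", "In Progress"):
            return task
    return None
```

Both functions return `None` when no task qualifies, which a caller could treat as "nothing left to serve".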

Enhanced API

All model training-related operations can be performed using the APIs documented on the /swagger page. Through the API, it is possible to configure the training parameters, change the active learning completions frequency, run preannotations and deployments, check the status of training and preannotations, etc.

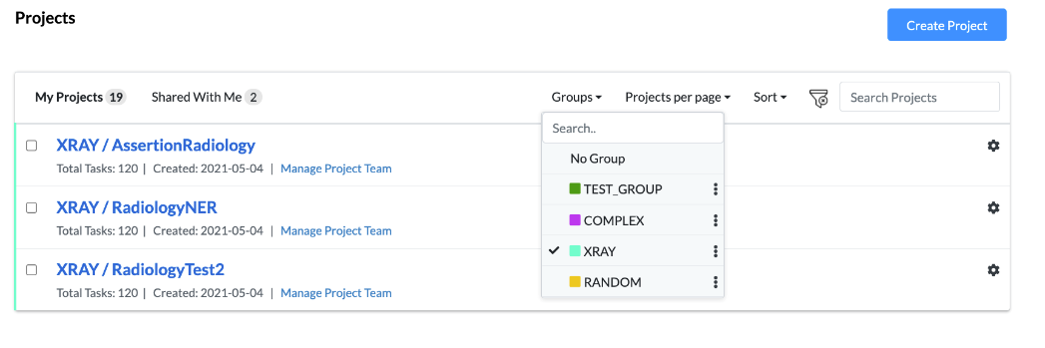

Group Projects

We have seen that an Annotation Lab server can grow to hundreds of projects, which creates the need to sort projects into user-defined groups. To help find related projects easily, this version of Annotation Lab introduces the concept of project grouping. It is possible to assign a project to an existing or new group, remove it from any group, filter projects by group, etc. Once a project is assigned to a group, the group name precedes the name of the project.

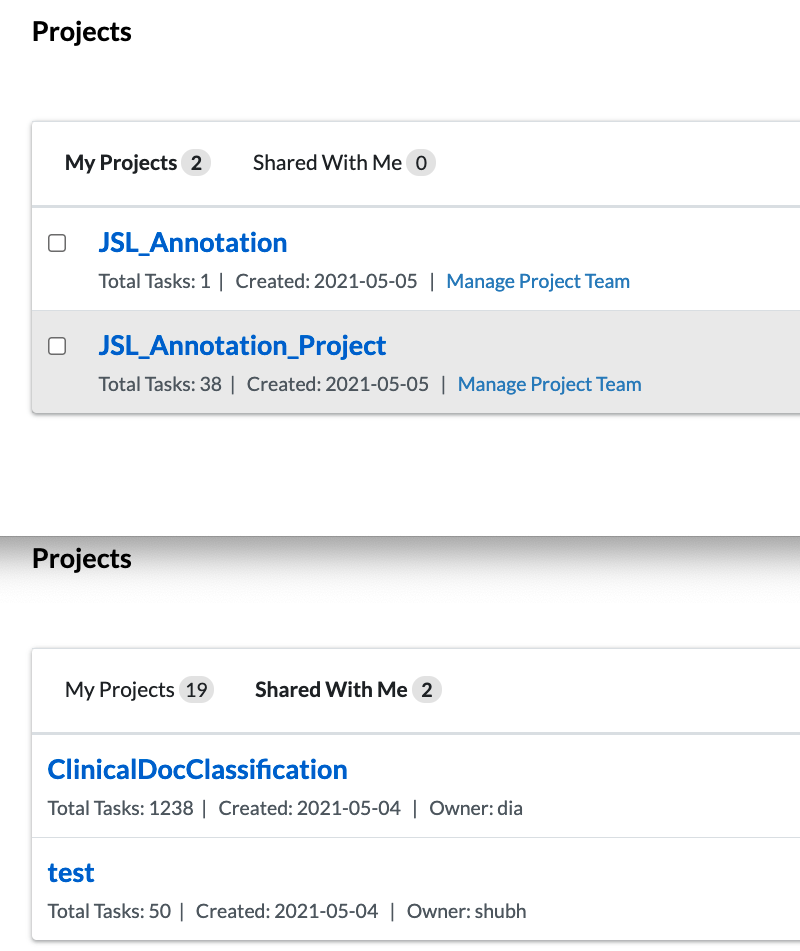
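As a rough illustration of grouping, the sketch below models a project with an optional group, a display name in which the group name comes before the project name, and a filter by group. The `Project` record, the function names, and the `/` separator are all hypothetical; the release notes do not state how the group name and project name are separated in the UI.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Project:
    # Hypothetical project record.
    name: str
    group: Optional[str] = None


def display_name(project: Project, sep: str = "/") -> str:
    """Project name with the group name in front (separator is an assumption)."""
    if project.group:
        return f"{project.group}{sep}{project.name}"
    return project.name


def filter_by_group(projects: List[Project], group: Optional[str]) -> List[Project]:
    """Projects in the given group; pass None to list ungrouped projects."""
    return [p for p in projects if p.group == group]
```

Removing a project from its group corresponds to setting `group` back to `None`, after which `display_name` returns the bare project name.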

The name of the Project Owner has been removed from the My Projects list because, for the currently logged-in user, it is always the same. The owner name is still shown in the Shared With Me tab.

Security and Bug fixes

We have upgraded multiple stacks used in the Annotation Lab setup to their latest versions to make the product more secure. With this upgrade, we have also fixed some UI issues on the Analytics page and Labeling page. With these fixes, the charts give more accurate results, and the counts on both the Analytics page and the Labeling page are shown correctly for the logged-in user.

The Project Switch dropdown in the navigation drawer on the left was not working properly in some cases. This issue is now fixed.