Contextual Entity Ruler in Spark NLP refines entity recognition by applying context-aware rules to detected entities. It updates entities using customizable patterns, regular expressions, and scope windows, which boosts accuracy by reducing false positives and adapting to niche contexts. Key features include token- or character-based windows, prefix/suffix rules, and include, exclude, and replace-label-only modes. Integrate it after NER to improve precision without retraining models.

Introduction

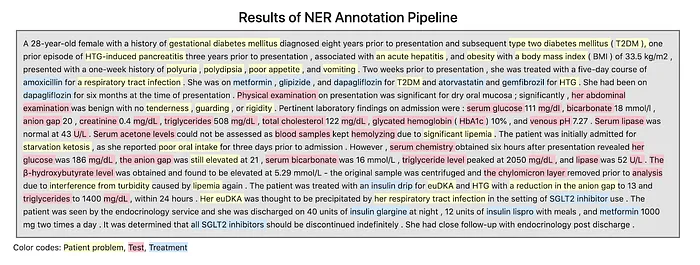

Named Entity Recognition (NER) models are powerful tools for extracting structured information from unstructured text. However, these models often struggle with contextual nuances, leading to false positives or missed entities. This is where ContextualEntityRuler comes in — a rule-based enhancement layer that refines NER results by incorporating contextual information.

In this blog post, we introduce ContextualEntityRuler, exploring how it improves entity recognition by leveraging predefined rules and flexible scope definitions. Whether you’re working with clinical NLP, financial documents, or any domain where accuracy matters, this approach can significantly enhance your entity extraction pipeline.

What is NER?

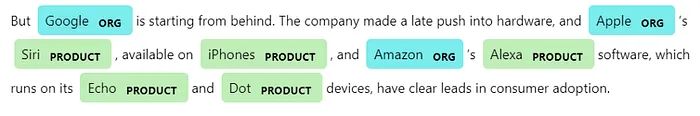

The blog post “Named Entity Recognition (NER) with BERT in Spark NLP” explains how to build a BERT-based NER model with the Spark NLP library.

NER is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc.

NER is used in many fields of Natural Language Processing (NLP), and it can help to answer many real-world questions, such as:

- Which companies were mentioned in the news article?

- Which tests were applied to a patient (clinical reports)?

- Is there a product name mentioned in the tweet?

What is Contextual Entity Ruler?

ContextualEntityRuler is an advanced module designed to enhance the accuracy of entity recognition by updating chunks based on contextual rules. These rules are defined using dictionaries and can include prefixes, suffixes, or contextual patterns within a specified window around the detected chunk.

This module is particularly useful for refining entity labels or content in domain-specific text processing tasks. It allows NLP systems to better understand the context of entities and make more accurate predictions.

When Should You Use Contextual Entity Ruler?

Use ContextualEntityRuler when:

- You need to fine-tune NER outputs based on domain-specific contexts.

- You want to handle ambiguous entities by considering surrounding words.

- You aim to improve the precision of entity recognition without retraining the model.

Flexible Context Windows

The module allows you to define precise “scope windows” around entities, giving you control over how much surrounding context to consider. You can specify:

- Window size before and after the entity

- Whether to measure the window in tokens or characters
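To see how the two window levels differ, here is a minimal pure-Python sketch that cuts the context around a chunk either as a number of characters or as a number of tokens. It is an illustration only, not ContextualEntityRuler's implementation: the helper `context_window` is hypothetical, and tokens are approximated by a whitespace split rather than the pipeline's `token` column. The sentence and the “diabetes mellitus” offsets (55 to 71) come from Use Case 1.

```python
def context_window(text, begin, end, window, level="char"):
    """Return the (left, right) context around the chunk text[begin:end + 1].

    Hypothetical helper for illustration: `end` is inclusive, as in Spark NLP
    chunk offsets, and `window` is a (before, after) pair like scopeWindow.
    """
    before, after = window
    left_text, right_text = text[:begin], text[end + 1:]
    if level == "char":
        # At most `before` characters to the left and `after` to the right.
        return left_text[max(0, len(left_text) - before):], right_text[:after]
    if level == "token":
        # Simplified tokenization: whitespace split (a real pipeline uses its token column).
        left_tokens, right_tokens = left_text.split(), right_text.split()
        return (" ".join(left_tokens[max(0, len(left_tokens) - before):]),
                " ".join(right_tokens[:after]))
    raise ValueError("level must be 'char' or 'token'")


sentence = ("The patient is a 41 years old and has a history of the diabetes mellitus "
            "with complications in May, 2006 and went to an urgent care center.")
print(context_window(sentence, 55, 71, (3, 3), level="token"))
# -> ('history of the', 'with complications in')
print(context_window(sentence, 55, 71, (15, 15), level="char"))
# -> ('history of the ', ' with complicat')
```

The character window cuts “complications” in half, which suggests why a token-level window can be the more predictable choice for multi-word patterns such as “with complications”.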

Multiple Pattern Matching Options

ContextualEntityRuler supports various ways to define matching patterns:

- Simple text patterns (prefixes and suffixes)

- Regular expressions

- Entity type matching
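The three kinds of patterns can be pictured as alternative tests on the context that follows a chunk (the prefix side is symmetric). The sketch below is a hypothetical, simplified matcher written against the rule keys used in this post; it treats any single matching pattern, regex, or entity label as sufficient, which is an assumption, and it is not the library's matching logic.

```python
import re


def suffix_matches(right_context, right_entities, rule, case_sensitive=False):
    """Hypothetical check of a rule's suffix conditions.

    right_context: text inside the scope window after the chunk.
    right_entities: labels of other NER chunks found inside that window.
    Any one matching pattern, regex, or entity label counts as a match.
    """
    flags = 0 if case_sensitive else re.IGNORECASE
    context = right_context if case_sensitive else right_context.lower()
    # 1) Plain text patterns: substring search.
    for pattern in rule.get("suffixPatterns", []):
        if (pattern if case_sensitive else pattern.lower()) in context:
            return True
    # 2) Regular expressions: searched anywhere in the window.
    for regex in rule.get("suffixRegexes", []):
        if re.search(regex, right_context, flags):
            return True
    # 3) Entity types: another chunk with one of these labels sits in the window.
    return any(label in rule.get("suffixEntities", []) for label in right_entities)


rule = {"suffixPatterns": ["with complications"], "suffixRegexes": [r"\d{4}"]}
print(suffix_matches(" With Complications in", [], rule))  # -> True
print(suffix_matches(", 2006 and", [], rule))               # -> True
```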

Customizable Behavior

The module offers several configuration options:

- Allow or restrict punctuation between patterns and entities

- Permit or forbid tokens between patterns and entities

- Different modes of operation (include, exclude, or label-only replacement)
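One way to picture the two “in between” options is as a check on the text that separates a pattern from the entity. The function `gap_allowed` below is a hypothetical interpretation in plain Python, not part of Spark NLP, and the library's exact semantics may differ: here, punctuation in the gap requires `setAllowPunctuationInBetween(True)`, and word tokens in the gap require `setAllowTokensInBetween(True)`.

```python
import string


def gap_allowed(gap, allow_tokens_in_between, allow_punctuation_in_between):
    """Hypothetical: may `gap` (text between a pattern and the entity) separate them?"""
    has_punct = any(ch in string.punctuation for ch in gap)
    # A "token" here is any whitespace-separated piece that is not pure punctuation.
    has_tokens = any(piece.strip(string.punctuation) for piece in gap.split())
    if has_punct and not allow_punctuation_in_between:
        return False
    if has_tokens and not allow_tokens_in_between:
        return False
    return True  # an empty or whitespace-only gap is always fine


print(gap_allowed(", ", allow_tokens_in_between=False, allow_punctuation_in_between=True))
# -> True
print(gap_allowed(", zip code ", allow_tokens_in_between=True, allow_punctuation_in_between=False))
# -> False
```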

Example Rules

```python
rules = [
    {
        "entity": "Age",                              # label of the NER chunks this rule applies to
        "scopeWindow": [15, 15],                      # context size before and after the chunk
        "scopeWindowLevel": "char",                   # or "token"
        "prefixPatterns": ["pattern1", "pattern2"],
        "suffixPatterns": ["pattern1", "pattern2"],
        "prefixRegexes": ["regex1", "regex2"],
        "suffixRegexes": ["regex1", "regex2"],
        "prefixEntities": ["entity1", "entity2"],
        "suffixEntities": ["entity1", "entity2"],
        "replaceEntity": "new label",                 # label assigned to matched chunks
        "mode": "exclude",                            # or "include", "replace_label_only"
    }
]
```
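Because rules are plain Python dictionaries, a misspelled key such as `suffixPattern` instead of `suffixPatterns` is easy to miss. A small pre-flight check before calling `setRules` can help; the helper below is hypothetical and not part of Spark NLP. Its key set is taken from this template plus `regexInBetween` (used in Use Case 2) and may not be exhaustive, and treating `entity` as required is an assumption based on every rule in this post.

```python
ALLOWED_KEYS = {
    "entity", "scopeWindow", "scopeWindowLevel",
    "prefixPatterns", "suffixPatterns", "prefixRegexes", "suffixRegexes",
    "prefixEntities", "suffixEntities", "regexInBetween",
    "replaceEntity", "mode",
}
ALLOWED_MODES = {"include", "exclude", "replace_label_only"}
ALLOWED_LEVELS = {"token", "char"}


def check_rule(rule):
    """Hypothetical pre-flight check: return a list of problems (empty if none)."""
    problems = []
    unknown = set(rule) - ALLOWED_KEYS
    if unknown:
        problems.append(f"unknown keys: {sorted(unknown)}")
    if "entity" not in rule:
        problems.append("missing 'entity'")
    if "mode" in rule and rule["mode"] not in ALLOWED_MODES:
        problems.append(f"bad mode: {rule['mode']!r}")
    if "scopeWindowLevel" in rule and rule["scopeWindowLevel"] not in ALLOWED_LEVELS:
        problems.append(f"bad scopeWindowLevel: {rule['scopeWindowLevel']!r}")
    if "scopeWindow" in rule:
        window = rule["scopeWindow"]
        if len(window) != 2 or not all(isinstance(n, int) and n >= 0 for n in window):
            problems.append("scopeWindow must be a pair of non-negative ints")
    return problems


template = {"entity": "Age", "scopeWindow": [15, 15], "scopeWindowLevel": "char",
            "suffixPatterns": ["years old"], "replaceEntity": "Modified_Age",
            "mode": "exclude"}
print(check_rule(template))  # -> []
print(check_rule({"entity": "Age", "suffixPattern": ["years old"]}))
# -> ["unknown keys: ['suffixPattern']"]
```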

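```python
from sparknlp_jsl.annotator import ContextualEntityRuler

# Refine the NER chunks using the contextual rules defined above.
contextual_entity_ruler = ContextualEntityRuler() \
    .setInputCols(["sentence", "token", "ner_chunks"]) \
    .setOutputCol("ruled_ner_chunks") \
    .setRules(rules) \
    .setCaseSensitive(False) \
    .setDropEmptyChunks(True) \
    .setAllowTokensInBetween(True) \
    .setAllowPunctuationInBetween(True)
```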

Example Pipeline

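```python
from pyspark.ml import Pipeline
from sparknlp.base import DocumentAssembler
from sparknlp.annotator import SentenceDetector, Tokenizer, WordEmbeddingsModel
from sparknlp_jsl.annotator import MedicalNerModel, NerConverterInternal, ContextualEntityRuler

# Assumes `spark` is a session started with a valid Spark NLP for Healthcare license,
# `rules` is a list of rule dicts (see the use cases below), and `data` is a
# DataFrame with a string column named "text".

documentAssembler = DocumentAssembler() \
    .setInputCol("text") \
    .setOutputCol("document")

sentenceDetector = SentenceDetector() \
    .setInputCols(["document"]) \
    .setOutputCol("sentence")

tokenizer = Tokenizer() \
    .setInputCols(["sentence"]) \
    .setOutputCol("token")

word_embeddings = WordEmbeddingsModel.pretrained("embeddings_clinical", "en", "clinical/models") \
    .setInputCols(["sentence", "token"]) \
    .setOutputCol("embeddings")

jsl_ner = MedicalNerModel.pretrained("ner_jsl", "en", "clinical/models") \
    .setInputCols(["sentence", "token", "embeddings"]) \
    .setOutputCol("jsl_ner")

# Convert the token-level NER tags into entity chunks.
jsl_ner_converter = NerConverterInternal() \
    .setInputCols(["sentence", "token", "jsl_ner"]) \
    .setOutputCol("ner_chunks")

# Refine the chunks with the contextual rules.
contextual_entity_ruler = ContextualEntityRuler() \
    .setInputCols(["sentence", "token", "ner_chunks"]) \
    .setOutputCol("ruled_ner_chunks") \
    .setRules(rules) \
    .setCaseSensitive(False) \
    .setDropEmptyChunks(True) \
    .setAllowPunctuationInBetween(True)

ruler_pipeline = Pipeline(stages=[
    documentAssembler,
    sentenceDetector,
    tokenizer,
    word_embeddings,
    jsl_ner,
    jsl_ner_converter,
    contextual_entity_ruler,
])

# The stages are pretrained or rule-based, so fitting on an empty DataFrame is enough.
empty_data = spark.createDataFrame([[""]]).toDF("text")
ruler_model = ruler_pipeline.fit(empty_data)

ruler_result = ruler_model.transform(data)
ruler_result.select("ruled_ner_chunks").show(truncate=False)
```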

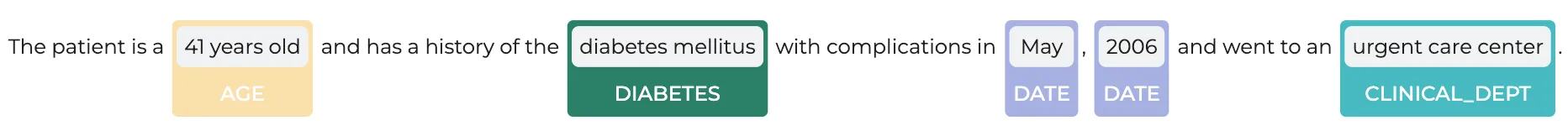

Use Case 1: Include and Exclude Modes

Given the following text:

The patient is a 41 years old and has a history of the diabetes mellitus with complications in May, 2006 and went to an urgent care center.

The initial NER model detects these chunks:

```
+-------------+-----+---+------------------+
|entity       |begin|end|ner_chunks_result |
+-------------+-----+---+------------------+
|Age          |17   |28 |41 years old      |
|Diabetes     |55   |71 |diabetes mellitus |
|Date         |95   |97 |May               |
|Date         |100  |103|2006              |
|Clinical_Dept|120  |137|urgent care center|
+-------------+-----+---+------------------+
```
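The `begin` and `end` columns are character offsets into the input text, and `end` is inclusive (“41 years old” spans 17 to 28, i.e. 12 characters), so in Python a chunk's text is `text[begin:end + 1]`. A quick check against the sentence above:

```python
text = ("The patient is a 41 years old and has a history of the diabetes mellitus "
        "with complications in May, 2006 and went to an urgent care center.")

# (entity, begin, end) triples copied from the table above; `end` is inclusive.
chunks = [("Age", 17, 28), ("Diabetes", 55, 71), ("Date", 95, 97),
          ("Date", 100, 103), ("Clinical_Dept", 120, 137)]

for entity, begin, end in chunks:
    print(f"{entity:<14}{begin:>4}{end:>4}  {text[begin:end + 1]}")
```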

We aim to adjust the NER chunks with the following rules:

```python
rules = [
    {
        "entity": "Age",
        "scopeWindow": [15, 15],
        "scopeWindowLevel": "char",
        "suffixPatterns": ["years old", "year old", "months"],
        "replaceEntity": "Modified_Age",
        "mode": "exclude",
    },
    {
        "entity": "Diabetes",
        "scopeWindow": [3, 3],
        "scopeWindowLevel": "token",
        "suffixPatterns": ["with complications"],
        "replaceEntity": "Modified_Diabetes",
        "mode": "include",
    },
    {
        "entity": "Date",
        "suffixRegexes": [r"\d{4}"],  # raw string avoids Python's invalid-escape warning for \d
        "replaceEntity": "Modified_Date",
        "mode": "include",
    },
]
```

Explanation of Rules

1- Age Entity (Exclude Mode):

- Goal: Exclude the “years old” suffix while retaining the numeric value.

- How: The rule sets a character-level scope window of 15 characters before and after the entity and checks for the suffix patterns “years old”, “year old”, and “months”; in exclude mode, the matched suffix is removed from the chunk.

- Effect: Only the number is retained, and the label is updated to “Modified_Age”.

2- Diabetes Entity (Include Mode):

- Goal: Include additional context if it matches the pattern “with complications”.

- How: A token-level scope window of 3 tokens before and after the entity is used. If the suffix “with complications” is detected in that window, it is included in the chunk.

- Effect: The chunk is expanded, and the label is updated to “Modified_Diabetes”.

3- Date Entity (Include Mode with Regex):

- Goal: Combine adjacent Date entities if a 4-digit year is detected.

- How: Using the suffix regular expression \d{4}, the rule extends the month chunk to include the year that follows it.

- Effect: The combined date is labeled as “Modified_Date”.
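The effect of the first two rules on the offsets can be reproduced with a small pure-Python sketch: exclude mode trims a matched suffix pattern off the end of the chunk, and include mode extends the chunk over a matching suffix that follows it. The functions `exclude_suffix` and `include_suffix` are hypothetical simplifications that ignore scope windows and the in-between options; they are not how ContextualEntityRuler is implemented.

```python
text = ("The patient is a 41 years old and has a history of the diabetes mellitus "
        "with complications in May, 2006 and went to an urgent care center.")


def exclude_suffix(text, begin, end, patterns):
    """Exclude mode (sketch): cut a suffix pattern off the end of the chunk."""
    chunk = text[begin:end + 1].lower()
    for pattern in patterns:
        if chunk.endswith(pattern.lower()):
            new_end = end - len(pattern)
            # Also trim whitespace left between the kept text and the removed suffix.
            while new_end >= begin and text[new_end].isspace():
                new_end -= 1
            return begin, new_end
    return begin, end


def include_suffix(text, begin, end, patterns):
    """Include mode (sketch): extend the chunk over a suffix pattern right after it."""
    following = text[end + 1:]
    stripped = following.lstrip()
    gap = len(following) - len(stripped)  # whitespace between chunk and pattern
    for pattern in patterns:
        if stripped.lower().startswith(pattern.lower()):
            return begin, end + gap + len(pattern)
    return begin, end


begin, end = exclude_suffix(text, 17, 28, ["years old", "year old", "months"])
print(begin, end, repr(text[begin:end + 1]))  # -> 17 18 '41'
begin, end = include_suffix(text, 55, 71, ["with complications"])
print(begin, end, repr(text[begin:end + 1]))  # -> 55 90 'diabetes mellitus with complications'
```

If a pattern covered the entire chunk, `new_end` would drop below `begin` and leave an empty chunk; that is presumably the case `setDropEmptyChunks(True)` is meant to clean up.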

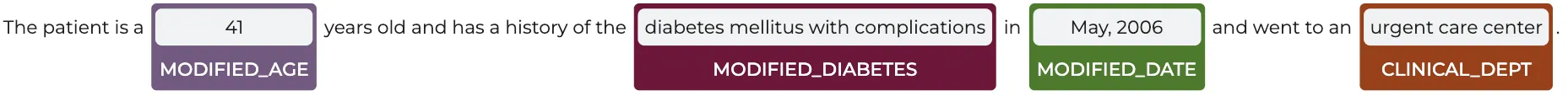

You can see the results before and after applying the rules. Before, the chunks are the initial NER output listed above; after, they are:

```
+-----------------+-----+---+------------------------------------+
|entity           |begin|end|ruled_ner_chunks_result             |
+-----------------+-----+---+------------------------------------+
|Modified_Age     |17   |18 |41                                  |
|Modified_Diabetes|55   |90 |diabetes mellitus with complications|
|Modified_Date    |95   |103|May, 2006                           |
|Clinical_Dept    |120  |137|urgent care center                  |
+-----------------+-----+---+------------------------------------+
```

Analysis and Benefits

- Age: The suffix “years old” is removed, leaving just the number, which is easier to use in downstream tasks.

- Diabetes: Context is expanded to capture “with complications,” providing more accurate medical insight.

- Date: Merging month and year into one entity creates a complete date reference, useful for temporal analysis.
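The Date rule can be sketched the same way. Here a Date chunk is extended when the text right after it matches the suffix regex, and any chunk swallowed by the extended span (the separate “2006” Date) is dropped, reproducing the “May, 2006” result. The function is hypothetical; the 15-character look-ahead is an assumed value because the rule in this post sets no `scopeWindow`, and the regex is written as a raw string so that `\d` reaches `re` without an invalid-escape warning.

```python
import re

text = ("The patient is a 41 years old and has a history of the diabetes mellitus "
        "with complications in May, 2006 and went to an urgent care center.")
chunks = [("Date", 95, 97), ("Date", 100, 103), ("Clinical_Dept", 120, 137)]


def include_suffix_regex(text, chunks, entity, regex, new_label, window=15):
    """Hypothetical sketch of include mode driven by a suffix regex.

    chunks: (label, begin, end) triples sorted by begin, with inclusive `end`.
    window: character-level look-ahead (an assumed value, see above).
    """
    merged, covered_until = [], -1
    for label, begin, end in chunks:
        if end <= covered_until:
            continue  # this chunk was absorbed by an earlier extended chunk
        if label == entity:
            right = text[end + 1:end + 1 + window]
            # Only non-word characters (such as ", ") may precede the regex match.
            match = re.match(r"\W*(?:" + regex + ")", right)
            if match:
                end += match.end()
                label = new_label
                covered_until = end
        merged.append((label, begin, end))
    return merged


print(include_suffix_regex(text, chunks, "Date", r"\d{4}", "Modified_Date"))
# -> [('Modified_Date', 95, 103), ('Clinical_Dept', 120, 137)]
```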

Use Case 2: Replace Label Only and Entity Support

Given the text:

Los Angeles, zip code 90001, is located in the South Los Angeles region of the city.

The initial NER model detects these chunks:

```
+--------+-----+---+-----------------+
|entity  |begin|end|ner_chunks_result|
+--------+-----+---+-----------------+
|LOCATION|0    |10 |Los Angeles      |
|CONTACT |22   |26 |90001            |
|LOCATION|47   |63 |South Los Angeles|
+--------+-----+---+-----------------+
```

Scenario 1: Replace Label Only

```python
rules = [
    {
        "entity": "CONTACT",
        "scopeWindow": [6, 6],
        "scopeWindowLevel": "token",
        "prefixEntities": ["LOCATION"],
        "replaceEntity": "ZIP_CODE",
        "mode": "replace_label_only",
    }
]
```

Explanation

- Goal: Change the label of “CONTACT” to “ZIP_CODE” without altering the text.

- How: Using a 6-token window before and after the chunk, the rule checks whether a “LOCATION” entity precedes the “CONTACT” entity.

- Effect: Only the entity label is modified.
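A hypothetical sketch of `replace_label_only` for this rule: a CONTACT chunk is relabelled when a LOCATION chunk ends within 6 tokens to its left, and its offsets and text are left untouched. Tokenization here is a simple regex that separates words from punctuation, which is an assumption; the real annotator works on the pipeline's `token` column.

```python
import re

text = "Los Angeles, zip code 90001, is located in the South Los Angeles region of the city."
chunks = [("LOCATION", 0, 10), ("CONTACT", 22, 26), ("LOCATION", 47, 63)]


def token_spans(text):
    """Simplified tokenizer: words and single punctuation marks, inclusive ends."""
    return [(m.start(), m.end() - 1) for m in re.finditer(r"\w+|[^\w\s]", text)]


def replace_label_only(text, chunks, entity, prefix_entities, new_label, before=6):
    """Hypothetical sketch: relabel `entity` chunks that have a prefix entity
    ending within `before` tokens to their left. Offsets and text are unchanged."""
    spans = token_spans(text)
    result = []
    for label, begin, end in chunks:
        if label == entity:
            # Index of the chunk's first token, then the start of the left window.
            first = next(i for i, (b, _) in enumerate(spans) if b >= begin)
            window_start = max(0, first - before)
            for other, _, other_end in chunks:
                # Index of the last token of the candidate prefix chunk.
                other_last = max((i for i, (_, e) in enumerate(spans) if e <= other_end),
                                 default=-1)
                if (other in prefix_entities and other_end < begin
                        and other_last >= window_start):
                    label = new_label
                    break
        result.append((label, begin, end))
    return result


print(replace_label_only(text, chunks, "CONTACT", ["LOCATION"], "ZIP_CODE"))
# -> [('LOCATION', 0, 10), ('ZIP_CODE', 22, 26), ('LOCATION', 47, 63)]
```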

You can see the results before and after applying the rules. Before, the chunks are the initial NER output listed above; after, they are:

```
+--------+-----+---+-----------------------+
|entity  |begin|end|ruled_ner_chunks_result|
+--------+-----+---+-----------------------+
|LOCATION|0    |10 |Los Angeles            |
|ZIP_CODE|22   |26 |90001                  |
|LOCATION|47   |63 |South Los Angeles      |
+--------+-----+---+-----------------------+
```

Scenario 2: Entity Support

```python
rules = [
    {
        "entity": "LOCATION",
        "scopeWindow": [6, 6],
        "scopeWindowLevel": "token",
        "regexInBetween": "zip",
        "suffixEntities": ["CONTACT", "IDNUM"],
        "replaceEntity": "REPLACED_LOC",
        "mode": "include",
    }
]
```

Explanation

- Goal: Merge “LOCATION” and “CONTACT” entities if they are connected by the word “zip”.

- How: Within a 6-token window before and after the chunk, the rule looks for text matching “zip” between a “LOCATION” entity and a following “CONTACT” (or “IDNUM”) entity.

- Effect: The entities are merged and labeled as “REPLACED_LOC”.
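The Scenario 2 rule can be sketched in the same style. The function below is hypothetical: it merges a LOCATION chunk with the next CONTACT or IDNUM chunk when the gap between them is at most 6 whitespace-separated tokens and matches `regexInBetween`. Matching is case-insensitive here to mirror `setCaseSensitive(False)` in the pipeline; the window and token counting are simplifications, not the annotator's logic.

```python
import re

text = "Los Angeles, zip code 90001, is located in the South Los Angeles region of the city."
chunks = [("LOCATION", 0, 10), ("CONTACT", 22, 26), ("LOCATION", 47, 63)]


def include_suffix_entity(text, chunks, entity, suffix_entities, regex_in_between,
                          new_label, after=6):
    """Hypothetical sketch of include mode with suffixEntities + regexInBetween.

    A chunk labelled `entity` is merged with a later chunk whose label is in
    `suffix_entities` if the text between them has at most `after`
    whitespace-separated tokens and matches `regex_in_between`.
    """
    result, absorbed = [], set()
    for i, (label, begin, end) in enumerate(chunks):
        if i in absorbed:
            continue  # already merged into an earlier chunk
        if label == entity:
            for j in range(i + 1, len(chunks)):
                other, other_begin, other_end = chunks[j]
                gap = text[end + 1:other_begin]
                if (other in suffix_entities
                        and len(gap.split()) <= after
                        and re.search(regex_in_between, gap, re.IGNORECASE)):
                    label, end = new_label, other_end
                    absorbed.add(j)
                    break
        result.append((label, begin, end))
    return result


print(include_suffix_entity(text, chunks, "LOCATION", ["CONTACT", "IDNUM"], "zip", "REPLACED_LOC"))
# -> [('REPLACED_LOC', 0, 26), ('LOCATION', 47, 63)]
```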

You can see the results before and after applying the rules. Before, the chunks are the initial NER output listed above; after, they are:

```
+------------+-----+---+---------------------------+
|entity      |begin|end|ruled_ner_chunks_result    |
+------------+-----+---+---------------------------+
|REPLACED_LOC|0    |26 |Los Angeles, zip code 90001|
|LOCATION    |47   |63 |South Los Angeles          |
+------------+-----+---+---------------------------+
```

Analysis and Benefits

- Replace Label Only: In Scenario 1, the label is changed without modifying the text, preserving the integrity of the chunk while refining the entity type.

- Entity Support: In Scenario 2, merging related entities provides more context and continuity, enhancing the quality of location data.

Conclusion

ContextualEntityRuler is a powerful module that empowers NLP systems to perform context-aware entity recognition and refinement. It is particularly useful when you need to fine-tune NER outputs based on domain-specific contexts, handle ambiguous entities by considering surrounding words, or improve the precision of entity recognition without retraining the model. By allowing detailed contextual rules and flexible matching patterns, it enhances accuracy and precision, especially in domain-specific applications.

🚀 Integrate ContextualEntityRuler into your NLP pipeline to take your entity recognition to the next level!

📌 Explore the full implementation here: 🔗 Tutorial Notebook