Clinicians are under time pressure due to increasing demand for medical care, information overload, and administrative regulations. A study conducted by Mayo Clinic found “45 percent of respondents experienced at least one major symptom of burnout” [1], and that was before COVID. Research conducted by Johns Hopkins, also pre-COVID, indicates that medical errors are the third leading cause of death in the U.S. [2]

According to the Association of American Medical Colleges, the mounting physician shortage could exceed 100,000 by 2034 [3]. With the increasing demand for medical care due to population demographics, supply-side shortages will exacerbate the problems of burnout and medical errors. These seemingly intractable problems give us purpose.

Medical care is knowledge-intensive and complex. Data are torrential. Data scientists who once advocated turning data into information are now transforming information into knowledge.

Consider: a computer can read 1,000 times faster than a human. What happens when computer Reading Comprehension and Knowledge Retention are improved? Artificial Intelligence and Machine Learning technologies have a new purpose: to provide clinicians with more time to practice medicine. Only in science fiction novels will computers be licensed to practice medicine. The role of computers in medical care is to help clinicians learn faster and become more confident in their decision-making.

It’s not simply that computers can read faster than humans; this speed advantage is leveraged by rereading documents as new knowledge is acquired. This multi-pass process of reading and rereading documents provides a deeper understanding of complex relationships. Importantly, misinformation and disinformation can be identified and purged from the knowledge computers share with those licensed to practice medicine.

At the risk of overstating this, we have no choice but to turn to computers to help reduce the information overload and improve access to reliable knowledge. This is not the stuff of science fiction. It is very much science non-fiction.

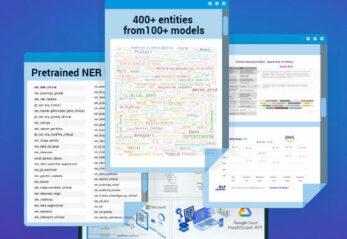

Let’s start with NLP, Natural Language Processing. This is a machine learning technology that every computer user has experienced in the form of a spell-checker. We rely on our computers to prevent spelling mistakes, but NLP has advanced far beyond this simple task. It can determine the meaning of words within the context of a subject; for example, when “red” is not a color but rather a reference to a political party. For NLP to be useful to healthcare professionals, it is also essential that computers understand the scientific terms used in medicine. Most importantly, the relationships among symptoms, procedures, diagnoses, risk factors, medications, and the body part affected by an acute injury or chronic disease must be identified in a textual taxonomy or ontology that the computer must learn.

NLP is used to improve reading comprehension. It is also being inserted into the communications process of listening and talking to humans. NLP is a technology that is capable of extracting knowledge from millions of pages of textual matter. It is the first step in developing computer brainpower. Next, the knowledge extracted from textual matter must be retained and made accessible to humans. This machine-generated knowledge is best stored in a Knowledge Graph, a database that preserves every relationship, creating a network not unlike the neural network of the human brain. Knowledge Graphs are powerful for conducting investigative analysis: tracing the path of linked data that leads to the root cause or onset of a health concern.
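To make the idea of tracing a path through linked data concrete, here is a minimal sketch in Python. It is not how any particular Knowledge Graph product works: the triples, the relation names, and the helper functions `build_graph` and `trace_path` are all hypothetical, and the clinical links are illustrative only. It stores relationships as subject-relation-object triples and uses a breadth-first search to walk from a symptom back to a possible root cause.

```python
from collections import deque

# Hypothetical toy data: each edge is (subject, relation, object).
# These links are for illustration only and are not medical guidance.
TRIPLES = [
    ("fatigue", "symptom_of", "anemia"),
    ("anemia", "caused_by", "iron deficiency"),
    ("iron deficiency", "caused_by", "chronic blood loss"),
    ("fatigue", "symptom_of", "hypothyroidism"),
]


def build_graph(triples):
    """Index the triples by subject so every relationship is preserved."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
    return graph


def trace_path(graph, start, goal):
    """Breadth-first search; returns the hops from start to goal, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None


graph = build_graph(TRIPLES)
path = trace_path(graph, "fatigue", "chronic blood loss")
for subject, relation, obj in path:
    print(f"{subject} --{relation}--> {obj}")
```

A real Knowledge Graph would hold millions of such triples in a graph database rather than a Python dictionary, but the principle of following preserved relationships hop by hop is the same.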

The problem with Knowledge Graphs is they become “hairballs”: millions of dots with billions of connections. It is important to remember a Knowledge Graph is a database, the fuel needed to power applications. Those applications need to be designed with a clear purpose. This is the message I have attempted to convey. Technology must have a purpose. We have the technology necessary to improve computer Reading Comprehension and Knowledge Retention.

What comes next are time-saving applications. By my quick calculation, saving one hour a day of every physician’s time would be equivalent to adding 100,000 physicians to the workforce. In other words, this is how we solve the physician shortage.
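The article does not state the inputs behind this estimate, so the sketch below is only one way the arithmetic could work out. It assumes roughly 1,000,000 practicing physicians and a 10-hour working day; both figures are assumptions chosen for illustration, not numbers from the source, and the function name `equivalent_physicians` is hypothetical.

```python
# Assumptions (not from the article): about 1,000,000 practicing
# physicians, each working a 10-hour day.
ASSUMED_PHYSICIANS = 1_000_000
ASSUMED_HOURS_PER_DAY = 10
HOURS_SAVED_PER_DAY = 1  # the one hour a day named in the article


def equivalent_physicians(physicians, hours_per_day, hours_saved):
    """Hours freed across the workforce, expressed as full-time physicians."""
    return physicians * hours_saved / hours_per_day


added = equivalent_physicians(
    ASSUMED_PHYSICIANS, ASSUMED_HOURS_PER_DAY, HOURS_SAVED_PER_DAY
)
print(f"Equivalent added physicians: {added:,.0f}")
```

Under these assumptions the result is 100,000. A shorter assumed working day or a larger workforce would raise the figure, so the estimate is sensitive to both inputs.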

About the author

Richard Tanler is a new member of the John Snow Labs team. He has come up through the ranks of analyzing numbers. He was the founder of a successful Business Intelligence company and is the author of The Intranet Data Warehouse, published by John Wiley and Sons. This prescient work described how analytic-intensive applications were destined to be accessed with little more than a Web browser and linked to massive data warehouses.

His advice to scientists and engineers: “Simplify the Simple”.

References:

[1] https://www.mayoclinicproceedings.org/article/S0025-6196(18)30938-8/fulltext

[3] https://www.aamc.org/news-insights/press-releases/aamc-report-reinforces-mounting-physician-shortage