The new release comes with a redesign of important models and improved performance on financial NLP tasks.

New state-of-the-art embedding models

Current advances in NLP are built on top of vector representations of text, usually called embeddings. To let users apply, train, and fine-tune models for finance, Finance NLP is releasing two categories of embedding models: word embedding models, which create vector representations of words (or tokens), and sentence embedding models, which create vector representations of longer chunks of text (e.g., sentences, paragraphs, documents).

Word Embedding model

For word embedding models, we aimed to create fast, lightweight models that can be used for token classification tasks such as Named Entity Recognition (NER). The newly released model has 200 dimensions and was trained from scratch on a mix of financial and general texts, so it learns common English words while placing special focus on financial terms.

The architecture is based on Word2Vec, which offers a good tradeoff between representation capability and speed and allows fast, accurate models to be built on top of it (e.g., NER, Relation Extraction).
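Word2Vec learns one vector per vocabulary entry, so at inference time a word embedding is a table lookup. The release does not say which Word2Vec variant was used; the minimal sketch below assumes the skip-gram variant and only shows how its training examples are built: every word within a fixed window around a center word becomes a (center, context) pair that the model learns to predict. `skipgram_pairs` is a hypothetical helper, not part of Finance NLP.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Build (center, context) training pairs for a skip-gram Word2Vec model.

    Every token within `window` positions on either side of the center
    (excluding the center itself) becomes a context word for that center.
    """
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


# Toy financial sentence, already tokenized by whitespace.
tokens = "net income rose sharply".split()
for pair in skipgram_pairs(tokens, window=1):
    print(pair)
```

A real training run would feed millions of such pairs, typically with negative sampling, into a shallow network whose input weight matrix becomes the table of word vectors.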

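To use the model in Finance NLP, call the `pretrained` method of the corresponding annotator:

```python
from johnsnowlabs import nlp

# Load the 200-dimensional finance word embeddings from the Models Hub
word_embedding_model = (
    nlp.WordEmbeddingsModel.pretrained(
        "finance_word_embeddings", "en", "finance/models"
    )
    .setInputCols(["sentence", "token"])  # needs sentence and token annotations
    .setOutputCol("word_embedding")
)
```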

With the new word embedding model, we trained new models and improved existing ones:

- De-identification model: `finner_deid_sec_fe`
- NER models: `finner_sec_edgar_fe`, `finner_aspect_based_sentiment_fe`, `finner_financial_xlarge_fe`
- Assertion models: `finassertion_aspect_based_sentiment_md_fe`

The new models generally achieve similar or better performance than the existing ones, and they are built on new embeddings with better generalization capabilities. Some models improve by up to 15%, while others may score lower but should generalize better to different datasets.

Sentence Embedding model

For the sentence embedding model, we based our model on the BGE architecture and trained it from scratch on our own domain-specific datasets together with public general English datasets. The model maps sentences, paragraphs, and documents to 768-dimensional vectors and is designed to improve retrieval-augmented generation (RAG) applications.

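To use the model, load it with the corresponding annotator:

```python
from johnsnowlabs import nlp

# Load the 768-dimensional finance BGE sentence embeddings
sentence_embeddings = (
    nlp.BGEEmbeddings.pretrained(
        "finance_bge_base_embeddings", "en", "finance/models"
    )
    .setInputCols("document")  # one embedding per document annotation
    .setOutputCol("sentence_embeddings")
)
```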
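In a RAG system, these embeddings are typically used to retrieve the passages closest to a user query. Below is a minimal sketch of that retrieval step in plain Python, assuming cosine similarity as the scoring function (a common choice, not something the release specifies). Toy 3-dimensional vectors stand in for the model's 768-dimensional output, and `cosine` and `top_k` are hypothetical helpers, not Finance NLP APIs.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def top_k(query: List[float], corpus: Dict[str, List[float]], k: int = 5) -> List[Tuple[str, float]]:
    """Rank documents by cosine similarity to the query and keep the best k."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings standing in for real 768-dimensional vectors.
corpus = {
    "doc_dividends": [0.9, 0.1, 0.0],
    "doc_earnings": [0.7, 0.7, 0.1],
    "doc_weather": [0.0, 0.1, 0.9],
}
query = [1.0, 0.2, 0.0]
print(top_k(query, corpus, k=2))
```

In practice, the query and document vectors would come from the `sentence_embeddings` output column, and a vector index would replace this linear scan for large corpora.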

Using the new sentence embedding model, we improved the following models built on top of it:

- Entity linking (resolver) models: `finel_edgar_company_name_fe`, `finel_nasdaq_company_name_stock_screener_fe`, `finel_names2tickers_fe`, `finel_tickers2names`
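Embedding-based entity linking can be pictured as a nearest-neighbor search: a mention (e.g., a company name found by NER) is embedded, compared with the embeddings of a catalog of canonical entries, and mapped to the closest one. The sketch below illustrates only that general idea; it is not a description of how the `finel_*` models work internally. The catalog, toy vectors, `resolve` helper, and `min_similarity` threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple


def normalize(v: List[float]) -> List[float]:
    """Scale a non-zero vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def resolve(mention_vec: List[float],
            catalog: Dict[str, Tuple[str, List[float]]],
            min_similarity: float = 0.8) -> Optional[Tuple[str, str]]:
    """Return (canonical_name, code) of the most similar catalog entry,
    or None if no entry reaches `min_similarity`."""
    q = normalize(mention_vec)
    best, best_sim = None, -1.0
    for code, (name, vec) in catalog.items():
        sim = sum(a * b for a, b in zip(q, normalize(vec)))
        if sim > best_sim:
            best, best_sim = (name, code), sim
    return best if best_sim >= min_similarity else None


# Hypothetical catalog: ticker -> (canonical company name, toy embedding).
catalog = {
    "AAPL": ("Apple Inc.", [0.9, 0.1, 0.1]),
    "MSFT": ("Microsoft Corporation", [0.1, 0.9, 0.1]),
}
print(resolve([0.8, 0.2, 0.1], catalog))  # toy embedding of a mention such as "Apple"
print(resolve([0.0, 0.0, 1.0], catalog))  # unrelated mention, below the threshold
```

The threshold lets the linker abstain instead of forcing every mention onto some catalog entry.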

With better sentence embedding models, Finance practitioners, researchers, and developers can design better text classification, entity linking, and RAG systems.

To evaluate the sentence embedding model, we measured retrieval recall on the FiQA dataset by comparing the top 5 retrieved documents with the associated ones in the dataset. The model improved recall by 20% over the base model (not fine-tuned on domain-specific data) and by 300% over a domain-specific sentence-transformers model.
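Recall over the top 5 results (recall@5) can be computed per query and then averaged. Below is a minimal sketch, assuming each FiQA query comes with a set of relevant document IDs and that scores are averaged over queries (the release does not give the exact protocol). `recall_at_k`, `mean_recall_at_k`, and `relative_improvement` are hypothetical helpers, and the document IDs are made up. The last helper assumes the reported 20% and 300% figures are relative gains; under that reading, a 300% gain means the new recall is four times the baseline.

```python
from typing import List, Set, Tuple


def recall_at_k(retrieved: List[str], relevant: Set[str], k: int = 5) -> float:
    """Fraction of a query's relevant documents that appear in its top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs: List[Tuple[List[str], Set[str]]], k: int = 5) -> float:
    """Average recall@k over (ranked retrieved IDs, relevant IDs) pairs, one per query."""
    return sum(recall_at_k(retrieved, relevant, k) for retrieved, relevant in runs) / len(runs)


def relative_improvement(new: float, baseline: float) -> float:
    """Relative gain in percent (assumes baseline > 0)."""
    return (new - baseline) / baseline * 100.0


# Hypothetical rankings for two queries (made-up document IDs).
runs = [
    (["d1", "d7", "d3", "d9", "d4", "d2"], {"d1", "d2"}),  # d2 is ranked 6th, outside the top 5
    (["d5", "d6", "d8", "d2", "d0"], {"d8"}),
]
print(mean_recall_at_k(runs, k=5))
print(relative_improvement(0.40, 0.10))
```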

Conclusion

Finance NLP 2.0 makes it easier to train specialized models on top of performant embedding models, which benefits practitioners and data scientists working in finance. All models are available in the Spark NLP Models Hub.

We plan to release new specialized models in future releases, so stay tuned.

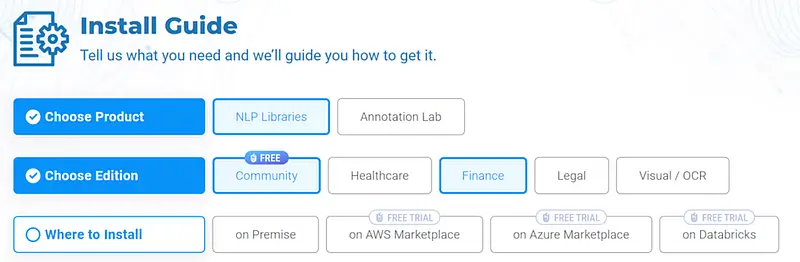

Fancy trying?

We’ve got 30-day free licenses for you, with technical support from our team of technical and financial subject-matter experts (SMEs). The trial includes complete access to more than 150 models, including Classification, NER, Relation Extraction, Similarity Search, Summarization, Sentiment Analysis, and Question Answering, as well as 50+ financial language models.

Just go to https://www.johnsnowlabs.com/install/ and follow the instructions!

Don’t forget to check our notebooks and demos.

How to run

Finance NLP is relatively easy to run on both clusters and driver-only environments using the johnsnowlabs library.

Install the johnsnowlabs library:

```shell
pip install johnsnowlabs
```

Then, in Python, install Finance NLP with:

```python
from johnsnowlabs import nlp

nlp.install(force_browser=True)
```

Then, we can import the Finance NLP module and start working with Spark.

```python
from johnsnowlabs import nlp, finance

# Start Spark Session
spark = nlp.start()
```

For alternative installation methods in specific environments, please check the docs.