As presented in Part 1 – Test Suites of this article, Generative AI Lab offers comprehensive functionality that enables teams of domain experts to train, test, and refine custom language models for production use across a variety of common tasks, all without writing any code.

By integrating John Snow Labs’ LangTest framework, Generative AI Lab supports automated test case generation, test execution, and model testing across a range of test categories.

In this article, we focus on setting up and configuring tests, generating test cases automatically, and starting test execution in Generative AI Lab.

Part 3 – Running Tests of this article covers model testing and the exploration of test results for the various test categories in the Generative AI Lab application.

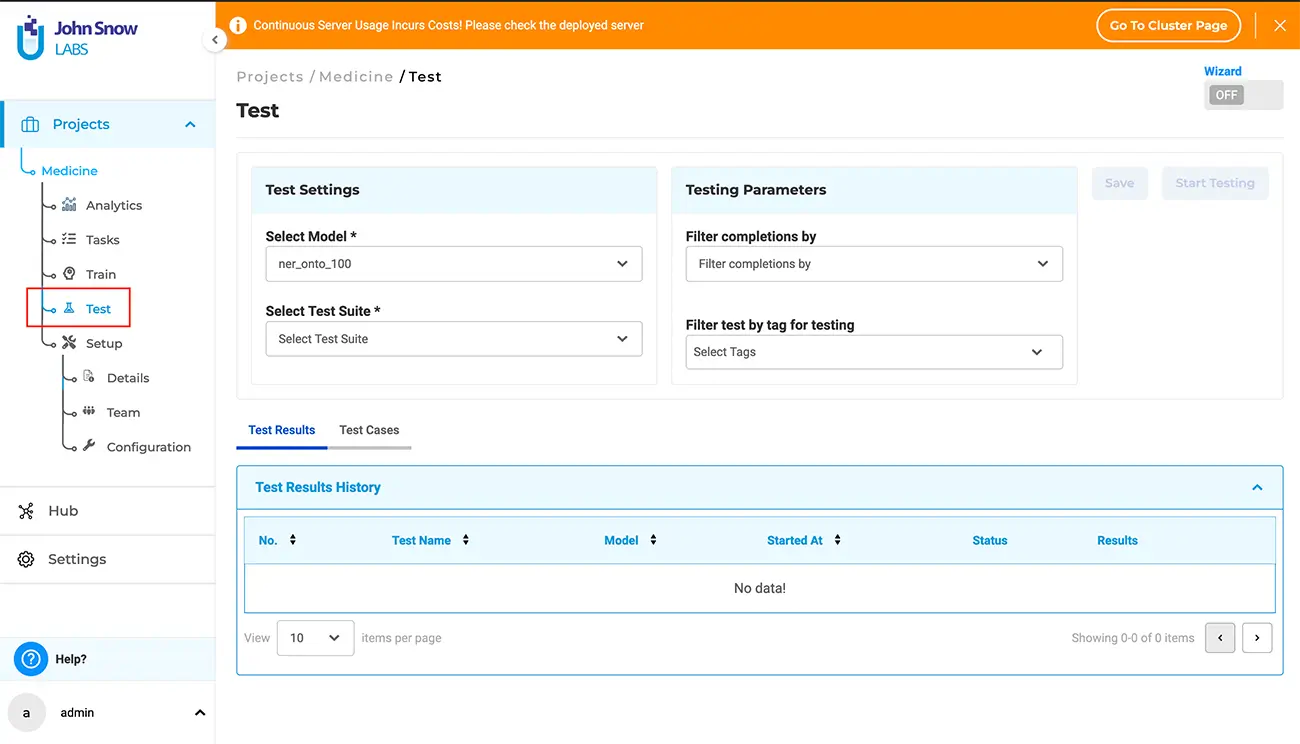

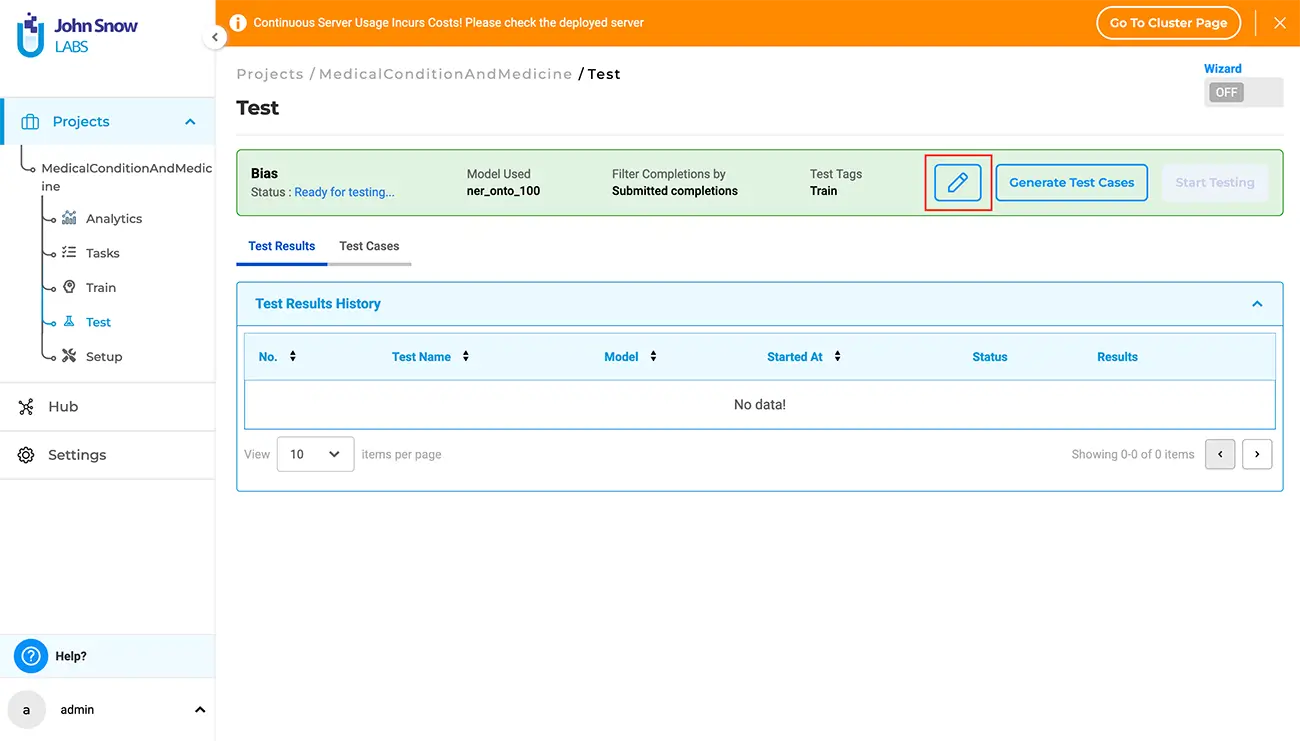

The “Test” page under the Project Menu

Clicking the “Test” node in the left navigation bar of your active project takes you to the “Test” page, where you can manage tests and run model testing.

On this page, project managers can configure the required settings and corresponding parameters, create and edit test cases, start and stop model testing, review test logs, and review, download, or delete test results.

Note: The functionality on the Test page is available only to users with the project manager role.

Test – setup and configuration

On the “Test” page of a specific project, the user specifies which tests will be used to assess the quality of the project’s model.

There are two mandatory settings that must be filled in:

- Select Model: Select the pretrained/trained model that is used to predict annotations for the tasks/documents in the current project. The user can choose a model from the dropdown. All available models configured within the project are listed in this dropdown menu for selection.

- Select Test Suite: The test suite comprises a collection of tests designed to evaluate your trained model in various scenarios.

The user can choose an existing test suite from the dropdown menu or create a new one by clicking on “+ Create New Test Suite” within the dropdown menu.

Note: The option to create a new test suite is available only to supervisor and admin users with the manager role.

The “Test Parameters” section offers two optional configuration settings:

- “Filter completions by”

This option enables users to narrow down their analysis by selecting tasks based on their completion status. The available options are:

- Clear “Filter completions by”: Removes the selected completion filter.

- Submitted completions: Selects only tasks with submitted completions for analysis.

- Reviewed completions: Selects only tasks with reviewed completions for analysis.

- “Filter test by tag”

This option enables users to refine their analysis by selecting only project tasks associated with specific tags. The dropdown lists the default tags, such as “Validated”, “Test”, “Corrections Needed”, and “Train”, as well as any custom tags created within the project.

Selecting tags restricts model testing to the tasks carrying those tags; if no tags are selected, all tasks are considered for analysis.
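The two filters can be pictured as a simple selection rule over the project’s tasks. The Python sketch below is illustrative only: `Task`, `select_tasks`, and the option strings are hypothetical names, not part of Generative AI Lab. It assumes that both filters are applied together, and that a task passes the tag filter if it carries any of the selected tags.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterable, Optional

# Hypothetical option values standing in for "Submitted completions" and
# "Reviewed completions"; None stands for a cleared "Filter completions by".
COMPLETION_FILTERS = {None, "submitted", "reviewed"}


@dataclass
class Task:
    """Hypothetical, simplified view of a project task."""
    task_id: int
    tags: set = field(default_factory=set)
    has_submitted: bool = False
    has_reviewed: bool = False


def select_tasks(
    tasks: Iterable[Task],
    completion_filter: Optional[str] = None,
    tags: Optional[set] = None,
) -> list[Task]:
    """Return the tasks that would be considered for model testing."""
    if completion_filter not in COMPLETION_FILTERS:
        raise ValueError(f"unknown completion filter: {completion_filter!r}")
    selected = []
    for task in tasks:
        # Completion-status filter.
        if completion_filter == "submitted" and not task.has_submitted:
            continue
        if completion_filter == "reviewed" and not task.has_reviewed:
            continue
        # Tag filter: no tags selected means every task is considered.
        # Assumption: a task matches if it carries ANY of the selected tags.
        if tags and not (task.tags & set(tags)):
            continue
        selected.append(task)
    return selected


# Hypothetical sample data.
sample_tasks = [
    Task(1, {"Test"}, has_submitted=True),
    Task(2, {"Train", "Validated"}, has_submitted=True, has_reviewed=True),
    Task(3),
]
```

For example, `select_tasks(sample_tasks, "submitted", {"Train"})` keeps only task 2, while `select_tasks(sample_tasks)` with no filters keeps all three tasks.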

Methods for configuring tests

There are two methods for configuring the Test Settings and Test Parameters: manual setup and Wizard mode.

1. Manual setup method

This method gives the user full control over the entire configuration. Steps:

- Go to the “Test” page.

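- In the Test Settings section, choose a model from the “Select Model” dropdown.

- In the same section, choose a test suite from the “Select Test Suite” dropdown, or create a new one by clicking “+ Create New Test Suite”.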

- Optionally, within the Testing Parameters section, choose a completion status from the “Filter completions by” dropdown.

- Optionally, also within Testing Parameters, select tags from the “Filter test by tag for testing” dropdown.

- Click the “Save” button to confirm your selections and save the configuration.

2. Wizard Mode (Guided Setup)

This method guides the user through the configuration step by step, which helps when some assistance is needed. Steps:

- Click the “Wizard” button to initiate Wizard mode. This will reveal the “Test Setting” tab, offering detailed information about Model Selection and Test Suite.

- From the “Select Model” dropdown, choose a model.

- Select a test suite from the dropdown or create a new one by clicking “+ Create New Test Suite”.

- Click “Next” to proceed to the “Test Parameters” tab, where you’ll see detailed information about “Filter completions by” and “Tags”.

- Within the “Filter completions by” dropdown, select the appropriate option.

- Choose one or more tags, or none, from the “Filter test by tag for testing” dropdown.

- Click “Next” to save the Test Settings and Parameters.

To modify the Test Settings and Parameters later, click the “Edit” icon.

Automatic Test Case Generation

After the Test Settings and Parameters are saved, the following options become available: “Generate Test Cases”, “Start Testing”, and “Edit”. Generating test cases and running the tests are separate steps, and users must trigger each one explicitly.

Clicking on “Generate Test Cases” will start the process of test case generation based on the saved Test Settings and Parameters. The generated test cases will appear under the “Test Cases” tab.

Note: For the Bias and Robustness categories, test cases can be edited and updated by the user prior to launching the test execution phase.

Modifying Test Settings or Parameters and generating new test cases will discard any existing ones.
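The save-then-generate flow described above can be summarized in a short Python sketch. All names (`RunConfig`, `GeneratedTestCase`, `ProjectTestPage`, `demo_generator`) are hypothetical stand-ins for actions performed in the UI. The sketch assumes that existing test cases are discarded at the moment new ones are generated, and its placeholder generator does not reproduce LangTest’s actual test case generation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical model of the saved Test Settings and Test Parameters."""
    model: str                                # "Select Model" (mandatory)
    test_suite: str                           # "Select Test Suite" (mandatory)
    completion_filter: Optional[str] = None   # "Filter completions by" (optional)
    tags: frozenset = frozenset()             # "Filter test by tag" (optional)


@dataclass
class GeneratedTestCase:
    category: str   # e.g. "robustness" or "bias"
    original: str
    perturbed: str


Generator = Callable[[RunConfig], Iterable[GeneratedTestCase]]


class ProjectTestPage:
    def __init__(self) -> None:
        self.config: Optional[RunConfig] = None
        self.test_cases: list[GeneratedTestCase] = []

    def save(self, config: RunConfig) -> None:
        # Both mandatory Test Settings must be filled in before saving.
        if not config.model or not config.test_suite:
            raise ValueError("a model and a test suite must be selected")
        self.config = config

    def generate_test_cases(self, generator: Generator) -> list[GeneratedTestCase]:
        # "Generate Test Cases" is only available after a configuration is saved.
        if self.config is None:
            raise RuntimeError("save the Test Settings and Parameters first")
        # Regeneration replaces the previous test cases instead of appending.
        self.test_cases = list(generator(self.config))
        return self.test_cases


def demo_generator(config: RunConfig) -> Iterable[GeneratedTestCase]:
    # Hypothetical placeholder that yields one fixed robustness test case.
    yield GeneratedTestCase("robustness", "John lives in Paris.", "JOHN LIVES IN PARIS.")


if __name__ == "__main__":
    page = ProjectTestPage()
    page.save(RunConfig("my_ner_model", "my_suite"))
    page.generate_test_cases(demo_generator)
    page.save(RunConfig("my_ner_model", "my_suite", tags=frozenset({"Test"})))
    page.generate_test_cases(demo_generator)
    print(len(page.test_cases))  # the old case was replaced, not kept
```

Keeping generation as an explicit call, separate from saving, mirrors the page’s behavior of exposing “Generate Test Cases” and “Start Testing” as independent actions.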

Getting Started is Easy

Generative AI Lab is a text annotation tool that can be deployed in a couple of clicks on either the Amazon or Azure cloud, or installed on-premises with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install