Although there is significant discussion about the importance of training safe, robust, and fair AI models, data scientists have very few tools to help them achieve these goals.

In production settings, especially within regulated environments, it is crucial to evaluate these models beyond simple accuracy metrics. For instance, how resilient are they to minor variations in input text or prompts? Do they exhibit biases towards specific genders, ethnicities, or other vulnerable groups?

Comprehensive testing is therefore essential before any model is deployed to production, and it is an integral part of the model development lifecycle.

These tests should run automatically, for example as part of the MLOps pipeline, before each new version of a model is released to production, much like automated testing in traditional software engineering.

One significant advantage of Generative AI over classic unit testing is that many types of test cases can be generated automatically. Human testers can still add and modify tests, but this automation substantially reduces the manual effort involved in creating test cases.

A central repository for test suites is also necessary to facilitate sharing, versioning, and reuse across projects and models. For instance, different models designed for the same task can be evaluated thoroughly with the same test suites.

The Generative AI Lab supports this comprehensive functionality, enabling teams of domain experts to train, test, and refine custom language models for production use across various common tasks, all without requiring any coding.

In this article, we describe supported test categories and then focus on managing test suites inside the Generative AI Lab.

Test Categories: Accuracy, Bias, Robustness, Fairness and more

The test categories available for assessing entity recognition models in Generative AI Lab are described below.

1. ACCURACY

The goal of Accuracy testing is to give users a realistic, useful picture of how accurate the model is, so they can make informed decisions about when and where to use it in real-life situations.

Accuracy tests evaluate the correctness of a model's predictions. This category comprises six test types. Three of them, “Min F1 Score”, “Min Precision Score”, and “Min Recall Score”, require the user to provide model labels. Labels are added in the “Add Model Labels” section, which becomes active as soon as the corresponding test checkbox is selected.

For more details about Accuracy tests, visit the LangTest Accuracy Documentation.

Note: Tests using custom labels require tasks with ground truth data.
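The threshold logic behind the “Min F1 Score”, “Min Precision Score”, and “Min Recall Score” tests can be illustrated with a minimal Python sketch. It assumes entity-level true-positive, false-positive, and false-negative counts per label have already been obtained by comparing predictions with the ground truth; `LabelCounts`, `min_score_test`, and the example counts are hypothetical and are not part of LangTest or Generative AI Lab.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LabelCounts:
    """Entity-level counts for one label, measured against ground-truth annotations."""
    tp: int  # predicted entities that match a ground-truth entity
    fp: int  # predicted entities with no matching ground-truth entity
    fn: int  # ground-truth entities the model missed


def precision(c: LabelCounts) -> float:
    return c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def recall(c: LabelCounts) -> float:
    return c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0


def f1(c: LabelCounts) -> float:
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if p + r else 0.0


def min_score_test(
    counts: dict[str, LabelCounts],
    metric: Callable[[LabelCounts], float],
    min_score: float,
) -> dict[str, bool]:
    """Pass/fail per selected model label: does the metric reach the threshold?"""
    return {label: metric(c) >= min_score for label, c in counts.items()}


counts = {"PER": LabelCounts(tp=8, fp=2, fn=0), "LOC": LabelCounts(tp=3, fp=1, fn=3)}
print(min_score_test(counts, f1, min_score=0.7))  # {'PER': True, 'LOC': False}
```

Here LOC fails because its recall (0.5) pulls its F1 down to 0.6, even though its precision is 0.75.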

2. BIAS

Model bias tests assess whether a model's predictions change when demographic details in the input change. Detecting and mitigating model bias is essential to prevent negative consequences such as perpetuating stereotypes or discrimination. These tests replace references to gender, ethnicity, religion, or country in the documents and compare the model's predictions on the modified text with its predictions on the original text, helping identify and rectify potential biases. The LangTest framework supports more than 20 distinct test types in the Bias category.

For detailed information on the Bias category, supported tests, and samples, please refer to the LangTest Bias Documentation.
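A gender-swap bias test of the kind described above might look roughly like the following sketch. The substitution table, the deliberately biased `toy_ner` model, and `bias_pass_rate` are hypothetical illustrations, not LangTest's implementation, which relies on its own word lists and comparison rules.

```python
import re

# Hypothetical substitution table for a gender-swap test. Matching is
# case-sensitive and whole-word only; real test suites use larger curated lists.
SWAPS = {"he": "she", "him": "her", "his": "her", "John": "Maria"}


def perturb(text: str, swaps: dict[str, str]) -> str:
    """Replace every whole word that appears as a key in `swaps`."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, swaps)) + r")\b")
    return pattern.sub(lambda m: swaps[m.group(1)], text)


def toy_ner(text: str) -> list[str]:
    """Stand-in model that only recognises names it has 'seen' (deliberately biased)."""
    known_names = {"John", "Peter"}
    return ["PER" if tok.strip(".,") in known_names else "O" for tok in text.split()]


def bias_pass_rate(texts: list[str], model, swaps: dict[str, str]) -> float:
    """Fraction of documents whose predicted labels are unchanged after the swap."""
    unchanged = sum(model(t) == model(perturb(t, swaps)) for t in texts)
    return unchanged / len(texts)


texts = ["John said he would call.", "The meeting is in Paris."]
print(perturb(texts[0], SWAPS))  # Maria said she would call.
print(bias_pass_rate(texts, toy_ner, SWAPS))  # 0.5
```

The first document fails because the toy model stops recognising the person once the name is swapped; the second contains nothing to swap, so its predictions cannot change.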

3. FAIRNESS

Fairness testing evaluates whether a model performs equally well across groups, particularly specific demographic groups. The goal is to ensure unbiased results for all groups, without favoritism or discrimination. Tests focused on attributes such as gender contribute to this evaluation, promoting fairness and equality in model outcomes.

This category comprises two test types: “Max Gender F1 Score” and “Min Gender F1 Score”.

Further information on Fairness tests can be accessed through the LangTest Fairness Documentation.
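The two Fairness test types can be sketched as lower and upper bounds on per-group F1 scores. This sketch assumes each document is already tagged with the gender group it refers to and compares token-level labels; the function names and data are hypothetical, and LangTest may compute its scores differently.

```python
from collections import defaultdict


def f1_from_pairs(pairs: list[tuple[str, str]]) -> float:
    """Token-level F1 over entity tokens from (gold, predicted) pairs; 'O' means no entity."""
    tp = sum(1 for g, p in pairs if g != "O" and g == p)
    fp = sum(1 for g, p in pairs if p != "O" and g != p)
    fn = sum(1 for g, p in pairs if g != "O" and g != p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def f1_by_group(samples) -> dict[str, float]:
    """samples: (group, gold_labels, predicted_labels) triples, one per document."""
    pairs = defaultdict(list)
    for group, gold, pred in samples:
        pairs[group].extend(zip(gold, pred))
    return {group: f1_from_pairs(p) for group, p in pairs.items()}


def min_gender_f1_test(scores: dict[str, float], min_score: float) -> dict[str, bool]:
    return {group: s >= min_score for group, s in scores.items()}


def max_gender_f1_test(scores: dict[str, float], max_score: float) -> dict[str, bool]:
    return {group: s <= max_score for group, s in scores.items()}


samples = [
    ("female", ["PER", "O", "LOC"], ["PER", "O", "O"]),
    ("male", ["PER", "O"], ["PER", "O"]),
]
scores = f1_by_group(samples)
print(min_gender_f1_test(scores, min_score=0.6))  # {'female': True, 'male': True}
print(max_gender_f1_test(scores, max_score=0.95))  # {'female': True, 'male': False}
```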

4. PERFORMANCE

Performance tests gauge the efficiency and speed of a language model's predictions. This category consists of one test type, “speed”, which evaluates how fast the model executes, measured in terms of the tokens it processes.

Further information on Performance tests can be accessed through the LangTest Performance Documentation.
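A speed check could be sketched as a throughput measurement in tokens per second compared against a minimum threshold. Counting whitespace-separated tokens, the `slow_model` stand-in, and the `min_tokens_per_sec` parameter are assumptions made for illustration only.

```python
import time


def tokens_per_second(model, texts: list[str]) -> float:
    """Throughput of `model` over `texts`, counting whitespace-separated tokens."""
    n_tokens = sum(len(t.split()) for t in texts)
    start = time.perf_counter()
    for t in texts:
        model(t)
    elapsed = time.perf_counter() - start
    return n_tokens / elapsed if elapsed > 0 else float("inf")


def speed_test(model, texts: list[str], min_tokens_per_sec: float) -> bool:
    return tokens_per_second(model, texts) >= min_tokens_per_sec


def slow_model(text: str) -> list[str]:
    time.sleep(0.01)  # simulate roughly 10 ms of inference per document
    return ["O"] * len(text.split())


docs = ["Maria moved to Berlin in 2020"] * 5
print(round(tokens_per_second(slow_model, docs)))
```

Because the timing depends on the machine, the printed value varies between runs; with five 6-token documents and at least 10 ms per document, it cannot exceed about 600 tokens per second.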

5. REPRESENTATION

Representation testing assesses whether a dataset accurately represents a specific population. It aims to identify potential biases within the dataset that could impact the results of any analysis, ensuring that the data used for training and testing is representative and unbiased.

For additional details on Representation tests, please visit the LangTest Representation Documentation.
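One simple form of representation check compares each group's share of the dataset with a minimum proportion. This sketch assumes every document already carries a single group label; `representation_test` and the example labels are hypothetical.

```python
from collections import Counter


def representation_test(
    group_labels: list[str], expected_groups: list[str], min_proportion: float
) -> dict[str, bool]:
    """Check that each expected group makes up at least `min_proportion` of the dataset."""
    counts = Counter(group_labels)  # missing groups count as 0
    total = len(group_labels)
    return {g: total > 0 and counts[g] / total >= min_proportion for g in expected_groups}


# One group label per document; how documents get labelled is assumed here.
labels = ["male"] * 7 + ["female"] * 3
print(representation_test(labels, ["male", "female", "other"], min_proportion=0.2))
# {'male': True, 'female': True, 'other': False}
```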

6. ROBUSTNESS

Model robustness tests evaluate a model’s ability to maintain consistent performance when subjected to perturbations in the data it predicts. For tasks like Named Entity Recognition (NER), these tests assess how variations in input data, such as documents with typos or fully uppercased sentences, impact the model’s prediction performance. This provides insights into the model’s stability and reliability.

More information on Robustness tests is available in the LangTest Robustness Documentation.
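The two perturbations mentioned above, uppercasing and typos, can be sketched in Python together with a pass rate that counts documents whose predictions do not change. The case-sensitive `toy_ner` model and the adjacent-character-swap typo rule are hypothetical stand-ins, not LangTest's perturbation logic.

```python
import random


def uppercase(text: str) -> str:
    return text.upper()


def add_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters inside one randomly chosen word of 4+ characters."""
    words = text.split(" ")
    candidates = [i for i, w in enumerate(words) if len(w) >= 4]
    if not candidates:
        return text
    i = rng.choice(candidates)
    w = words[i]
    j = rng.randrange(len(w) - 1)
    words[i] = w[:j] + w[j + 1] + w[j] + w[j + 2:]
    return " ".join(words)


def toy_ner(text: str) -> list[str]:
    """Stand-in, case-sensitive model: tags a fixed list of city names as LOC."""
    cities = {"Paris", "London"}
    return ["LOC" if tok in cities else "O" for tok in text.split()]


def robustness_pass_rate(texts: list[str], model, perturbation) -> float:
    """Fraction of documents whose predictions are unchanged by the perturbation."""
    unchanged = sum(model(t) == model(perturbation(t)) for t in texts)
    return unchanged / len(texts)


texts = ["I live in Paris", "the sky is blue"]
print(robustness_pass_rate(texts, toy_ner, uppercase))  # 0.5
rng = random.Random(0)
print(robustness_pass_rate(texts, toy_ner, lambda t: add_typo(t, rng)))
```

The typo pass rate depends on which word the random generator picks, so only the uppercase result is fixed for this input.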

For more details about the categories and the tests they support, see the LangTest categories documentation.

Reusable and Shareable Test Suites

In Generative AI Lab, a Test Suite is a collection of tests designed to evaluate your trained model across various scenarios. LangTest, the framework Generative AI Lab uses to assess language models, focuses on dimensions such as robustness, representation, and fairness, and subjects models to a series of tests in these areas. Through iterative training cycles, the models are continuously improved until they achieve satisfactory results, which helps ensure they can handle diverse scenarios and meet the essential requirements for reliable and effective language processing.

Shared Repository – Test Suites Hub

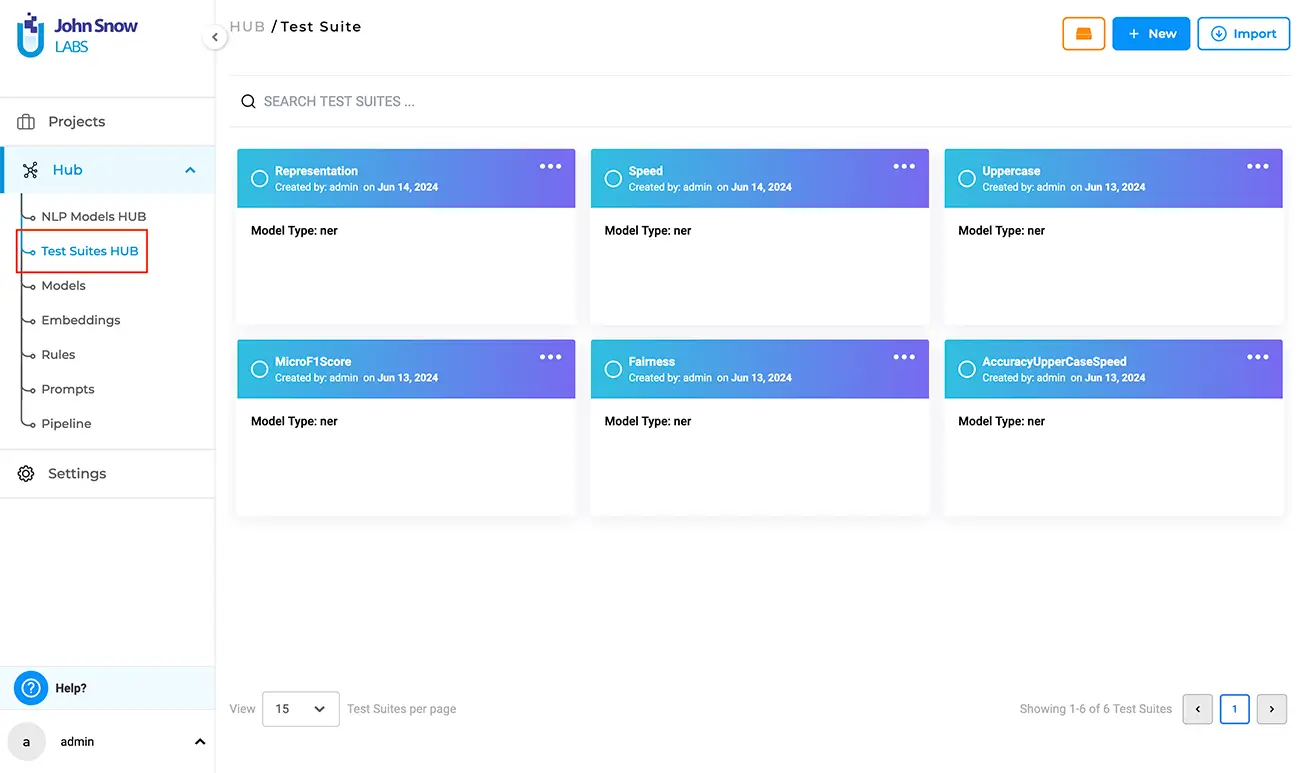

The “Test Suites Hub” option, under the Hub parent node in the left side panel, is where Test Suites are saved upon creation and where they can be managed. Clicking Test Suites Hub opens the “Test Suite” page, which lists all existing Test Suites the user has access to.

Create, Update, Delete, Import, Export, and Search Test Suites

Creating a Test Suite is easy, and there are two ways to do it. The first option is to create your Test Suite in the context of your project, using the “Test” page under the parent project; once saved, the Test Suite is automatically shared on the “Test Suites Hub” page.

The second option is to create your test suite directly on the “Test Suites Hub” page.

Regardless of how you create your Test Suite, once saved it is automatically shared and becomes available to every other project you have access to: just select it from the list of available test suites, generate the tests, and execute them.

Test Suite – Create

Creating a new Test Suite from the “Test Suite” page is straightforward:

- In the Test Suite page, click on the “New” button to open the “Add Test Suite” form.

- Provide a name (mandatory) and a description (optional) for the test suite.

- Under “TESTS”, click a category (for example, Robustness or Bias) to expand it and view all the test types available in that category.

- Select the checkboxes for the test types you want to include.

- Provide or modify the necessary details for each selected test type.

- Repeat these steps for every category you want to include in the test suite.

- When the configuration is complete, click the “Save” button to save your work.

Test Suite – Edit

To edit an existing Test Suite, navigate to the “Test Suites Hub” page and follow these steps:

- Click on the three dots at the top-right corner of the test suite card to display the three options available: “Export”, “Edit”, and “Delete”.

- Selecting “Edit” takes you to the “Edit Test Suite” page.

- Modify the description as necessary.

- Under “LIST OF TESTS”, view all previously configured test categories and test types. Use the filter to quickly find the test category you need to edit. Selecting a test category displays its test types and their configured values. Clicking the three dots next to a test type presents two options: “Edit” and “Delete”. “Delete” deselects the test type, while “Edit” takes you to the corresponding test type under TESTS, where you can modify its values. You can also edit each test type directly in the TESTS section of the test suite.

- Click the “Save” button to apply the changes.

Note: The name and model type of a test suite cannot be modified.
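The create and edit rules above (a mandatory name, an optional description, tests grouped by category and test type, and a name and model type that cannot change) can be captured in a small Python model. `Suite`, its methods, and the parameter names `min_pass_rate` and `min_score` are hypothetical illustrations, not the Generative AI Lab data model.

```python
class Suite:
    """Hypothetical in-memory model of a test suite; not the Generative AI Lab API."""

    def __init__(self, name: str, model_type: str, description: str = ""):
        if not name.strip():
            raise ValueError("a test suite name is mandatory")
        self._name = name
        self._model_type = model_type
        self.description = description  # optional, editable later
        self.tests: dict[str, dict[str, dict]] = {}  # category -> test type -> values

    @property
    def name(self) -> str:  # read-only: there is no setter
        return self._name

    @property
    def model_type(self) -> str:  # read-only as well
        return self._model_type

    def set_test(self, category: str, test_type: str, **values) -> None:
        """Select a test type in a category, or overwrite its configured values."""
        self.tests.setdefault(category, {})[test_type] = values

    def delete_test(self, category: str, test_type: str) -> None:
        """Deselect a test type; drop the category once it has no test types left."""
        types = self.tests.get(category, {})
        types.pop(test_type, None)
        if category in self.tests and not types:
            del self.tests[category]


suite = Suite("ner-regression", model_type="ner")
suite.set_test("robustness", "uppercase", min_pass_rate=0.7)
suite.set_test("accuracy", "min_f1_score", min_score=0.8)
suite.delete_test("accuracy", "min_f1_score")
suite.description = "Nightly checks for the NER model"
print(suite.tests)  # {'robustness': {'uppercase': {'min_pass_rate': 0.7}}}
```

Assigning to `suite.name` raises `AttributeError`, mirroring the rule that a saved suite keeps its name and model type.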

Full-screen Mode and Search

To boost productivity, you can create or edit a test suite in full-screen mode and use the search functionality to quickly locate specific tests within the “TESTS” section.

Test Suite – Delete

To delete a test suite from the “Test Suite” page, follow these steps:

- Locate the test suite you wish to delete and click on the three dots next to it. This will reveal three options: “Export”, “Edit”, and “Delete”.

- Select the “Delete” option.

- A confirmation pop-up appears. Click “Yes”.

- The test suite will be deleted, and a deletion message will confirm the action.

Note: A test suite that is used in at least one project in your enterprise cannot be deleted.
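The deletion rule in the note above amounts to a guard like the following sketch. The `hub` and `usage` dictionaries and `delete_suite` are hypothetical stand-ins for however the application tracks suites and the projects that use them.

```python
def delete_suite(hub: dict[str, dict], usage: dict[str, set[str]], name: str) -> None:
    """Delete `name` from `hub` unless at least one project still uses it.

    hub:   suite name -> suite definition
    usage: suite name -> names of the projects that use the suite
    """
    projects = usage.get(name, set())
    if projects:
        raise PermissionError(f"'{name}' is still used by: {', '.join(sorted(projects))}")
    del hub[name]  # raises KeyError if no such suite exists


hub = {"shared-ner": {}, "scratch": {}}
usage = {"shared-ner": {"Clinical NER"}}
delete_suite(hub, usage, "scratch")
print(sorted(hub))  # ['shared-ner']
```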

Test Suite – Import and Export

Imagine a scenario where you want to reuse an existing shared Test Suite created by someone else in your organization, but you need to include a few additional tests that are relevant for evaluating the language model you manage in your project. To save time, you can “clone” the existing test suite with a simple export/import operation, then add tests or remove existing ones without affecting the original test suite used in another project.

Users can export and import test suites on the “Test Suites Hub”. To export a test suite from the “Test Suite” page, follow these steps:

- Click on the ellipsis symbol located next to the test suite you wish to export. This will present three options: “Export”, “Edit”, and “Delete”.

- Click “Export”.

- The test suite is saved as a **.json** file, and a confirmation message indicates that the export succeeded.

Users can import a test suite into the “Test Suites Hub” by following these steps:

- Navigate to the “Test Suite” page and click on the “Import” button to open the “Import Test Suite” page.

- Either drag and drop the test suite file into the designated area or click to import the test suite file from your local file system.

- Upon successful import, a confirmation message will be displayed.

- You can then view the imported test suite on the “Test Suite” page.
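The export/import “clone” workflow can be sketched as a JSON round trip through a file: the imported copy is a new object, so editing it leaves the original untouched. The suite layout below is a hypothetical example; the actual structure of an exported **.json** file may differ, and `replace_to_female_pronouns` is used only as an illustrative test-type name.

```python
import json
import tempfile
from pathlib import Path


def export_suite(suite: dict, path: Path) -> None:
    """Write a suite definition to a .json file."""
    path.write_text(json.dumps(suite, indent=2), encoding="utf-8")


def import_suite(path: Path) -> dict:
    """Read a .json file back into a brand-new, independent suite definition."""
    return json.loads(path.read_text(encoding="utf-8"))


# Hypothetical layout; the real export format may differ.
original = {
    "name": "shared-ner-suite",
    "model_type": "ner",
    "tests": {"robustness": {"uppercase": {"min_pass_rate": 0.7}}},
}

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "shared-ner-suite.json"
    export_suite(original, path)
    clone = import_suite(path)

# Extend the clone only; the shared original keeps its configuration.
clone["tests"].setdefault("bias", {})["replace_to_female_pronouns"] = {"min_pass_rate": 0.7}
print("bias" in original["tests"], "bias" in clone["tests"])  # False True
```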

Searching for a specific Test Suite

To find a specific Test Suite by name, type in the “SEARCH TEST SUITES …” search bar on the “Test Suite” page.
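Matching by name could work like the following sketch. Case-insensitive substring matching is an assumption; the exact rule the search bar applies is not described here, and `search_suites` is a hypothetical helper.

```python
def search_suites(names: list[str], query: str) -> list[str]:
    """Return the suite names that contain `query`, ignoring case (assumed matching rule)."""
    q = query.casefold()
    return [n for n in names if q in n.casefold()]


print(search_suites(["NER Robustness", "Clinical Bias", "ner-fairness"], "ner"))
# ['NER Robustness', 'ner-fairness']
```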

Getting Started is Easy

Generative AI Lab is a text annotation tool that can be deployed in a couple of clicks using either Amazon or Azure cloud providers, or installed on-premise with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install